I have a SQL Query which I want to change into pyspark. we have a Column called CGPA which contains some values and i need to divide the values by 25 where our conditions are matching

UPDATE STUDENT_TABLE

SET STUDENT_TABLE.CGPA = ([STUDENT_TABLE].CGPA/25)

WHERE (((STUDENT_TABLE.CGPA)>"5" Or (STUDENT_TABLE.CGPA)="100"));

The Pyspark Code i am trying is not working

df=df.withColumn('CGPA' , F.when((col('CGPA') > '5') & (col('CGPA') == '100'),(df.CGPA/25) .otherwise(df['CGPA])

CodePudding user response:

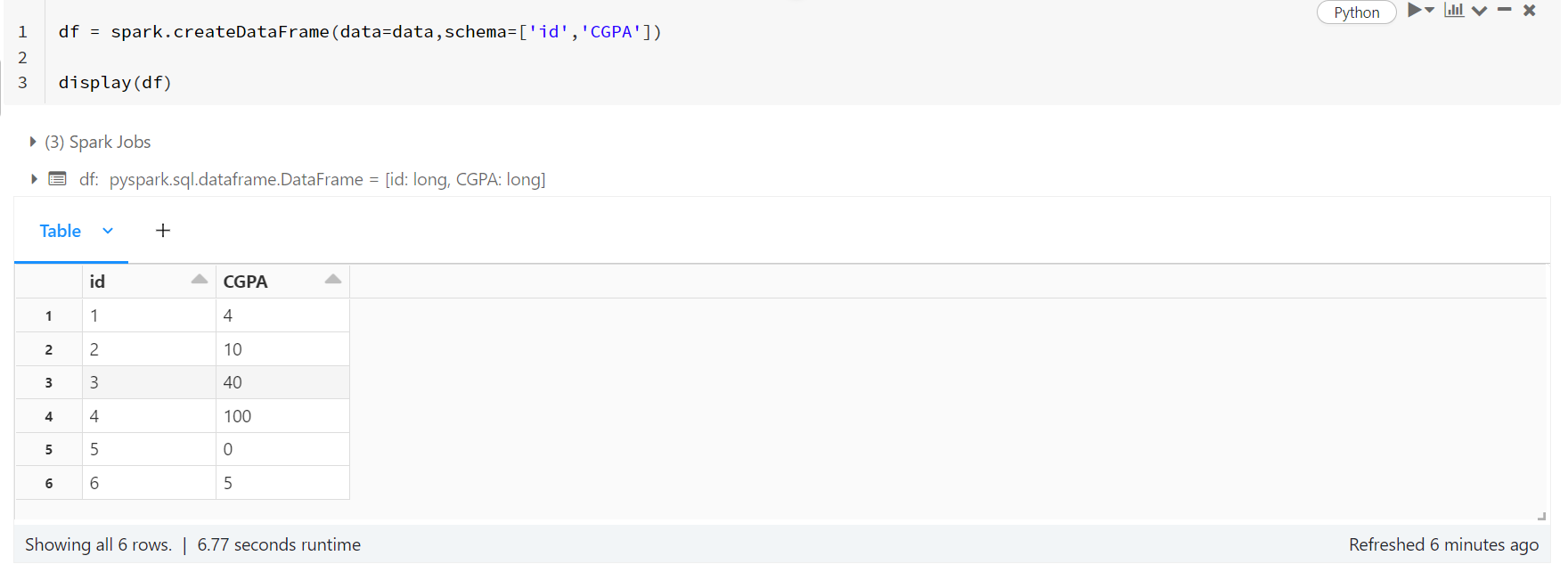

I have taken the following sample data for my dataframe to demonstrate.

data = [[1,4],[2,10],[3,40],[4,100],[5,0],[6,5]]

df = spark.createDataFrame(data=data,schema=['id','CGPA'])

display(df)

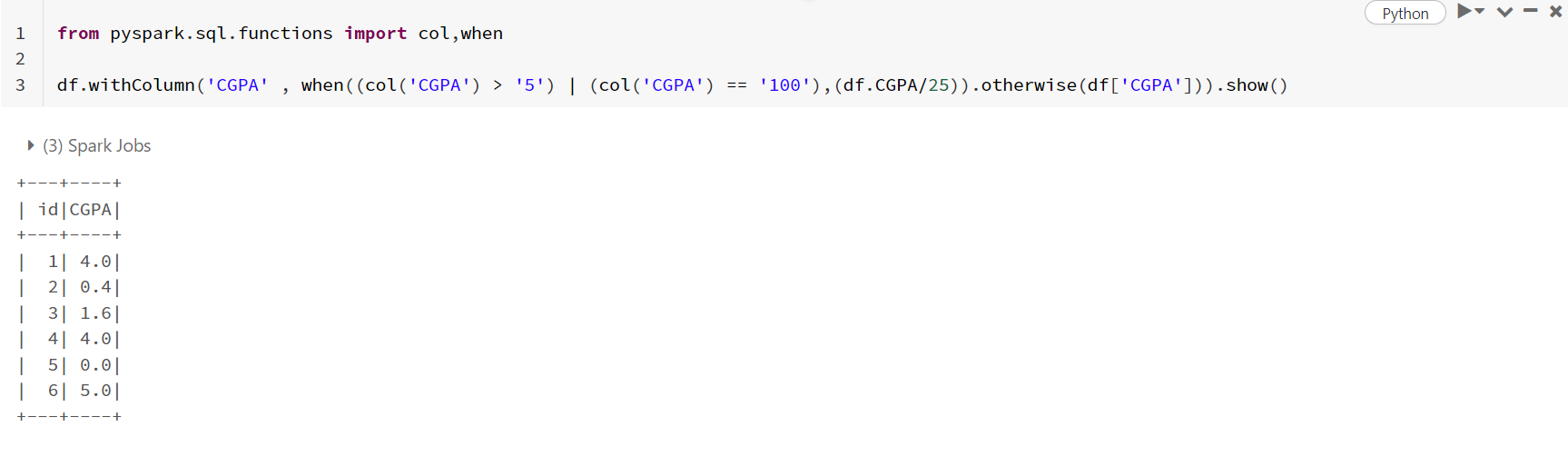

- Looking at your SQL query, as you want to update when either of the conditions is true (i.e., or condition in SQL UPDATE), you can use the following code:

from pyspark.sql.functions import col,when

df.withColumn('CGPA' , when((col('CGPA') > '5') | (col('CGPA') == '100'),(df.CGPA/25)).otherwise(df['CGPA'])).show()

#df = df.withColumn('CGPA' , when((col('CGPA') > '5') | (col('CGPA') == '100'),(df.CGPA/25)).otherwise(df['CGPA']))

#df.show()

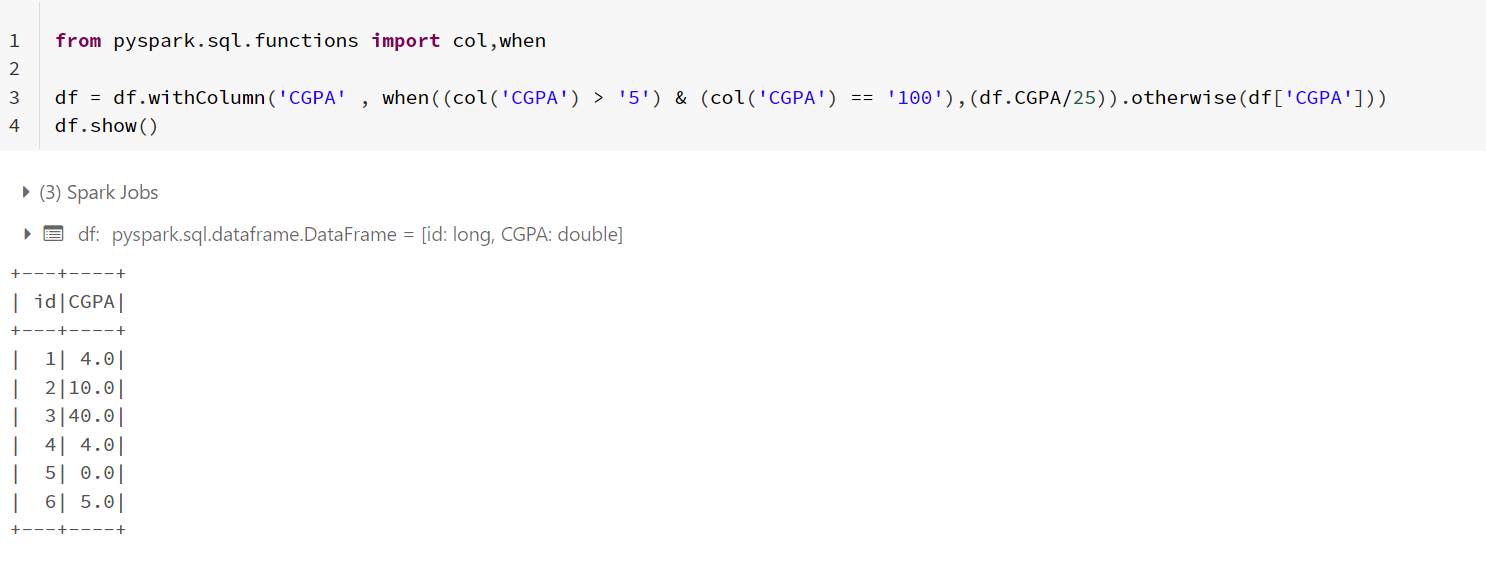

If condition is AND, then use this code:

from pyspark.sql.functions import col,when

df = df.withColumn('CGPA' , when((col('CGPA') > '5') & (col('CGPA') == '100'),(df.CGPA/25)).otherwise(df['CGPA']))

df.show()