I trained a model and uploaded it to Google AI Platform. When I test the model from the command line, I expect to get predictions back from my uploaded model; instead, I get an error message. Here are the steps I followed:

- Installed Gcloud

- Saved my model:

```shell
gcloud ai-platform local train \
  --module-name trainer.final_task \
  --package-path trainer/ --
```

- Manually created a bucket

- Added the file created in step 2 (`saved_model.pb`) to the bucket

- Created a model in Gcloud, configured as shown in the screenshot below

- Tested it from the command line (this is the step that produces the error):

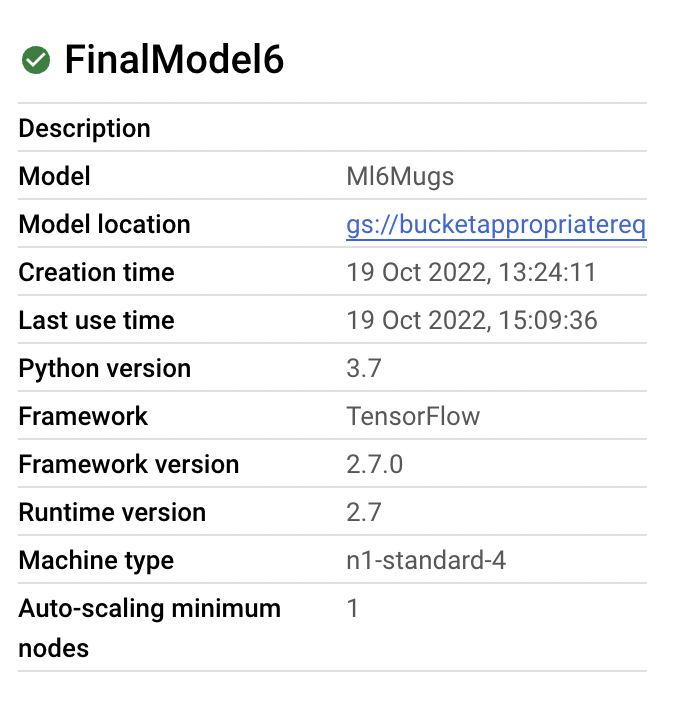

```shell
MODEL_NAME=ML6Mugs
VERSION=FinalModel6
gcloud ai-platform predict \
  --region europe-west1 \
  --model $MODEL_NAME \
  --version $VERSION \
  --json-instances check_deployed_model/test.json
```

What am I missing? It's difficult to find anything online about this issue. The only thing I found was this.
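The `--json-instances` flag in the command above reads a local file of newline-delimited JSON, one prediction instance per line. The actual contents of `check_deployed_model/test.json` aren't shown, so the following is only a minimal sketch, assuming a model with a single image input where each instance is sent as a nested `(height, width, 3)` list of floats. The helper names and the constant-valued dummy image are hypothetical; real instances would need the same preprocessing as in `data.py`.

```python
import json
from pathlib import Path


def to_json_lines(instances):
    """Serialize instances as newline-delimited JSON: one instance per line."""
    return "".join(json.dumps(instance) + "\n" for instance in instances)


def write_json_instances(path, instances):
    """Write instances to `path` in the newline-delimited format used by --json-instances."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(to_json_lines(instances), encoding="utf-8")
    return path


def dummy_image(height, width, value=0.5):
    """Hypothetical placeholder: an RGB image as a (height, width, 3) nested list."""
    return [[[value] * 3 for _ in range(width)] for _ in range(height)]
```

For example, `write_json_instances("check_deployed_model/test.json", [dummy_image(224, 224)])` writes a single 224x224 instance (MobileNetV2's default input size; adjust to your model's input shape).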

Architecture of my model

```python
import tensorflow as tf
from tensorflow.keras import layers, models


def model(input_layer):
    """Returns a compiled model.

    This function is expected to return a model to identify the different
    mugs. The model's outputs are expected to be probabilities for the
    classes, and it should be ready for training.

    The input layer specifies the shape of the images. The preprocessing
    applied to the images is specified in data.py.

    Add your solution below.

    Parameters:
        input_layer: A tf.keras.layers.InputLayer() specifying the shape of the input.
            RGB colored images, shape: (width, height, 3)

    Returns:
        model: A compiled model
    """
    input_shape = (input_layer.shape[1], input_layer.shape[2], input_layer.shape[3])
    # Pretrained MobileNetV2 without its classification head.
    base_model = tf.keras.applications.MobileNetV2(
        weights='imagenet', input_shape=input_shape, include_top=False)
    # Freeze the pretrained weights so only the new head is trained.
    for layer in base_model.layers:
        layer.trainable = False

    model = models.Sequential()
    model.add(base_model)
    model.add(layers.GlobalAveragePooling2D())
    # Four output classes with softmax probabilities.
    model.add(layers.Dense(4, activation='softmax'))
    model.compile(optimizer="rmsprop",
                  loss='sparse_categorical_crossentropy',
                  metrics=["accuracy"])
    return model
```

Error

```
ERROR: (gcloud.ai-platform.predict) HTTP request failed. Response: {
  "error": {
    "code": 400,
    "message": "{\n \"error\": \"Could not find variable block_15_depthwise_BN/beta. This could mean that the variable has been deleted. In TF1, it can also mean the variable is uninitialized. Debug info: container=localhost, status error message=Container localhost does not exist. (Could not find resource: localhost/block_15_depthwise_BN/beta)\\n\\t [[{{function_node __inference__wrapped_model_15632}}{{node model/sequential/mobilenetv2_1.00_224/block_15_depthwise_BN/ReadVariableOp_1}}]]\"\n}",
    "status": "INVALID_ARGUMENT"
  }
}
```

---

The issue is resolved. My problem was that I had entered the wrong path to my bucket.

Wrong

```
gs://your_bucket_name/saved_model.pb
```

Correct

```
gs://your_bucket_name/model-dir/
```
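The fix in this answer is to point the model at the folder that holds `saved_model.pb`, not at the `.pb` file itself. A minimal sketch of that rule as a path helper, assuming the export lives under a `gs://` URI; `model_dir_uri` is a hypothetical helper for illustration, not part of any Google Cloud library.

```python
import posixpath

# File names a SavedModel directory may use for its serialized graph.
SAVED_MODEL_FILES = ("saved_model.pb", "saved_model.pbtxt")


def model_dir_uri(uri):
    """Return the directory URI to use as the model path (with a trailing slash).

    If `uri` points at the saved_model.pb file itself, its parent folder is
    returned instead, matching the "Correct" form above.
    """
    if not uri.startswith("gs://"):
        raise ValueError(f"expected a gs:// URI, got {uri!r}")
    path = uri[len("gs://"):].rstrip("/")
    if posixpath.basename(path) in SAVED_MODEL_FILES:
        # Drop the file name and keep the folder that contains it.
        path = posixpath.dirname(path)
    return "gs://" + path + "/"
```

For example, `model_dir_uri("gs://your_bucket_name/model-dir/saved_model.pb")` gives `gs://your_bucket_name/model-dir/`. The helper only rewrites the string; the folder still has to contain the rest of the export.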

---

I had a similar issue.

What I did wrong: I only uploaded `saved_model.pb`.

Solution: You also need to upload the `variables` and `assets` folders that come with it.

```shell
BUCKET_NAME=<bucket-path>
MODEL_DIR=output/exported_model
gsutil cp -r $MODEL_DIR $BUCKET_NAME
```

`output/exported_model` is a folder containing the `assets` and `variables` folders and `saved_model.pb`.
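Before running `gsutil cp`, it can help to check that the local export really contains more than `saved_model.pb`. In a TensorFlow SavedModel, the trained weights (such as the `block_15_depthwise_BN/beta` variable named in the question's error) are stored under `variables/` as `variables.index` plus one or more `variables.data-*` shards. A minimal sketch, assuming that standard layout; `check_saved_model_dir` is a hypothetical helper, and it only checks that the files exist, not that the model loads.

```python
from pathlib import Path


def check_saved_model_dir(model_dir):
    """Return a list of problems with a local SavedModel export directory.

    An empty list means the expected files are present: saved_model.pb
    (or saved_model.pbtxt) plus a variables/ folder holding the index and
    data shards. assets/ is not checked because it may legitimately be empty.
    """
    root = Path(model_dir)
    if not root.is_dir():
        return [f"{root} is not a directory"]

    problems = []
    if not any((root / name).is_file() for name in ("saved_model.pb", "saved_model.pbtxt")):
        problems.append("missing saved_model.pb")

    variables = root / "variables"
    if not variables.is_dir():
        problems.append("missing variables/ folder")
    else:
        if not (variables / "variables.index").is_file():
            problems.append("missing variables/variables.index")
        if not any(variables.glob("variables.data-*")):
            problems.append("missing variables/variables.data-* shards")
    return problems
```

For example, `check_saved_model_dir("output/exported_model")` should return `[]` before you upload the folder.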