
On this site, the captcha can be solved without resorting to third-party services. When you click the "Captcha Audio" button, a GET request is sent to the endpoint https://ipindiaservices.gov.in/PublicSearch/Captcha/CaptchaAudio. The response is a JSON object such as {"CaptchaImageText":"hnnxd"}, which you can read from Selenium through the Chrome DevTools Protocol using the Network.getResponseBody method, or you can use the requests library instead.
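To make the log-filtering step concrete, here is a minimal sketch that needs no browser. It assumes each entry has the shape returned by `driver.get_log('performance')`: a dict whose "message" field is a JSON string wrapping {"message": {"method": ..., "params": ...}}. The helper names `find_captcha_request_ids` and `parse_captcha_text` are hypothetical. Matching on `params.response.url` is a narrower check than testing `str(params.values())`, which is what the solution below does.

```python
import json


def find_captcha_request_ids(performance_logs: list[dict]) -> list[str]:
    """Return requestIds of Network.responseReceived events whose response URL mentions CaptchaAudio."""
    request_ids = []
    for entry in performance_logs:
        # the "message" field is itself a JSON string; the CDP event sits under its "message" key
        event = json.loads(entry["message"])["message"]
        if event.get("method") != "Network.responseReceived":
            continue
        params = event.get("params", {})
        url = params.get("response", {}).get("url", "")
        if "CaptchaAudio" in url:
            request_ids.append(params["requestId"])
    return request_ids


def parse_captcha_text(response_body: str) -> str:
    """Extract the captcha string from a body such as '{"CaptchaImageText":"hnnxd"}'."""
    return json.loads(response_body)["CaptchaImageText"]


# synthetic log entry for illustration; real entries carry many more fields
sample_logs = [
    {"level": "INFO", "timestamp": 0, "message": json.dumps({
        "message": {
            "method": "Network.responseReceived",
            "params": {
                "requestId": "1234.5",
                "response": {"url": "https://ipindiaservices.gov.in/PublicSearch/Captcha/CaptchaAudio"},
            },
        }
    })},
]
print(find_captcha_request_ids(sample_logs))               # ['1234.5']
print(parse_captcha_text('{"CaptchaImageText":"hnnxd"}'))  # hnnxd
```

With a live driver, each request id would then go to `driver.execute_cdp_cmd('Network.getResponseBody', {'requestId': rid})['body']`, and that body goes to `parse_captcha_text`.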

To save the data to a CSV file, you can use, for example, the csv module from the standard library.
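As a quick illustration of why the csv module is preferable to joining strings by hand, this sketch writes one row to an in-memory buffer. `csv.writer` quotes any field that contains a comma, so a title like "COMMUNICATION METHOD, APPARATUS AND SYSTEM" stays a single column. The header row and the `write_rows` helper are hypothetical additions; the script below writes rows without a header.

```python
import csv
import io

# hypothetical header; the script in this answer does not write one
HEADER = ["Application Number", "Title", "Application Date", "Status"]


def write_rows(stream, rows, with_header=False):
    """Write (number, title, date, status) tuples as CSV; fields containing commas get quoted."""
    writer = csv.writer(stream, lineterminator="\n")
    if with_header:
        writer.writerow(HEADER)
    writer.writerows(rows)


buffer = io.StringIO()
write_rows(buffer, [
    ("202247057932", "COMMUNICATION METHOD, APPARATUS AND SYSTEM", "10/10/2022", "Published"),
], with_header=True)
print(buffer.getvalue())
```

When writing to a real file, open it with `newline=''`, as the csv module documentation recommends.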

Here is one possible solution:

import re

import csv

import json

from time import sleep

from typing import Generator

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver import DesiredCapabilities

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.support.ui import WebDriverWait

from selenium.webdriver.chrome.webdriver import WebDriver

from selenium.webdriver.support import expected_conditions as EC

def get_captcha_text(log: dict, driver: WebDriver) -> dict:

log = json.loads(log["message"])["message"]

if all([

"Network.responseReceived" in log["method"],

"params" in log.keys(),

'CaptchaAudio' in str(log["params"].values())

]):

return driver.execute_cdp_cmd('Network.getResponseBody', {'requestId': log["params"]["requestId"]})

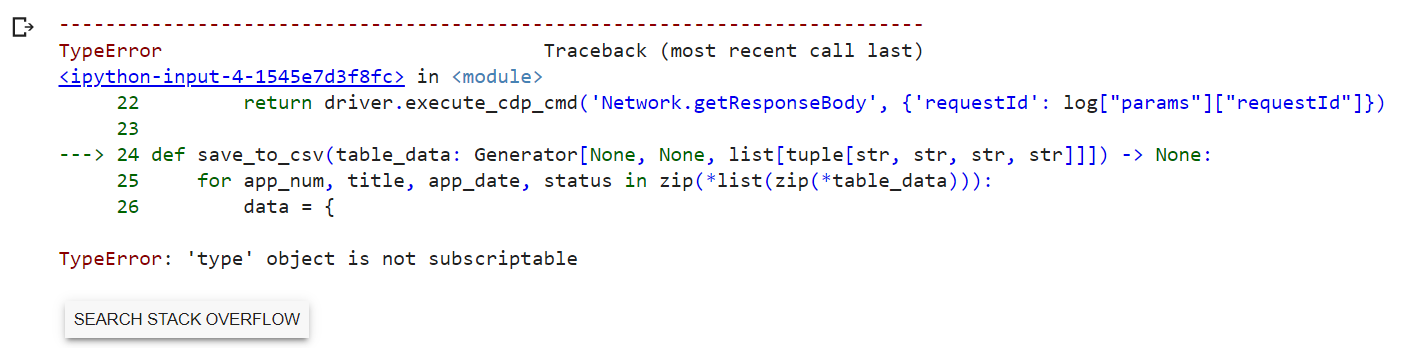

def save_to_csv(table_data: Generator[None, None, list[tuple[str, str, str, str]]]) -> None:

for app_num, title, app_date, status in zip(*list(zip(*table_data))):

data = {

'Application Number': app_num,

'Title': title,

'Application Date': app_date,

'Status': status

}

with open(file='ipindiaservices.csv', mode='a', encoding="utf-8") as f:

writer = csv.writer(f, lineterminator='\n')

writer.writerow([data['Application Number'], data['Title'], data['Application Date'], data['Status']])

def start_from_page(page_number: int, driver: WebDriver) -> None:

driver.execute_script(

f"""

document.querySelector('button.next').value = {page_number};

document.querySelector('button.next').click();

"""

)

def titles_validation(driver: WebDriver) -> None:

"""replace empty title name with '_'"""

driver.execute_script(

"""

let titles = document.querySelectorAll('#tableData>tbody>tr>td.title')

Array.from(titles).forEach((e) => {

if (!e.textContent.trim()) {

e.textContent = '_';

}

});

"""

)

options = webdriver.ChromeOptions()

options.add_experimental_option("excludeSwitches", ["enable-automation", "enable-logging"])

capabilities = DesiredCapabilities.CHROME

capabilities["goog:loggingPrefs"] = {"performance": "ALL"}

service = Service(executable_path="path/to/your/chromedriver.exe")

driver = webdriver.Chrome(service=service, options=options, desired_capabilities=capabilities)

wait = WebDriverWait(driver, 10)

# regular expression to search for data in a table

pattern = r'^([0-9A-Z\/\-,] ) (. )? ([0-9\/] ) (\w )'

driver.get('https://ipindiaservices.gov.in/PublicSearch/')

# sometimes an alert with an error message("") may appear, so a small pause is used

sleep(1)

driver.find_element(By.CSS_SELECTOR, 'img[title="Captcha Audio"]').click()

driver.find_element(By.ID, 'TextField6').send_keys('ltd')

# short pause is needed here to write the log, otherwise we will get an empty list

sleep(1)

logs = driver.get_log('performance')

# get request data that is generated when click on the button listen to the text of the captcha

responses = [get_captcha_text(log, driver) for log in logs if get_captcha_text(log, driver)]

# get captcha text

captcha_text = json.loads(responses[0]['body'])['CaptchaImageText']

# enter the captcha text and click "Search" button

driver.find_element(By.ID, 'CaptchaText').send_keys(captcha_text)

driver.find_element(By.CSS_SELECTOR, 'input[name="submit"]').click()

# the page where the search starts

start_from_page(1, driver)

while True:

# get current page number

current_page = wait.until(EC.presence_of_element_located((By.CSS_SELECTOR, 'span.Selected'))).text

# print the current page number to the console

print(f"Current page: {current_page}")

# get all fields of the table

table_web_elements = wait.until(EC.presence_of_all_elements_located((By.CSS_SELECTOR, '#tableData>tbody>tr')))

# check title name

titles_validation(driver)

# get all table data on current page

table_data = (re.findall(pattern, data.text)[0] for data in table_web_elements)

# save table data to csv

save_to_csv(table_data)

# if the current page is equal to the specified one, then stop the search and close the driver

if current_page == '3768':

break

# click next page

driver.find_element(By.CSS_SELECTOR, 'button.next').click()

driver.quit()
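The regular expression can be checked in isolation. This sketch applies it to row texts built from the sample output below, assuming (as the pattern itself does) that the cells of a row are joined by single spaces; the row strings are illustrative, not captured from the site. The last case shows a row whose empty title was replaced with '_'.

```python
import re

# same pattern as in the script, with its "+" quantifiers
pattern = r'^([0-9A-Z\/\-,]+) (.+)? ([0-9\/]+) (\w+)'

# illustrative row texts, assuming cells are separated by single spaces
rows = [
    "202247057786 NON-AQUEOUS ELECTROLYTE SECONDARY BATTERY 10/10/2022 Published",
    "202247057932 COMMUNICATION METHOD, APPARATUS AND SYSTEM 10/10/2022 Published",
    "202247000000 _ 10/10/2022 Published",
]
for row_text in rows:
    number, title, date, status = re.findall(pattern, row_text)[0]
    print(number, "|", title, "|", date, "|", status)
```

The greedy `(.+)` grabs as much as it can and then backs off until a date and a one-word status remain, which is why titles containing spaces and commas are captured whole.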

This solution processes about 100 pages in 280 seconds, so collecting all the data takes roughly 2.5-3 hours.
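The time estimate follows from the measured rate and the last page number used in the script (3768):

```python
SECONDS_PER_100_PAGES = 280  # measured rate quoted in this answer
TOTAL_PAGES = 3768           # last page number used in the script

total_seconds = SECONDS_PER_100_PAGES * TOTAL_PAGES / 100
print(f"{total_seconds:.0f} s = {total_seconds / 3600:.2f} h")  # 10550 s = 2.93 h
```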

Therefore, I added the ability to stop data collection at a specific page (by default, the last page):

```python
if current_page == '3768':
    break
```

and to start collecting data from a specific page (by default, the first page):

```python
start_from_page(1, driver)
```
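If you would rather not edit the script for every run, the two page numbers could come from the command line. A minimal sketch with argparse; the `--start-page`/`--stop-page` options and the `parse_page_range` helper are hypothetical and not part of the original script.

```python
import argparse


def parse_page_range(argv=None):
    """Hypothetical CLI options for the pages where scraping starts and stops."""
    parser = argparse.ArgumentParser(description="Scrape IP India public search results")
    parser.add_argument("--start-page", type=int, default=1, help="page to start from")
    parser.add_argument("--stop-page", type=int, default=3768, help="page to stop after")
    args = parser.parse_args(argv)
    if not 1 <= args.start_page <= args.stop_page:
        parser.error("expected 1 <= --start-page <= --stop-page")
    return args.start_page, args.stop_page


start_page, stop_page = parse_page_range(["--start-page", "120", "--stop-page", "200"])
print(start_page, stop_page)  # 120 200
```

In the script, the two values would replace the hard-coded numbers: `start_from_page(start_page, driver)` and `if current_page == str(stop_page): break` (the page number read from the page is a string).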

Output (ipindiaservices.csv):

```text
202247057786,NON-AQUEOUS ELECTROLYTE SECONDARY BATTERY,10/10/2022,Published
202247057932,"COMMUNICATION METHOD, APPARATUS AND SYSTEM",10/10/2022,Published
202247057855,POLYOLEFIN RESIN FILM,10/10/2022,Published
202247057853,CEMENT COMPOSITION AND CURED PRODUCT THEREOF,10/10/2022,Published
...
```
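Because the script appends to the same file across runs, you might want to sanity-check it afterwards. A minimal sketch that reads the header-less file back with `csv.reader`, here fed the sample rows above through an in-memory buffer; the `load_rows` helper and field names are hypothetical. Rows with a field count other than four are collected separately.

```python
import csv
import io

FIELDS = ["Application Number", "Title", "Application Date", "Status"]


def load_rows(stream):
    """Parse the header-less CSV into dicts; rows with the wrong field count are returned separately."""
    records, malformed = [], []
    for row in csv.reader(stream):
        if len(row) == len(FIELDS):
            records.append(dict(zip(FIELDS, row)))
        else:
            malformed.append(row)
    return records, malformed


sample = io.StringIO(
    "202247057786,NON-AQUEOUS ELECTROLYTE SECONDARY BATTERY,10/10/2022,Published\n"
    '202247057932,"COMMUNICATION METHOD, APPARATUS AND SYSTEM",10/10/2022,Published\n'
    "202247057855,POLYOLEFIN RESIN FILM,10/10/2022,Published\n"
    "202247057853,CEMENT COMPOSITION AND CURED PRODUCT THEREOF,10/10/2022,Published\n"
)
records, malformed = load_rows(sample)
print(records[1]["Title"])  # COMMUNICATION METHOD, APPARATUS AND SYSTEM
```

For the real file, pass `open('ipindiaservices.csv', encoding='utf-8', newline='')` instead of the buffer.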

Another answer:

You can use any captcha-solving site. On these sites the captchas are usually solved by people, so they can be slow, but they get the job done.

Sample website (I didn't receive any ads)

You can use Selenium to pull the information. It is enough to locate the elements on the site by their "id" attribute and fill them in. Library for reading/writing Excel in Python