I have an AKS cluster and a separate VM. The AKS cluster and the VM are in the same VNet (and the same subnet).

I deployed an echo server with the following YAML. I can curl the pod directly from the VM using its VNet IP, but when I try the same through the load balancer, nothing is returned. I'm not sure what I'm missing. Any help is appreciated.

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-server
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    spec:
      type: LoadBalancer
      ports:
        - port: 80
          protocol: TCP
          targetPort: 8080
      selector:
        app: echo-server
    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: echo-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: echo-server
      template:
        metadata:
          labels:
            app: echo-server
        spec:
          containers:
            - name: echo-server
              image: ealen/echo-server
              ports:
                - name: http
                  containerPort: 8080

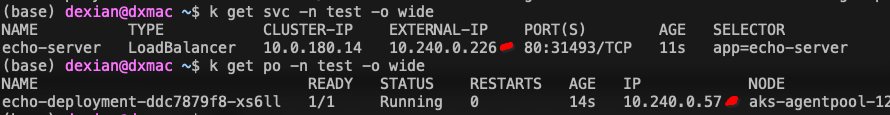

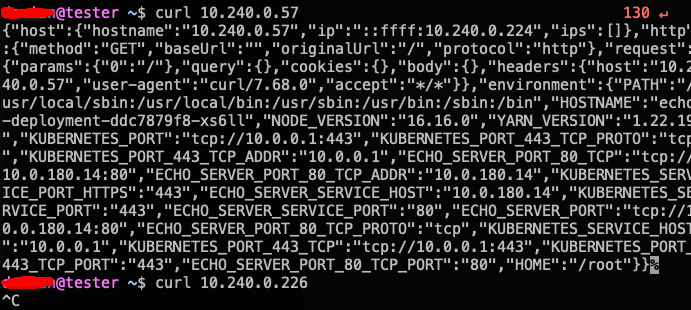

The following pictures demonstrate the situation

I expect that curling the load balancer's VNet IP returns the same response I get when curling the pod IP directly.

CodePudding user response:

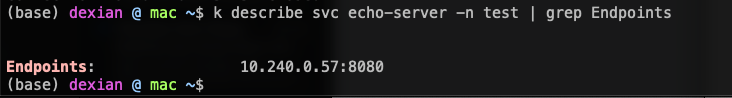

Have you checked whether the pod's IP is correctly mapped as an endpoint of the service? You can check it with:

    kubectl describe svc echo-server -n test | grep Endpoints

If it is not, please check the labels and selectors against your actual deployment (rather than the resources posted in the question).

If it is correctly mapped, are you sure that the VM you are using (_@tester) is in the correct subnet, which should also include the iLB IP, 10.240.0.226?

CodePudding user response:

Can you check your internal load balancer's health probe?

"For Kubernetes 1.24 the services of type LoadBalancer with appProtocol HTTP/HTTPS will switch to use HTTP/HTTPS as health probe protocol (while before v1.24.0 it uses TCP). And / will be used as the default health probe request path. If your service doesn’t respond 200 for /, please ensure you're setting the service annotation service.beta.kubernetes.io/port_{port}_health-probe_request-path or service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path (applies to all ports) with the correct request path to avoid service breakage." (ref: https://github.com/Azure/AKS/releases/tag/2022-09-11)

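As a rough sketch of what the quoted note describes, the annotation could be added to the Service from the question like this. The annotation name is taken from the quote above; the `/healthz` path is a hypothetical placeholder, not something confirmed for this echo server, and it only matters when the probe actually uses HTTP or HTTPS.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: echo-server
  annotations:
    service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    # Assumption: /healthz is a placeholder. Use a path your app answers with HTTP 200.
    service.beta.kubernetes.io/azure-load-balancer-health-probe-request-path: "/healthz"
spec:
  type: LoadBalancer
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: echo-server
```

If the application already returns 200 for `/`, the default probe path should work and the annotation is probably unnecessary.
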
CodePudding user response:

I found the solution. The only thing I needed to do was add the following to the Service declaration:

    externalTrafficPolicy: 'Local'

Full YAML below:

    apiVersion: v1
    kind: Service
    metadata:
      name: echo-server
      annotations:
        service.beta.kubernetes.io/azure-load-balancer-internal: "true"
    spec:
      type: LoadBalancer
      externalTrafficPolicy: 'Local'
      ports:
        - port: 80
          protocol: TCP
          targetPort: 80
      selector:
        app: echo-server

Previously, `externalTrafficPolicy` was set to 'Cluster'.

I just finished talking with Azure support, and this seems to be a specific bug (it happens with newer versions of AKS). Posting the related link here: https://github.com/kubernetes/ingress-nginx/issues/8501