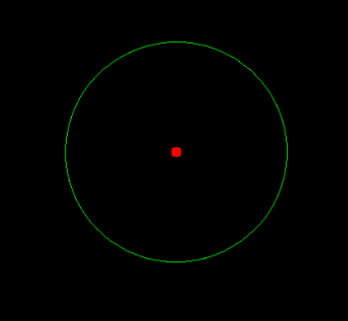

I'm trying to draw a horizontal line across a shape (an ellipse in this instance, with only the centroid and the ellipse boundary drawn on a black background), starting from the centroid of the shape. I started by checking every pixel along the +x and -x directions from the centroid, replacing each non-green pixel with a white pixel (essentially drawing a line pixel by pixel), and stopping as soon as I reach the first green pixel (the boundary). The code is given at the end.

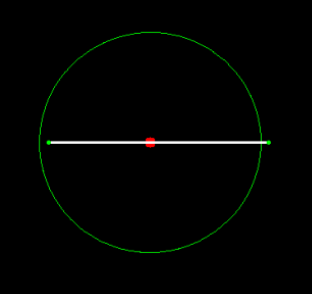

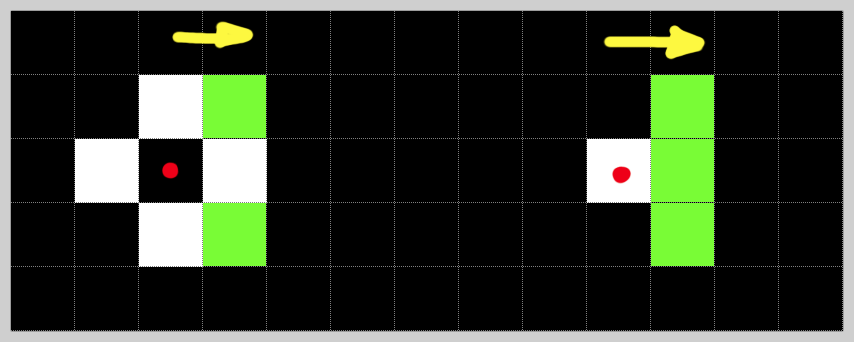

According to my logic, the line (created using points) should stop as soon as it reaches the boundary, i.e. the first green pixel along a particular axis, but the detected boundary is slightly offset. In the given image, you can clearly see that the rightmost and leftmost points found by checking every pixel are slightly off from the actual line.

Images are enlarged for better view

I checked my code multiple times, and I drew fresh ellipses every time to make sure there are no stray green pixels left on the image, but the offset is consistent on every try.

So my question is: how do I get rid of this offset and make my line meet the boundary exactly? Is this a visual glitch, or am I doing something wrong?

Note: I know there are rectFitting and MinAreaRect functions which I can use to draw perfect bounding boxes to get the points, but I want to know why this is happening. I'm not looking for the optimal method; I'm looking for the cause of this issue and its solution.

If you can suggest a better or more accurate title, it's much appreciated. I think I have explained everything for the time being.

Code:

import cv2

import numpy as np

import matplotlib.pyplot as plt

%matplotlib inline

#Function to plot images

def display_img(img,name):

    fig = plt.figure(figsize=(4,4))

    ax = fig.add_subplot(111)

    ax.imshow(img,cmap ="gray")

    plt.title(name)

#A black canvas

canvas = np.zeros((1600,1200,3),np.uint8)

#Value obtained after ellipse fitting an object

val = ((654, 664),(264, 266),80)

centroid = (val[0][0],val[0][1])

#Drawing the ellipse on the canvas(green)

ell = cv2.ellipse(canvas,val,(0,255,0),1)

centroid_ = cv2.circle(canvas,centroid,1,(255,0,0),10) #High thickness to see it visibly (Red)

display_img(canvas,"Canvas w/ ellipse and centroid")

#variables for centers

y_center = centroid[1]

#Variables which iterate over time

right_pt = centroid[0]

left_pt = centroid[0]

#Using while loops to find the distance from the center to the

#nearby first green pixel (leftmost and rightmost boundary)

while(np.any(canvas[right_pt,y_center] != [0,255,0])):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1

while(np.any(canvas[left_pt,y_center] != [0,255,0])):

    cv2.circle(canvas,(left_pt,y_center),1,(255,255,255),1)

    left_pt -= 1

#Drawing the obtained points

canvas = cv2.circle(canvas,(right_pt,y_center),1,(0,255,0),2)

canvas = cv2.circle(canvas,(left_pt,y_center),1,(0,255,0),2)

display_img(canvas,"Finale")

CodePudding user response:

There are a couple of problems, one hiding neatly behind another.

The first issue is evident in this snippet of code extracted from your script:

# ...

val = ((654, 664),(264, 266),80)

centroid = (val[0][0],val[0][1])

y_center = centroid[1]

right_pt = centroid[0]

left_pt = centroid[0]

while(np.any(canvas[right_pt,y_center] != [0,255,0])):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1

# ...

Notice that you use the X and Y coordinates of the point you want to process (represented by right_pt and y_center respectively) in the same order to do both of the following:

- index a numpy array: canvas[right_pt,y_center]
- specify point coordinates to an OpenCV function: (right_pt,y_center)

That is a problem, because each of those libraries expects a different order:

- numpy indexing is by default row-major, i.e. img[y,x]
- points and sizes in OpenCV are column-major, i.e. (x,y)
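To make the difference in ordering concrete, here is a minimal sketch using numpy only. The tiny array size and the names img, x, y and swapped_index_failed are made up for illustration and are not taken from your script:

```python
import numpy as np

# A small single-channel "image": 4 rows (height) by 6 columns (width).
img = np.zeros((4, 6), np.uint8)

# A point given the OpenCV way: x is the column, y is the row.
x, y = 5, 1

# numpy wants the row first, then the column.
img[y, x] = 255

# Using the OpenCV order as a numpy index addresses a different element,
# or (as here) one that does not exist at all.
swapped_index_failed = False
try:
    img[x, y]
except IndexError:
    swapped_index_failed = True

print(img.shape)             # (4, 6), i.e. (rows, columns)
print(img[y, x])             # 255
print(swapped_index_failed)  # True
```

On a large canvas such as yours the swapped index usually stays inside the array, so instead of an error you silently read a different pixel.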

In this particular case, the error is in the order of indexes for the numpy array canvas.

To fix it, just switch them around:

while(np.any(canvas[y_center,right_pt] != [0,255,0])):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1

# ditto for the second loop

Once you fix that, and run your script, it will crash with an error like

while(np.any(canvas[y_center,right_pt] != [0,255,0])):

IndexError: index 1200 is out of bounds for axis 1 with size 1200

Why didn't this happen before? Since the centroid was (654, 664) and you had the coordinates swapped, you were looking 10 rows away from where you were drawing.

The problem lies in the fact that you're drawing white circles

into the same image you're also searching for green pixels, combined

with perhaps mistaken interpretation of what the radius parameter of

cv2.circle does. I suppose the best way to show this with an image

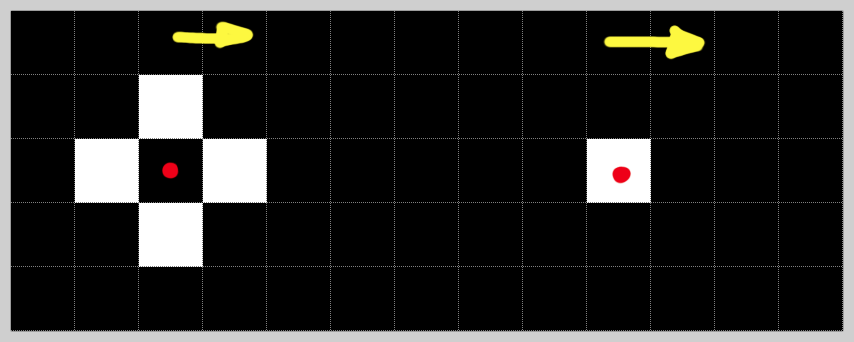

(representing 5 rows of 13 pixels):

The red dots are centers of respective circles, white squares are the pixels drawn, black squares are the pixels left untouched and the yellow arrows indicate the direction of iteration along the row. On the left side, you can see circle with radius 1, on the right radius 0.

Let's say we're approaching the green area we want to detect:

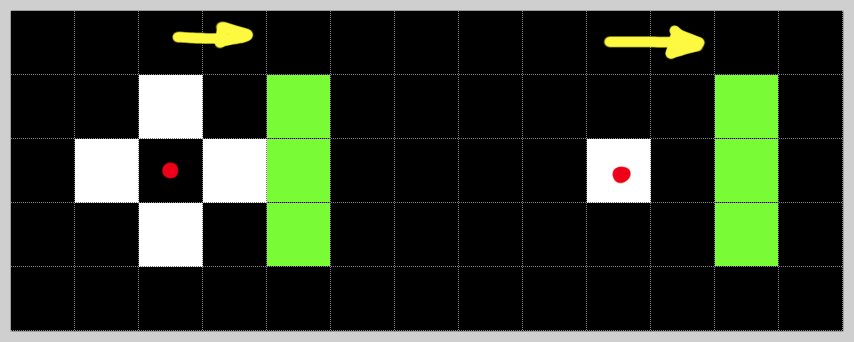

And make another iteration:

Oops, with radius of 1, we just changed the green pixel we're looking for to white. Hence we can never find any green pixels (with the exception of the first point tested, since at that point we haven't drawn anything yet, and only in the first loop), and the loop will run out of bounds of the image.
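The same effect can be reproduced on a single row without OpenCV. This is only a rough sketch: the helper march_right is hypothetical, and instead of calling cv2.circle it paints the pixels from x - radius to x + radius on the row, which is a simplified stand-in for what the circle does along the row of interest.

```python
import numpy as np

GREEN = (0, 255, 0)
WHITE = (255, 255, 255)

def march_right(row, start, radius):
    """Walk right from `start`, painting as we go, until a green pixel is hit.

    Returns the column of the green pixel, or None if we run off the row.
    """
    row = row.copy()  # work on a copy so the caller's row stays intact
    x = start
    while x < len(row):
        if np.all(row[x] == GREEN):
            return x
        # Simplified stand-in for cv2.circle: paint x-radius .. x+radius.
        lo = max(x - radius, 0)
        hi = min(x + radius, len(row) - 1)
        row[lo:hi + 1] = WHITE
        x += 1
    return None

# One row of 13 black pixels with a single green boundary pixel at column 10.
row = np.zeros((13, 3), np.uint8)
row[10] = GREEN

print(march_right(row, 5, radius=0))  # 10
print(march_right(row, 5, radius=1))  # None
```

With radius 1, the step at column 9 paints column 10 white before it is ever tested, so the search walks past the boundary. In your script there is no bounds check, which is why it ends in the IndexError instead of returning.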

There are several options on how to resolve this problem. The simplest one, if you're fine with a thinner line, is to change the radius to 0 in both calls to cv2.circle. Another possibility would be to cache a copy of "the row of interest", so that any drawing you do on canvas won't affect the search:

target_row = canvas[y_center].copy()

while(np.any(target_row[right_pt] != [0,255,0])):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1

or

target_row = canvas[y_center] != [0,255,0]

while(np.any(target_row[right_pt])):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1

or even better

target_row = np.any(canvas[y_center] != [0,255,0], axis=1)

while(target_row[right_pt]):

    cv2.circle(canvas,(right_pt,y_center),1,(255,255,255),1)

    right_pt += 1
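To see what these precomputed masks look like, here is a small sketch on a made-up 5x13 canvas (the sizes and the names per_channel and not_green are illustrative assumptions, not part of your script). The comparison yields one boolean per channel, and np.any with axis=1 collapses that to one boolean per pixel, so the loop condition becomes a plain lookup:

```python
import numpy as np

# Made-up canvas: 5 rows, 13 columns, 3 channels, one green pixel in row 2.
canvas = np.zeros((5, 13, 3), np.uint8)
y_center = 2
canvas[y_center, 10] = (0, 255, 0)

# One boolean per channel: True where that channel differs from green.
per_channel = canvas[y_center] != [0, 255, 0]

# One boolean per pixel: True where the pixel is not green.
not_green = np.any(per_channel, axis=1)

print(per_channel.shape)            # (13, 3)
print(not_green.shape)              # (13,)
print(np.flatnonzero(~not_green))   # [10]
```

Because both masks are new arrays computed before any drawing happens, later calls that draw on canvas cannot change them.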

Finally, you could skip the drawing in the loops, and just use a single function call to draw a line connecting the two endpoints you found.

target_row = np.any(canvas[y_center] != [0,255,0], axis=1)

while(target_row[right_pt]):

    right_pt += 1

while(target_row[left_pt]):

    left_pt -= 1

#Drawing the obtained points

cv2.line(canvas, (left_pt,y_center), (right_pt,y_center), (255,255,255), 2)

cv2.circle(canvas, (right_pt,y_center), 1, (0, 255, 0), 2)

cv2.circle(canvas, (left_pt,y_center), 1, (0, 255, 0), 2)

Bonus: Let's get rid of the explicit loops.

left_pt, right_pt = np.where(np.all(canvas[y_center] == [0,255,0], axis=1))[0]

This will (obviously) work only if there are exactly two matching pixels on the row of interest. However, it is trivial to extend this to find the first one from the ellipse's center in each direction (you get an array of all X coordinates/columns that contain a green pixel for that row).
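One possible way to do that extension is sketched below. The helper nearest_green_columns and its parameters are hypothetical names introduced for illustration; it assumes row is a (width, 3) array such as canvas[y_center] and returns None for a side that has no green pixel.

```python
import numpy as np

def nearest_green_columns(row, x_center, color=(0, 255, 0)):
    """Return the closest matching column on each side of x_center.

    `row` is a (width, 3) array of pixels. A side with no match gives None.
    """
    # Sorted column indices of every pixel that matches `color` exactly.
    cols = np.flatnonzero(np.all(row == color, axis=1))
    left = cols[cols <= x_center]
    right = cols[cols >= x_center]
    left_pt = int(left[-1]) if left.size else None
    right_pt = int(right[0]) if right.size else None
    return left_pt, right_pt

# Made-up row: a boundary that is several pixels thick on both sides.
row = np.zeros((20, 3), np.uint8)
row[[2, 3, 15, 16, 17]] = (0, 255, 0)

print(nearest_green_columns(row, 9))  # (3, 15)
```

Since np.flatnonzero returns the indices in ascending order, the last entry at or below the center and the first entry at or above it are the nearest boundary pixels.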

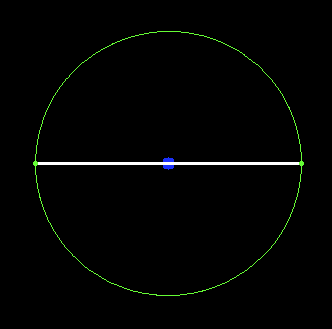

Cropped output (generated by cv2.imshow) of that implementation can be seen in the following image (the centroid is blue, since you used (255,0,0) to draw it, and OpenCV uses BGR order by default):