I'm trying to transform a CSV file with year, lon, lat and pressure columns into a 3-dimensional netCDF variable pressure(time, lat, lon).

However, my data contain repeated coordinate values, as shown below:

```
year,lon,lat,pressure
1/1/00,79.4939,34.4713,11981569640
1/1/01,79.4939,34.4713,11870476671
1/1/02,79.4939,34.4713,11858633008
1/1/00,77.9513,35.5452,11254617090
1/1/01,77.9513,35.5452,11267424230
1/1/02,77.9513,35.5452,11297377976
1/1/00,77.9295,35.5188,1031160490
```

Each (year, lon, lat) combination has exactly one pressure value.
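A quick way to confirm that each (year, lon, lat) combination occurs only once, and to see how many distinct values each would-be dimension has, is sketched below. It assumes the column names shown above and inlines the sample rows through `io.StringIO` so it runs on its own; for the real file you would pass its path to `pd.read_csv` instead.

```python
import io

import pandas as pd

# The sample rows from the question, inlined so the snippet is self-contained.
SAMPLE = """year,lon,lat,pressure
1/1/00,79.4939,34.4713,11981569640
1/1/01,79.4939,34.4713,11870476671
1/1/02,79.4939,34.4713,11858633008
1/1/00,77.9513,35.5452,11254617090
1/1/01,77.9513,35.5452,11267424230
1/1/02,77.9513,35.5452,11297377976
1/1/00,77.9295,35.5188,1031160490
"""

df = pd.read_csv(io.StringIO(SAMPLE))

# True if any (year, lon, lat) combination appears more than once.
has_duplicates = df.duplicated(subset=["year", "lon", "lat"]).any()

# Number of distinct values along each would-be dimension.
n_unique = df[["year", "lon", "lat"]].nunique()

print(has_duplicates)
print(n_unique.to_dict())
```

On the real file, the `lon` and `lat` counts are what decide how large a dense (time, lat, lon) cube would be.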

My first attempt was a straightforward conversion:

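```python
import pandas as pd
import xarray as xr

csv_file = '.csv'

df = pd.read_csv(csv_file)
df = df.set_index(["year", "lon", "lat"])

ds = df.to_xarray()        # not "xr = ...": that would shadow the xarray module
ds.to_netcdf('netcdf.nc')  # writes the file; the return value is None
```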

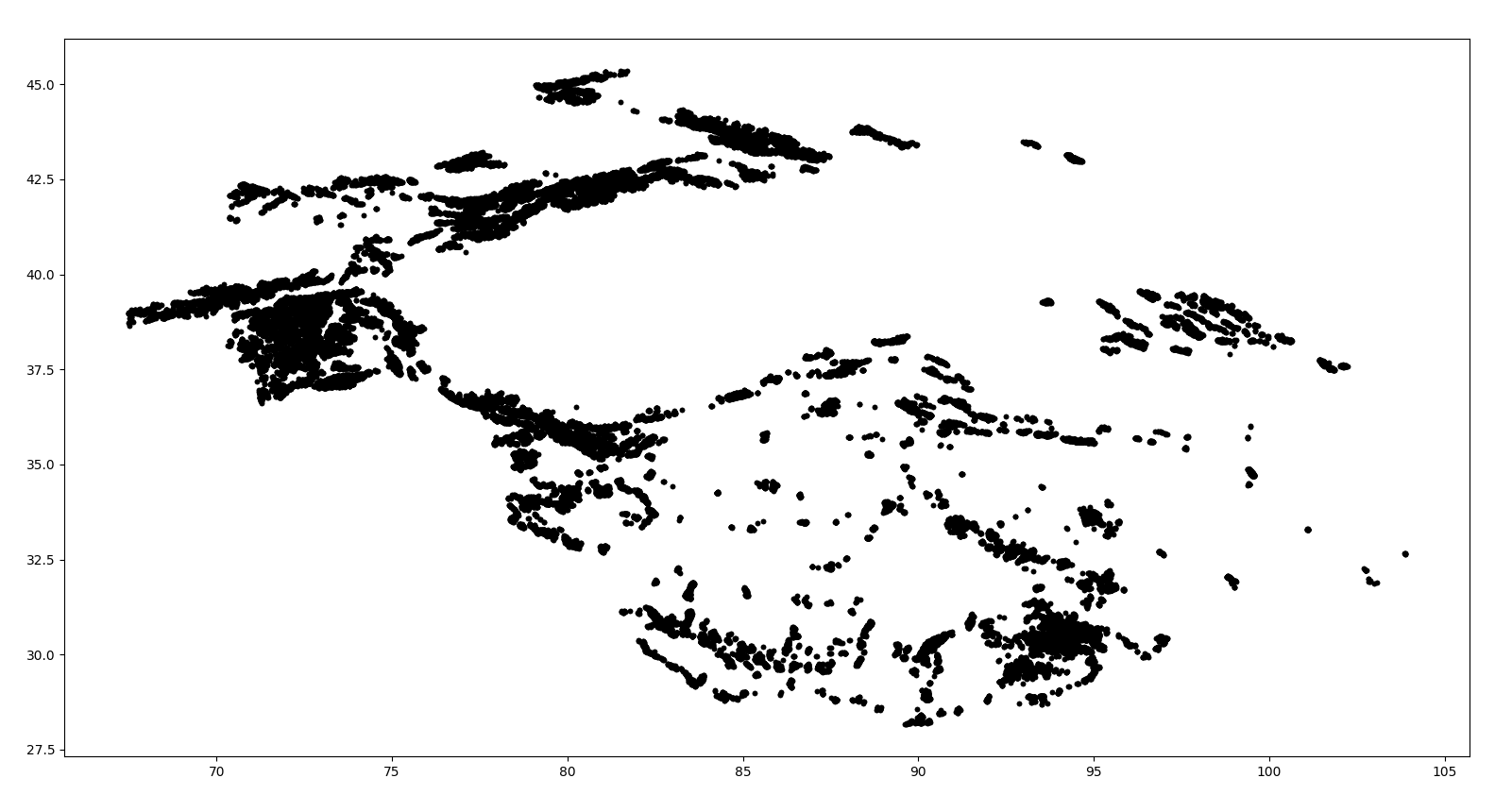

So, to put the data on a regular grid you would have to interpolate. However, the point density is very high in some regions and rather low in others, so choosing a regular grid with a very small step is not wise: there are more than ~40,000 unique longitude values and ~30,000 unique latitude values. Putting the data on such a grid would basically mean a 40k × 30k array for every time step.
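The direct `to_xarray()` attempt runs into exactly this: each index level becomes its own dimension, and xarray fills the full Cartesian product of the unique years, longitudes and latitudes, leaving every combination without an observation as NaN. A small sketch on the sample rows (7 observations, 3 unique values per level) makes this visible. It needs pandas with xarray installed; the size estimate at the end simply plugs in the approximate counts quoted above and assumes 8-byte floats (the dtype the integer pressure column is upcast to once NaNs appear).

```python
import io

import pandas as pd

# The sample rows from the question.
SAMPLE = """year,lon,lat,pressure
1/1/00,79.4939,34.4713,11981569640
1/1/01,79.4939,34.4713,11870476671
1/1/02,79.4939,34.4713,11858633008
1/1/00,77.9513,35.5452,11254617090
1/1/01,77.9513,35.5452,11267424230
1/1/02,77.9513,35.5452,11297377976
1/1/00,77.9295,35.5188,1031160490
"""

df = pd.read_csv(io.StringIO(SAMPLE))

# Same call chain as the first attempt: each index level becomes a dimension
# spanning all of its unique values, so missing combinations become NaN.
ds = df.set_index(["year", "lon", "lat"]).to_xarray()

total_cells = ds["pressure"].size                  # 3 years * 3 lon * 3 lat
filled_cells = int(ds["pressure"].count().item())  # cells holding an observation
print(ds["pressure"].shape, total_cells, filled_cells)

# Rough size of ONE time step on the full grid of the real file,
# using the approximate unique-value counts and 8-byte floats.
n_lon, n_lat = 40_000, 30_000
bytes_per_step = n_lon * n_lat * 8
print(f"{bytes_per_step / 1e9:.1f} GB per time step")
```

Even on this tiny sample only 7 of 27 cells are filled, and a single time step of the full grid would take about 9.6 GB, so the dense layout does not scale.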

I would suggest simply writing a netCDF file that contains all the points (irregularly spaced) and using that dataset for further analysis.
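One possible refinement of that idea, which the answer's code does not do: since every location in the sample repeats over several years, the points can be grouped into a hypothetical `station` dimension, giving a `pressure(time, station)` array with `lon` and `lat` stored as per-station coordinates. This is a sketch that assumes the dates are in month/day/two-digit-year form (`%m/%d/%y`), as in the sample.

```python
import io

import pandas as pd

# The sample rows from the question.
SAMPLE = """year,lon,lat,pressure
1/1/00,79.4939,34.4713,11981569640
1/1/01,79.4939,34.4713,11870476671
1/1/02,79.4939,34.4713,11858633008
1/1/00,77.9513,35.5452,11254617090
1/1/01,77.9513,35.5452,11267424230
1/1/02,77.9513,35.5452,11297377976
1/1/00,77.9295,35.5188,1031160490
"""

df = pd.read_csv(io.StringIO(SAMPLE))

# Parse the year strings ("1/1/00") into real datetimes (assumed format).
df["time"] = pd.to_datetime(df["year"], format="%m/%d/%y")

# Give every distinct (lon, lat) pair an integer id; "station" is a
# hypothetical dimension name chosen for this sketch.
df["station"] = df.groupby(["lon", "lat"]).ngroup()

# One pressure array over (time, station) instead of (time, lat, lon).
ds = df.set_index(["time", "station"])[["pressure"]].to_xarray()

# Attach each station's position as 1-D coordinates along "station".
positions = df.groupby("station")[["lon", "lat"]].first()
ds = ds.assign_coords(
    lon=("station", positions["lon"].to_numpy()),
    lat=("station", positions["lat"].to_numpy()),
)

print(ds)
```

The array size then grows with the number of distinct locations rather than with (unique lon × unique lat). This loosely resembles the time-series-at-stations layout from the CF conventions for discrete sampling geometries, but the sketch adds none of the CF attributes.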

Here is some code that turns the input xlsx file into netCDF (for the CSV from the question, use `pd.read_csv` instead of `pd.read_excel`):

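```python
#!/usr/bin/env ipython
import pandas as pd
import xarray as xr

# -----------------
df = pd.read_excel('13.xlsx')
df.columns = ['date', 'lon', 'lat', 'pres']

# Force coordinates and pressure to numbers; unparseable cells become NaN.
for cval in ['lon', 'lat', 'pres']:
    df[cval] = pd.to_numeric(df[cval], errors='coerce')

# Parse dates separately: pd.to_numeric would turn strings like "1/1/00" into NaN.
df['date'] = pd.to_datetime(df['date'], errors='coerce')

# --------------------------------------
# Each row becomes one entry along a single "index" dimension (irregular points).
ddf = xr.Dataset.from_dataframe(df)
ddf.to_netcdf('simple_netcdf.nc')
```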
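As an example of the further analysis mentioned above, the sketch below rebuilds the same one-row-per-point layout as the code above (with its renamed columns `date`, `lon`, `lat`, `pres`) from the question's sample rows, keeps only the points inside a lon/lat box, and averages pressure per date. The box bounds are arbitrary illustrative values, and the file round-trip is skipped so the snippet needs only pandas and xarray.

```python
import io

import pandas as pd
import xarray as xr

# The sample rows from the question.
SAMPLE = """year,lon,lat,pressure
1/1/00,79.4939,34.4713,11981569640
1/1/01,79.4939,34.4713,11870476671
1/1/02,79.4939,34.4713,11858633008
1/1/00,77.9513,35.5452,11254617090
1/1/01,77.9513,35.5452,11267424230
1/1/02,77.9513,35.5452,11297377976
1/1/00,77.9295,35.5188,1031160490
"""

df = pd.read_csv(io.StringIO(SAMPLE))
df.columns = ["date", "lon", "lat", "pres"]
df["date"] = pd.to_datetime(df["date"], format="%m/%d/%y")

# One "index" dimension with one entry per row, as in the code above.
ds = xr.Dataset.from_dataframe(df)

# Boolean mask over the points; the bounds are arbitrary example values.
in_box = (ds["lon"] < 78.0) & (ds["lat"] > 35.0)
subset = ds.where(in_box, drop=True)

# Mean pressure per date over the points that fall inside the box.
mean_by_date = subset["pres"].groupby(subset["date"]).mean()
print(mean_by_date)
```

For the saved file, `xr.open_dataset('simple_netcdf.nc')` would give the same kind of Dataset to start from.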