I am trying to display the game objects or prefabs generated by Unity on top of all textures.

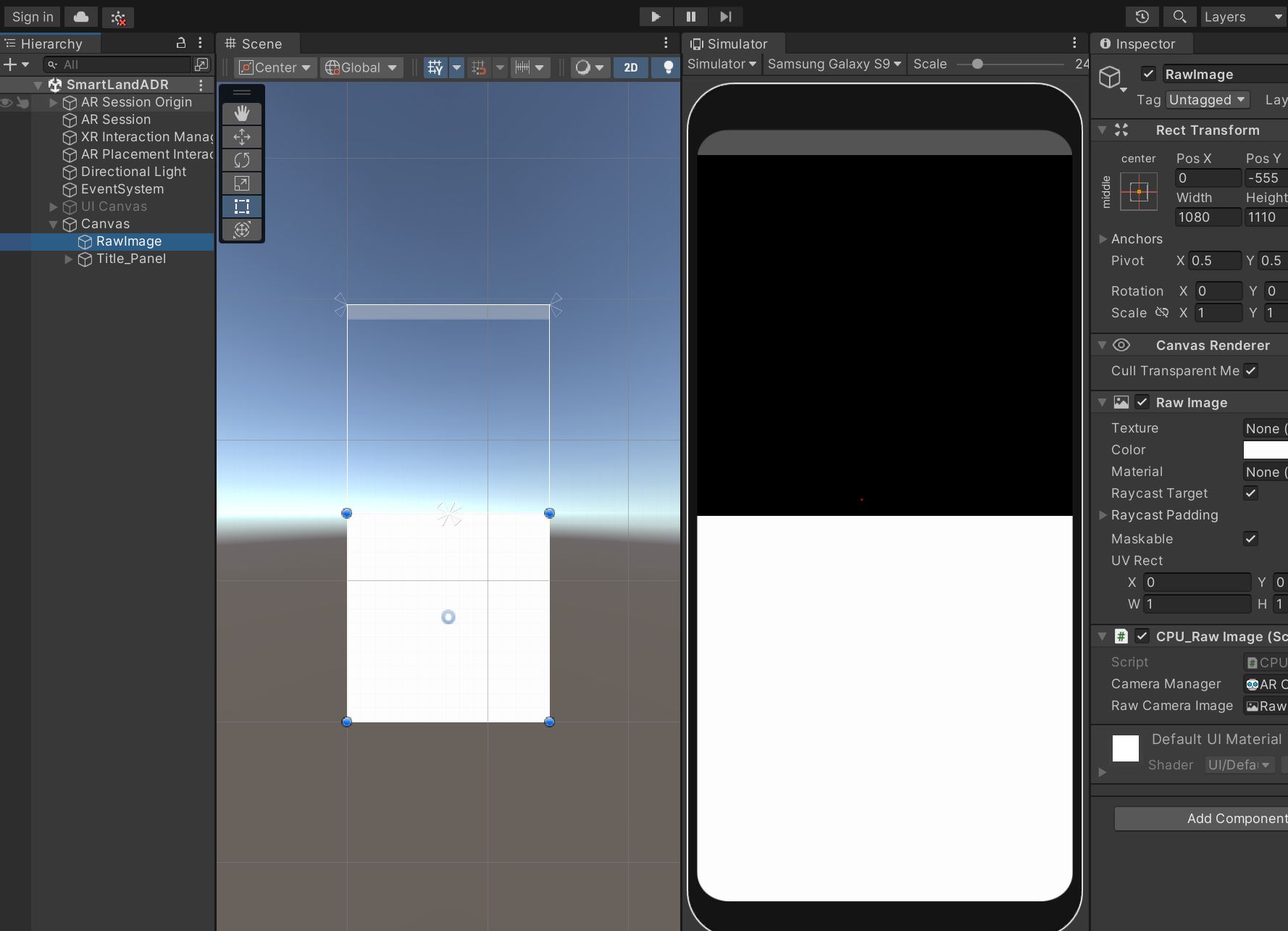

In detail: At the moment I have created an AR application for smartphones and inserted a RawImage into it:

A script CPU_RawImage.cs takes the live image from the camera and transfers it to this RawImage:

As you can see in the screenshot, it is unfortunately not possible to transfer the created virtual objects (in this case the house) to the RawImage.

Therefore my question: is there a way to change the render order for the created objects, or any other way to make these objects visible?

I have been trying to find a setting for the render order, but so far I have not been able to locate such an option for 3D applications. I was also advised to look into the render/graphics pipeline or shaders, but I have no experience with them.

CodePudding user response:

Add a standard Unity Camera as a child of your AR Camera. This should give the two cameras the same view (except for the camera background). Also set the new camera's clear flags so that its background is transparent. Then set the render target of that new camera to a RenderTexture (there should be plenty of guides online on how to do that). Finally, display that RenderTexture in your UI as you see fit.

Because the second camera only sees the virtual objects, the RenderTexture will also only contain those. If something looks skewed, check whether the FOV of the two cameras matches at runtime.
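The setup described above could also be done from a script instead of the Inspector. The following is only a minimal sketch, assuming the built-in render pipeline; the class name `OverlayCameraToRawImage` and the fields `arCamera` and `targetImage` are hypothetical names chosen for illustration, and the script is meant to be attached to the new child camera. Sizing the RenderTexture to the screen resolution is also an assumption, not something stated in this thread.

```csharp
using UnityEngine;
using UnityEngine.UI;

// Hypothetical helper: attach to the second camera that is a child of the AR camera.
[RequireComponent(typeof(Camera))]
public class OverlayCameraToRawImage : MonoBehaviour
{
    [SerializeField] private Camera arCamera;      // the parent AR camera (assign in the Inspector)
    [SerializeField] private RawImage targetImage; // the UI RawImage that should show the virtual objects

    private Camera overlayCamera;
    private RenderTexture overlayTexture;

    private void Start()
    {
        overlayCamera = GetComponent<Camera>();

        // Clear to a fully transparent color so only the virtual objects end up in the texture.
        overlayCamera.clearFlags = CameraClearFlags.SolidColor;
        overlayCamera.backgroundColor = new Color(0f, 0f, 0f, 0f);

        // Assumption: match the screen resolution; ARGB32 keeps an alpha channel.
        overlayTexture = new RenderTexture(Screen.width, Screen.height, 24, RenderTextureFormat.ARGB32);
        overlayCamera.targetTexture = overlayTexture;

        // Show the rendered virtual objects in the UI.
        targetImage.texture = overlayTexture;
    }

    private void LateUpdate()
    {
        // Keep the field of view in sync, since the AR camera's value may change at runtime.
        overlayCamera.fieldOfView = arCamera.fieldOfView;
    }

    private void OnDestroy()
    {
        // Detach and free the texture when the camera is destroyed.
        if (overlayTexture != null)
        {
            overlayCamera.targetTexture = null;
            overlayTexture.Release();
        }
    }
}
```

If the AR framework writes the projection matrix of the AR camera directly, copying `fieldOfView` alone may not be enough, and the two cameras could still disagree; that would be worth checking on the device.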

CodePudding user response:

I followed the instructions above. I created a second camera as a child of the AR camera and set its Target Texture to a RenderTexture.

In the UI I created a second RawImage and applied the RenderTexture to it (Unity_screenshot).

The result on the smartphone display looks like this: Screenshot_Display

As you can see, the virtual object is on top of the RawImage, but it is somehow pixelated and shows an offset between the top and bottom halves. Any suggestions for this problem?