I extracted data from an API using Airflow. The data is extracted from the API and saved on cloud storage in JSON format.

The next step is to insert the data into an SQL DB. I have a few questions:

- Should I do it on Airflow or using another ETL like AWS Glue/Azure Data factory?

- How to insert the data into the SQL DB? I google "how to insert data into SQL DB using python"?. I found a solution that loops all over JSON records and inserts the data 1 record at a time. It is not very efficient. Any other way I can do it?

- Any other recommendations and best practices on how to insert the JSON data into the SQL server?

I haven't decided on a specific DB so far, so feel free to pick the one you think fits best.

thank you!

CodePudding user response:

You can use Airflow just as a scheduler to run some python/bash scripts in defined time with some dependencies rules, but you can also take advantage of the operators and the hooks provided by Airflow community.

For the ETL part, Airflow isn't an ETL tool. If you need some ETL pipelines, you can run and manage them using Airlfow, but you need an ETL service/tool to create them (Spark, Athena, Glue, ...).

To insert data in the DB, you can create your own python/bash script and run it, or use the existing operators. You have some generic operators and hooks for postgress, MySQL and the different databases (MySQL, postgres, oracle, mssql), and there are some other optimized operators and hooks for each cloud service (AWS RDS, GCP Cloud SQL, GCP Spanner...), if you want to use one of the managed/serverless services, I recommend using its operators, and if you want to deploy your service on a VM or K8S cluster, you need to use the generic ones.

Airflow supports almost all the popular cloud services, so try to choose your cloud provider based on cost, performance, team knowledge and the other needs of your project, and you will surly find a good way to achieve your goal with Airlfow.

CodePudding user response:

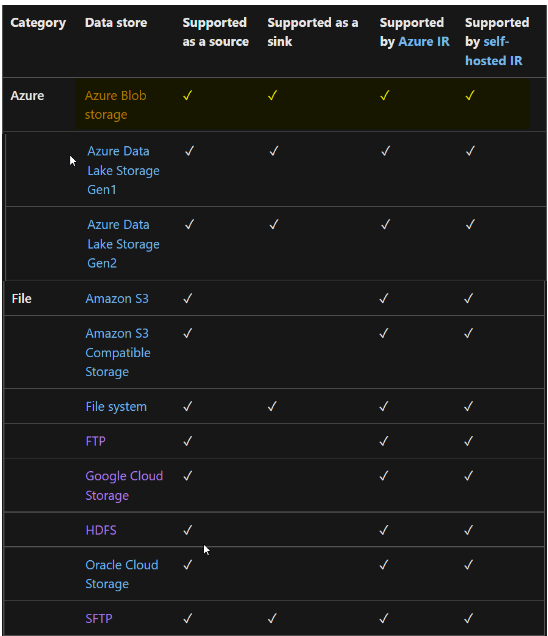

- You can use Azure Data Factory or Azure Synapse Analytics to move data in Json file to SQL server. Azure Data Factory supports 90 connectors as of now. (Refer MS doc on Connector overview - Azure Data Factory & Azure Synapse for more details about connectors that are supported by Data Factory).

Img:1 Some connectors which are supported by ADF.

Refer MS docs on pre-requisites and Required Permissions to connect Google cloud storage with ADF

Take source connector as Google Cloud storage in copy activity. Reference: Copy data from Google Cloud Storage - Azure Data Factory & Azure Synapse | Microsoft Learn

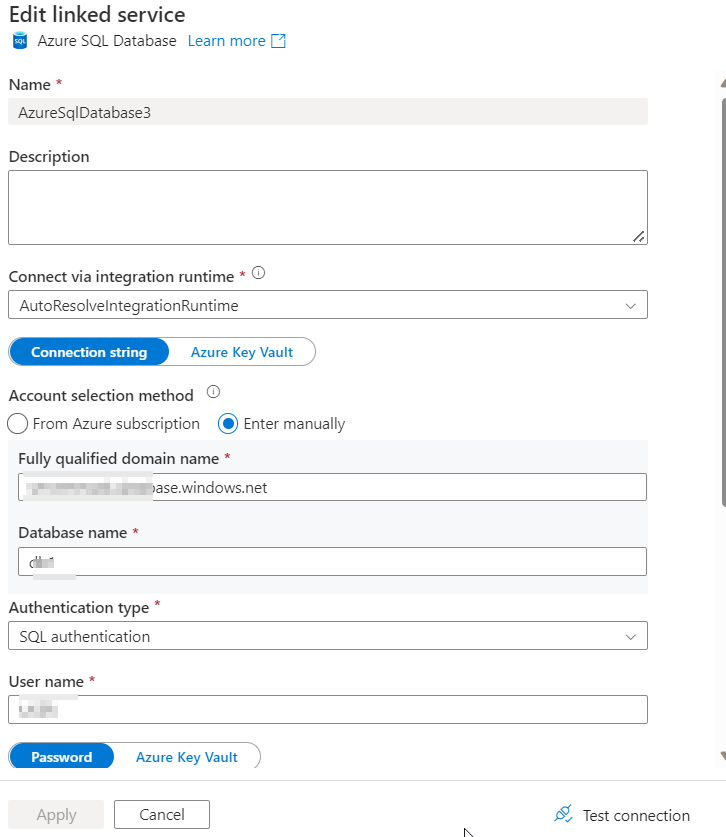

Take SQL DB connector for sink.

- ADF supports Auto create table option when there is no table created in Azure SQL database. Also, you can map the source and sink columns in mapping settings.