This is my attempt:

df['occupation']= df['occupation'].str.replace('?', '')

df.dropna(subset=['occupation'], inplace=True)

but it is not working, How do i remove all of the rows of the occupation column that i read from a csv file that contain a ? rather than an occupation

CodePudding user response:

If you're reading the csv with pd.read_csv(), you can pass na_values.

# to treat '?' as NaN in all columns:

pd.read_csv(fname, na_values='?')

# to treat '?' as NaN in just the occupation column:

pd.read_csv(fname, na_values={'occupation': '?'})

Then, you can dropna or fillna('') on that column as you see fit.

CodePudding user response:

Clean up the white space and use an 'unselect' filter:

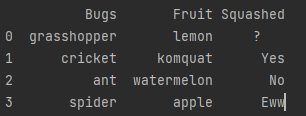

import pandas as pd

bugs = ['grasshopper','cricket','ant','spider']

fruit = ['lemon','komquat','watermelon','apple']

squashed = [' ? ','Yes','No','Eww']

df = pd.DataFrame(list(zip(bugs,fruit,squashed)), columns = ['Bugs','Fruit','Squashed'])

print(df.head())

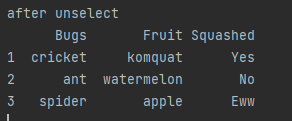

df = df[df['Squashed'].apply(lambda x: x.strip()) != '?']

print('after stripping white space and after unselect')

print(df.head())

Why

The dataframe method .dropna() won't detect blanks (ie '') but will look for Nan or NaT or None.

https://pandas.pydata.org/pandas-docs/stable/reference/api/pandas.DataFrame.dropna.html

However, using .replace() to set the value to missing won't work because .replace() requires the type to match and None doesn't match any type you'll have in a column already.

Better to clean up the white space (which is the simple case) using lambda on each entry to apply the string transformation.

CodePudding user response:

You can try this...

df = df[df.occupation != "?"]