I've been struggling with this for weeks, so I'm finally reaching out.

From what I understand, Azure DevOps can generate a start-to-finish pipeline YAML file that builds Docker images and pushes them to Azure Container Registry (ACR), then generates Kubernetes manifest files as artifacts and uses those manifests to deploy our multi-container application to Azure Kubernetes Service (AKS). Is that a bad understanding? Do I need to write my manifest files myself before using the pipeline? If so, is there a better way to generate them? So far I've tried writing them by hand, line by line, but I'm running into issues.

I've attached the auto-generated YAML file to this post, with personal/private details hidden. The first stage (building the services with Docker Compose and pushing the images to ACR) runs without issue, but the deploy stage fails every time, for various reasons. I'm guessing it's because my manifest files are incorrectly written.

```yaml
# Starter pipeline
# Start with a minimal pipeline that you can customize to build and deploy your code.
# Add steps that build, run tests, deploy, and more:
# https://aka.ms/yaml

trigger:
- master

resources:
- repo: self

variables:

  # Container registry service connection established during pipeline creation
  dockerRegistryServiceConnection: 'HIDDEN'
  imageRepository: 'dec7'
  containerRegistry: 'HIDDEN'
  dockerfilePath: '**/Dockerfile'
  buildContext: 1.x/trunk/src/
  tag: '$(Build.BuildId)'
  imagePullSecret: 'HIDDEN'

  # Agent VM image name
  vmImageName: 'ubuntu-20.04'

  # Name of the new namespace being created to deploy the PR changes.
  k8sNamespaceForPR: 'review-app-$(System.PullRequest.PullRequestId)'

stages:
- stage: Build
  displayName: Build stage
  jobs:
  - job: Build
    displayName: Build
    pool:
      vmImage: $(vmImageName)
    steps:
    - task: DockerCompose@0
      displayName: 'Build services'
      inputs:
        containerregistrytype: 'Azure Container Registry'
        azureSubscription: HIDDEN
        azureContainerRegistry: 'HIDDEN'
        dockerComposeFile: '1.x/trunk/src/docker-compose.yml'
        dockerComposeFileArgs: 'DOCKER_BUILD_SOURCE='
        action: 'Build services'
        additionalImageTags: '$(Build.BuildId)'

    - task: DockerCompose@0
      displayName: 'Push services'
      inputs:
        containerregistrytype: 'Azure Container Registry'
        azureSubscription: HIDDEN
        azureContainerRegistry: 'HIDDEN'
        dockerComposeFile: '1.x/trunk/src/docker-compose.yml'
        dockerComposeFileArgs: 'DOCKER_BUILD_SOURCE='
        action: 'Push services'
        additionalImageTags: '$(Build.BuildId)'

    - task: DockerCompose@0
      displayName: 'Lock services'
      inputs:
        containerregistrytype: 'Azure Container Registry'
        azureSubscription: HIDDEN
        azureContainerRegistry: 'HIDDEN'
        dockerComposeFile: '1.x/trunk/src/docker-compose.yml'
        dockerComposeFileArgs: 'DOCKER_BUILD_SOURCE='
        action: 'Lock services'
        outputDockerComposeFile: '$(Build.StagingDirectory)/docker-compose.yml'

    - upload: manifests
      artifact: manifests

- stage: Deploy
  displayName: Deploy stage
  dependsOn: Build

  jobs:
  - deployment: Deploy
    condition: and(succeeded(), not(startsWith(variables['Build.SourceBranch'], 'refs/pull/')))
    displayName: Deploy
    pool:
      vmImage: $(vmImageName)
    environment: HIDDEN
    strategy:
      runOnce:
        deploy:
          steps:
          - checkout: self
          - task: KubernetesManifest@0
            displayName: Create imagePullSecret
            inputs:
              action: 'createSecret'
              kubernetesServiceConnection: 'AKSServiceConnectionDec6'
              secretType: 'dockerRegistry'
              secretName: '$(imagePullSecret)'
              dockerRegistryEndpoint: '$(dockerRegistryServiceConnection)'

          - task: KubernetesManifest@0
            displayName: Deploy to Kubernetes cluster
            inputs:
              action: 'deploy'
              kubernetesServiceConnection: 'AKSServiceConnectionDec6'
              manifests: |
                $(Pipeline.Workspace)/manifests/deployment.yml
                $(Pipeline.Workspace)/manifests/service.yml
              containers: '$(containerRegistry)/$(imageRepository):$(tag)'
              imagePullSecrets: '$(imagePullSecret)'

  - deployment: DeployPullRequest
    displayName: Deploy Pull request
    condition: and(succeeded(), startsWith(variables['Build.SourceBranch'], 'refs/pull/'))
    pool:
      vmImage: $(vmImageName)

    environment: 'HIDDEN$(k8sNamespaceForPR)'
    strategy:
      runOnce:
        deploy:
          steps:
          - reviewApp: HIDDEN

          - task: Kubernetes@1
            displayName: 'Create a new namespace for the pull request'
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceEndpoint: 'AKSServiceConnectionDec6'
              command: 'apply'
              useConfigurationFile: true
              secretType: 'dockerRegistry'
              containerRegistryType: 'Azure Container Registry'

          - task: KubernetesManifest@0
            displayName: Create imagePullSecret
            inputs:
              action: createSecret
              secretName: $(imagePullSecret)
              namespace: $(k8sNamespaceForPR)
              dockerRegistryEndpoint: $(dockerRegistryServiceConnection)

          - task: KubernetesManifest@0
            displayName: Deploy to the new namespace in the Kubernetes cluster
            inputs:
              action: 'deploy'
              kubernetesServiceConnection: 'AKSServiceConnectionDec6'
              namespace: '$(k8sNamespaceForPR)'
              manifests: |
                $(Pipeline.Workspace)/manifests/deployment.yml
                $(Pipeline.Workspace)/manifests/service.yml
              containers: '$(containerRegistry)/$(imageRepository):$(tag)'
              imagePullSecrets: '$(imagePullSecret)'

          - task: Kubernetes@1
            name: get
            displayName: 'Get services in the new namespace'
            continueOnError: true
            inputs:
              connectionType: 'Kubernetes Service Connection'
              kubernetesServiceEndpoint: 'AKSServiceConnectionDec6'
              namespace: '$(k8sNamespaceForPR)'
              command: 'get'
              arguments: 'svc'
              secretType: 'dockerRegistry'
              containerRegistryType: 'Azure Container Registry'
              outputFormat: 'jsonpath=''http://{.items[0].status.loadBalancer.ingress[0].ip}:{.items[0].spec.ports[0].port}'''

          # Getting the IP of the deployed service and writing it to a variable for posing comment
          - script: |
              url="$(get.KubectlOutput)"
              message="Your review app has been deployed"
              if [ ! -z "$url" -a "$url" != "http://:" ]
              then
                message="${message} and is available at $url.<br><br>[Learn More](https://aka.ms/testwithreviewapps) about how to test and provide feedback for the app."
              fi
              echo "##vso[task.setvariable variable=GITHUB_COMMENT]$message"
```

I've tried generating new pipelines from scratch using both the classic editor and the new editor Microsoft provides. The build stage fails because it can't find the working directory, which I fix by specifying the directory manually. However, once the pipeline reaches the deploy stage, I get the following error:

```
##[error]No manifest file(s) matching /home/vsts/work/1/manifests/deployment.yml,/home/vsts/work/1/manifests/service.yml was found.
```

This tells me that the pipeline isn't generating manifest files as I thought it was supposed to. So I wrote one myself, probably incorrectly, and it ran once but timed out. Now, after running the deploy stage with an altered manifest file, I get the following error:

```
error: deployment "v4deployment" exceeded its progress deadline
##[error]Error: error: deployment "v4deployment" exceeded its progress deadline
```

---

> Is that a bad understanding?

Yes. You still have to author your deployment manifests. The pipeline can apply the manifests to the cluster, but it's not going to generate anything for you.
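As a starting point, a minimal pair of manifests might look like the sketch below. It assumes a single service named `dec7` (the `imageRepository` value from the question) listening on port 80; the registry host `myregistry.azurecr.io`, the labels, and the port are hypothetical placeholders. A multi-container Compose app would typically need one Deployment and one Service per Compose service. The image is written without a tag on the assumption that the `KubernetesManifest` task's `containers` input substitutes the tagged image at deploy time.

```yaml
# deployment.yml: minimal sketch; name, labels, registry host, and port are hypothetical
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dec7
spec:
  replicas: 1
  selector:
    matchLabels:
      app: dec7            # must match the pod template labels below
  template:
    metadata:
      labels:
        app: dec7
    spec:
      containers:
        - name: dec7
          # Untagged on purpose: the pipeline's `containers` input is expected to
          # replace this with $(containerRegistry)/$(imageRepository):$(tag)
          image: myregistry.azurecr.io/dec7
          ports:
            - containerPort: 80
---
# service.yml: exposes the pods above; LoadBalancer gives the external IP
# that the review-app script in the question reads from status.loadBalancer
apiVersion: v1
kind: Service
metadata:
  name: dec7
spec:
  type: LoadBalancer
  selector:
    app: dec7
  ports:
    - port: 80
      targetPort: 80
```

The two documents are shown together, separated by `---`; save each part as its own file to match the `deployment.yml` and `service.yml` paths the pipeline expects.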

---

Assumption: your cluster is AKS.

> Is that a bad understanding?

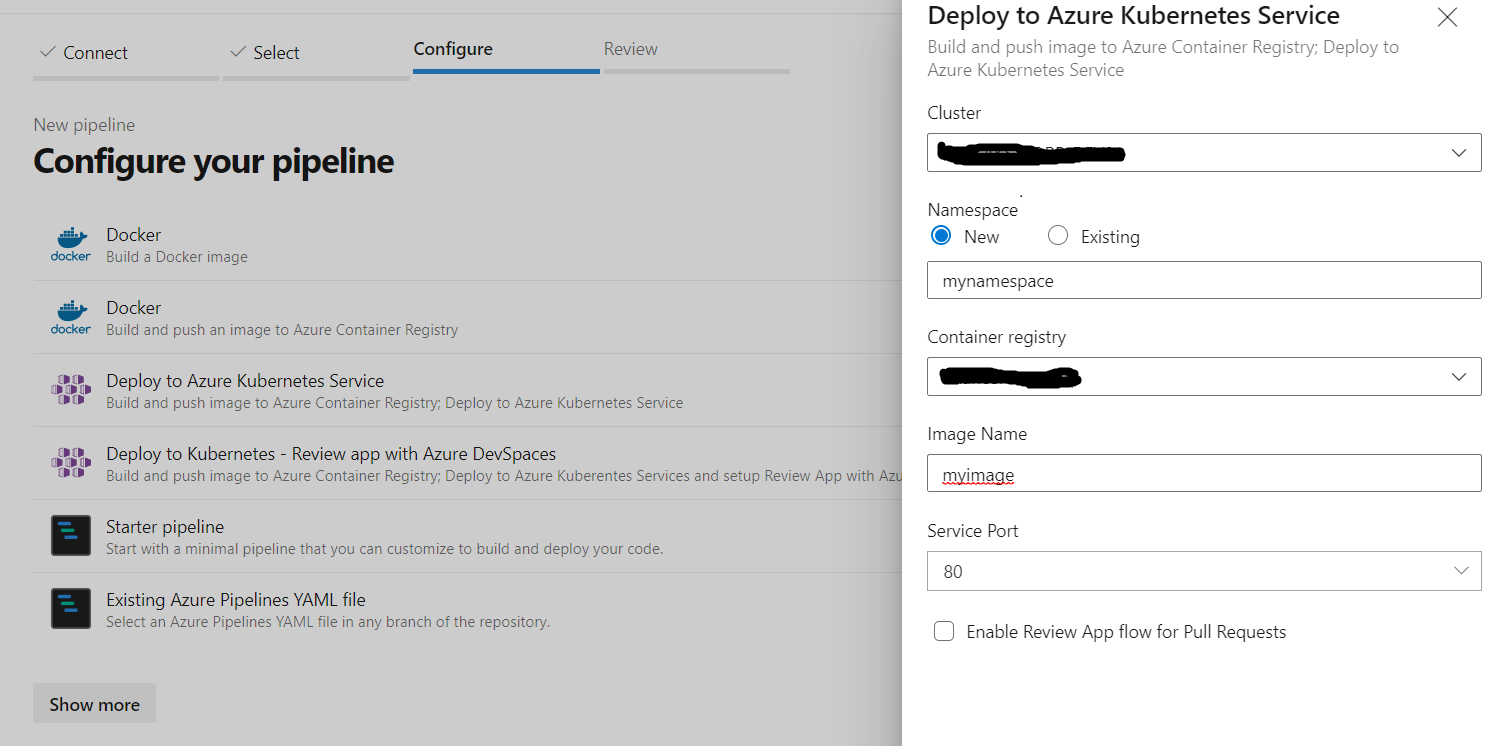

Nope, it's a correct understanding. When you create a new pipeline and select the "Deploy to Azure Kubernetes Service" option, it asks for your Azure subscription. Once you select all the options, it generates the pipeline YAML along with Kubernetes manifest files inside a `manifests` folder at the root of your repository. You can modify or update these manifest files as needed. I have removed some personal details from the screenshot.

```
##[error]No manifest file(s) matching /home/vsts/work/1/manifests/deployment.yml,/home/vsts/work/1/manifests/service.yml was found.
```

Check the path where the manifest files were generated inside your repo and provide the absolute path in your pipeline YAML file. For example, in our case the manifest files were under root --> manifests --> framework --> develop, so my YAML looked like this:

```yaml
- task: KubernetesManifest@0
  displayName: Deploy to Kubernetes cluster
  inputs:
    action: deploy
    manifests: |
      $(Pipeline.Workspace)/manifests/framework/develop/deployment.yml
      $(Pipeline.Workspace)/manifests/framework/develop/service.yml
    imagePullSecrets: |
      $(imagePullSecret)
    containers: |
      $(containerRegistry)/$(imageRepository):$(tag)
```
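The `$(Pipeline.Workspace)/manifests/...` paths resolve only if the repository's `manifests` folder was published as a pipeline artifact in the Build stage. A trimmed sketch of both halves, assuming the folder sits at the repository root and reusing the `framework/develop` layout above: in a `runOnce` deployment job the current run's artifacts are downloaded automatically to `$(Pipeline.Workspace)/<artifact name>`, so the explicit `download` step here only makes that location visible. Pools and other inputs are omitted.

```yaml
stages:
- stage: Build
  jobs:
  - job: Build
    steps:
    # Publish the repo's manifests folder (assumed at the repository root)
    # as a pipeline artifact named "manifests".
    - upload: manifests
      artifact: manifests

- stage: Deploy
  dependsOn: Build
  jobs:
  - deployment: Deploy
    environment: HIDDEN            # placeholder, as in the question
    strategy:
      runOnce:
        deploy:
          steps:
          # Downloads the artifact to $(Pipeline.Workspace)/manifests
          - download: current
            artifact: manifests
          - task: KubernetesManifest@0
            inputs:
              action: deploy
              kubernetesServiceConnection: 'AKSServiceConnectionDec6'
              manifests: |
                $(Pipeline.Workspace)/manifests/framework/develop/deployment.yml
                $(Pipeline.Workspace)/manifests/framework/develop/service.yml
```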

```
error: deployment "v4deployment" exceeded its progress deadline
##[error]Error: error: deployment "v4deployment" exceeded its progress deadline
```

This error indicates that your manifests were applied, but the deployment is still waiting for all application pods to be running, and the pods never became ready because of some error. To find that error, access your cluster (via kubeconfig or the dashboard) and check the namespace events, or check the pod events and logs directly. These two commands should give you enough evidence of why your pods are not healthy:

```bash
kubectl describe pod your-pod-name -n your-namespace
kubectl logs -f your-pod-name -n your-namespace
```
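If the pods are healthy but just slow to start, note that the deadline itself is part of the Deployment spec: `progressDeadlineSeconds` (600 seconds by default) controls how long Kubernetes waits for rollout progress before reporting this error, and a `readinessProbe` decides when a pod counts as ready. The excerpt below is a sketch only; the `v4` labels, the registry host, and the HTTP health check on `/` at port 80 are hypothetical. Raising the deadline helps only if the pods eventually become ready; it will not fix a crashing container or an image that cannot be pulled.

```yaml
# Deployment excerpt; labels, image, path, and port are hypothetical
apiVersion: apps/v1
kind: Deployment
metadata:
  name: v4deployment
spec:
  progressDeadlineSeconds: 900     # default is 600
  replicas: 1
  selector:
    matchLabels:
      app: v4
  template:
    metadata:
      labels:
        app: v4
    spec:
      containers:
        - name: v4
          image: myregistry.azurecr.io/dec7   # hypothetical; substituted by the pipeline
          ports:
            - containerPort: 80
          # Pod is marked ready only after this HTTP check succeeds
          readinessProbe:
            httpGet:
              path: /
              port: 80
            initialDelaySeconds: 10
            periodSeconds: 5
```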