I am facing a problem in Azure Databricks. In my notebook I am executing a simple write command with partitioning:

```python
# note: header=True is a CSV option; the Parquet writer ignores it
df.write.format('parquet').partitionBy("startYear").save(output_path, header=True)
```

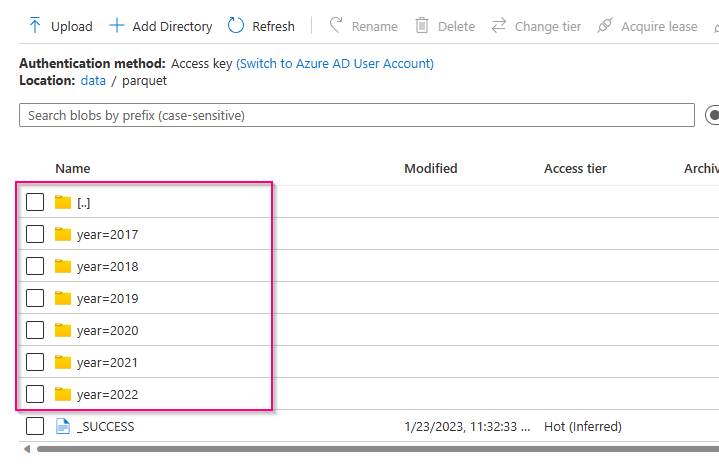

And I see something like this:

Can someone explain why Spark is creating these additional empty files for every partition, and how to disable it?

I have tried different write modes, different partitioning, and different Spark versions.

Answer:

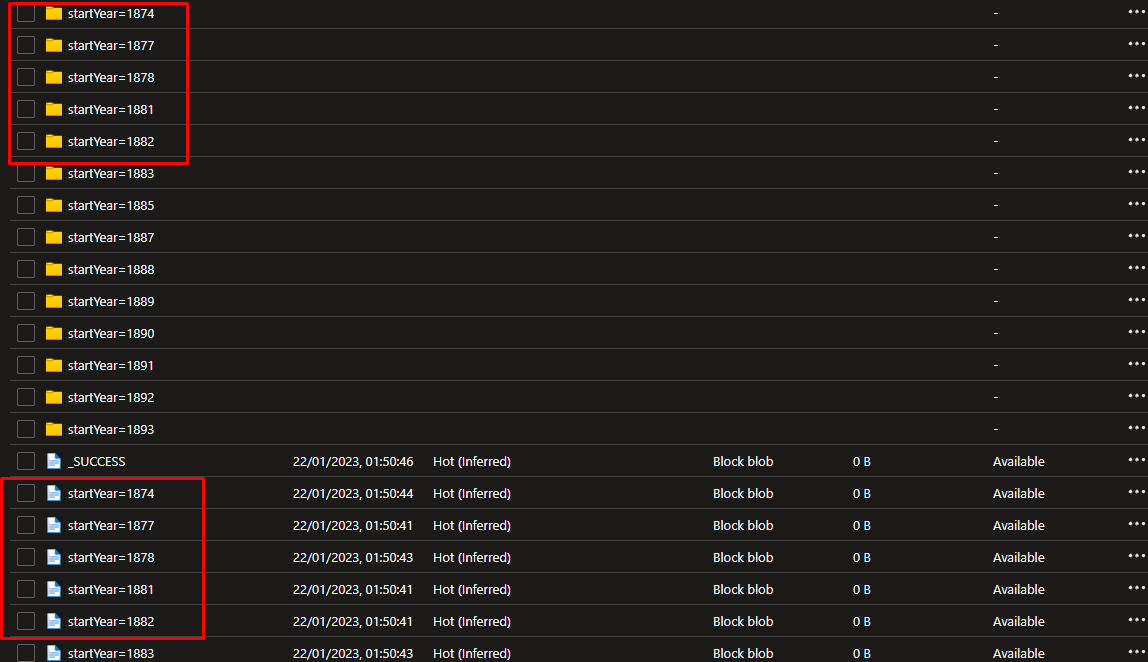

I reproduced the above and got the same results when I use Blob Storage.

> Can someone explain why Spark is creating these additional empty files for every partition, and how to disable it?

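Spark itself does not create these files. Azure Blob Storage has a flat namespace with no real directories, so when partitioned Parquet output is written there, each partition folder (for example `startYear=1990`) is represented by an additional zero-byte blob carrying the folder's name.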
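If you want to check which of the extra entries are folder placeholders, here is a minimal sketch. It assumes the `azure-storage-blob` Python SDK and treats a placeholder as a zero-byte blob whose name is also the prefix of other blobs (for example `out/startYear=1990` sitting next to `out/startYear=1990/part-00000.parquet`). The function names, the connection-string argument, and the example paths are hypothetical.

```python
from typing import Iterable, List, Protocol


class BlobLike(Protocol):
    """Anything with a blob name and a size in bytes (e.g. azure BlobProperties)."""
    name: str
    size: int


def find_folder_placeholders(blobs: Iterable[BlobLike]) -> List[str]:
    """Return zero-byte blobs whose name is also used as a 'folder' prefix.

    Assumption: in a flat namespace, a size-0 blob named 'out/startYear=1990'
    next to blobs such as 'out/startYear=1990/part-00000.parquet' stands in
    for a directory rather than holding data.
    """
    blobs = list(blobs)
    names = [b.name for b in blobs]
    placeholders = []
    for b in blobs:
        if b.size != 0:
            continue  # real data files are never placeholders
        prefix = b.name.rstrip("/") + "/"
        # Keep it only if some other blob lives "under" this name.
        if any(n.startswith(prefix) for n in names if n != b.name):
            placeholders.append(b.name)
    return sorted(placeholders)


def list_placeholders_in_container(conn_str: str, container: str, prefix: str) -> List[str]:
    """Hypothetical helper: list placeholder blobs under `prefix` in a container."""
    # Imported lazily so find_folder_placeholders works without the SDK installed.
    from azure.storage.blob import ContainerClient

    client = ContainerClient.from_connection_string(conn_str, container_name=container)
    # list_blobs returns a flat listing, so placeholder blobs appear as ordinary entries.
    return find_folder_placeholders(client.list_blobs(name_starts_with=prefix))
```

Zero-byte files that are not used as a prefix (such as an empty `_SUCCESS` marker) are deliberately left out, so the result contains only the folder stand-ins.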

You cannot avoid these blobs when writing to Blob Storage. You can avoid them by writing to ADLS storage instead, which has a hierarchical namespace with real directories.
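A minimal sketch of pointing the same write at ADLS Gen2, assuming the target storage account has hierarchical namespace enabled (the `abfss://` scheme alone does not change how a flat-namespace account stores folders) and that authentication for the account is already configured on the cluster (not shown). The helper names `abfss_uri` and `write_partitioned` are hypothetical.

```python
def abfss_uri(container: str, account: str, path: str = "") -> str:
    """Build an ADLS Gen2 URI: abfss scheme, 'dfs' endpoint of the account."""
    return f"abfss://{container}@{account}.dfs.core.windows.net/{path.lstrip('/')}"


def write_partitioned(df, output_path: str) -> None:
    """Write `df` as Parquet partitioned by startYear, as in the question.

    The CSV-only 'header' option is dropped because the Parquet writer
    does not use it.
    """
    df.write.mode("overwrite").partitionBy("startYear").parquet(output_path)
```

Usage: `write_partitioned(df, abfss_uri("mycontainer", "myaccount", "output/titles"))`, where the container, account, and path are placeholders for your own values.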

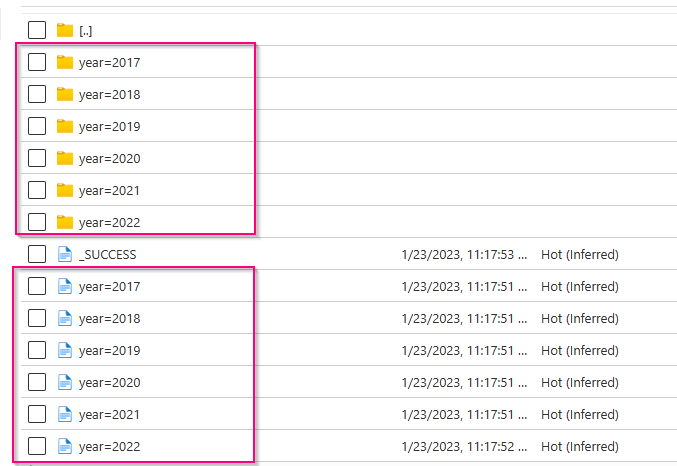

These are my Results with ADLS: