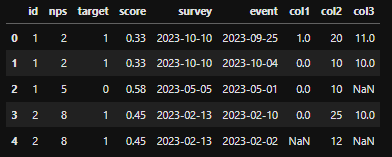

I have a DataFrame in pandas like the one below.

Input data (the survey and event columns have object dtype and hold date strings):

import pandas as pd

data = [
    (1, 2, 1, 0.33, '2023-10-10', '2023-09-25', 1, 20, 11),
    (1, 2, 1, 0.33, '2023-10-10', '2023-10-04', 0, 10, 10),
    (1, 5, 0, 0.58, '2023-05-05', '2023-05-01', 0, 10, None),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-10', 0, 25, 10),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-02', None, 12, None)
]

df = pd.DataFrame(data, columns=['id', 'nps', 'target', 'score', 'survey', 'event', 'col1', 'col2', 'col3'])

df

Requirements:

I need to create new rows for each combination of values in the id and survey columns. The new rows have to meet these conditions:

- For each combination of id and survey, add the dates that are missing from the event column, going back 31 days from the date in the survey column. For example, for id = 1 and survey = '2023-05-05', the event column needs a row for every date from 2023-05-04 back to 2023-04-04, i.e. 31 days backwards for that combination of id and survey.

- Each new row needs to have NaN in the columns col1, col2, col3.

- Each new row needs to have the same values in nps, target and score as the existing rows for that combination of id and survey.

Example output:

So, as a result I need to have something like below:

data = [

(1, 2, 1, 0.33, '2023-10-10', '2023-09-25', 1, 20, 11),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-04', 0, 10, 10),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-09', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-08', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-07', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-06', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-05', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-03', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-02', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-01', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-30', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-29', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-28', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-27', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-26', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-24', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-23', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-22', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-21', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-20', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-19', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-18', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-17', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-16', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-15', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-14', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-13', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-12', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-11', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-10', None, None, None),

(1, 2, 1, 0.33, '2023-10-10', '2023-09-09', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-05-01', 0, 10, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-05-04', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-05-03', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-05-02', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-30', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-29', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-28', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-27', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-26', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-25', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-24', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-23', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-22', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-21', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-20', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-19', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-18', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-17', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-16', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-15', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-14', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-13', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-12', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-11', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-10', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-09', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-08', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-07', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-06', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-05', None, None, None),

(1, 5, 0, 0.58, '2023-05-05', '2023-04-04', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-10', 0, 25, 10),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-02', None, 12, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-12', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-11', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-09', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-08', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-07', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-06', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-05', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-04', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-03', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-01', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-31', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-30', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-29', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-28', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-27', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-26', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-25', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-24', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-23', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-22', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-21', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-20', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-19', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-18', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-17', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-16', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-15', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-14', None, None, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-01-13', None, None, None),

]

df = pd.DataFrame(data, columns=['id', 'nps', 'target', 'score', 'survey', 'event', 'col1', 'col2', 'col3'])

df

How can I do that in pandas?

CodePudding user response:

Try:

df["event"] = pd.to_datetime(df["event"])
df["survey"] = pd.to_datetime(df["survey"])

def fn(g):
    # index by event date so the group can be reindexed onto a daily range
    g = g.set_index("event")
    d = g["survey"].min()
    g = (
        g.reindex(pd.date_range(d - pd.Timedelta(days=31), d))
        .reset_index()
        .rename(columns={"index": "event"})
    )
    # rows added by reindex are all NaN; copy the group's constant values into them
    g[["id", "nps", "target", "score", "survey"]] = (
        g[["id", "nps", "target", "score", "survey"]].ffill().bfill()
    )
    return g

df = df.groupby(["id", "survey"], group_keys=False).apply(fn)

print(df)

Prints:

event id nps target score survey col1 col2 col3

0 2023-04-04 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

1 2023-04-05 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

2 2023-04-06 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

3 2023-04-07 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

4 2023-04-08 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

5 2023-04-09 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

6 2023-04-10 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

7 2023-04-11 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

8 2023-04-12 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

9 2023-04-13 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

10 2023-04-14 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

11 2023-04-15 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

12 2023-04-16 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

13 2023-04-17 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

14 2023-04-18 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

15 2023-04-19 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

16 2023-04-20 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

17 2023-04-21 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

18 2023-04-22 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

19 2023-04-23 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

20 2023-04-24 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

21 2023-04-25 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

22 2023-04-26 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

23 2023-04-27 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

24 2023-04-28 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

25 2023-04-29 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

26 2023-04-30 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

27 2023-05-01 1.0 5.0 0.0 0.58 2023-05-05 0.0 10.0 NaN

28 2023-05-02 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

29 2023-05-03 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

30 2023-05-04 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

31 2023-05-05 1.0 5.0 0.0 0.58 2023-05-05 NaN NaN NaN

0 2023-09-09 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

1 2023-09-10 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

2 2023-09-11 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

3 2023-09-12 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

4 2023-09-13 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

5 2023-09-14 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

6 2023-09-15 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

7 2023-09-16 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

8 2023-09-17 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

9 2023-09-18 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

10 2023-09-19 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

11 2023-09-20 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

12 2023-09-21 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

13 2023-09-22 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

14 2023-09-23 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

15 2023-09-24 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

16 2023-09-25 1.0 2.0 1.0 0.33 2023-10-10 1.0 20.0 11.0

17 2023-09-26 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

18 2023-09-27 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

19 2023-09-28 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

20 2023-09-29 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

21 2023-09-30 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

22 2023-10-01 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

23 2023-10-02 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

24 2023-10-03 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

25 2023-10-04 1.0 2.0 1.0 0.33 2023-10-10 0.0 10.0 10.0

26 2023-10-05 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

27 2023-10-06 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

28 2023-10-07 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

29 2023-10-08 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

30 2023-10-09 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

31 2023-10-10 1.0 2.0 1.0 0.33 2023-10-10 NaN NaN NaN

0 2023-01-13 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

1 2023-01-14 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

2 2023-01-15 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

3 2023-01-16 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

4 2023-01-17 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

5 2023-01-18 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

6 2023-01-19 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

7 2023-01-20 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

8 2023-01-21 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

9 2023-01-22 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

10 2023-01-23 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

11 2023-01-24 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

12 2023-01-25 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

13 2023-01-26 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

14 2023-01-27 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

15 2023-01-28 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

16 2023-01-29 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

17 2023-01-30 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

18 2023-01-31 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

19 2023-02-01 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

20 2023-02-02 2.0 8.0 1.0 0.45 2023-02-13 NaN 12.0 NaN

21 2023-02-03 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

22 2023-02-04 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

23 2023-02-05 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

24 2023-02-06 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

25 2023-02-07 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

26 2023-02-08 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

27 2023-02-09 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

28 2023-02-10 2.0 8.0 1.0 0.45 2023-02-13 0.0 25.0 10.0

29 2023-02-11 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

30 2023-02-12 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN

31 2023-02-13 2.0 8.0 1.0 0.45 2023-02-13 NaN NaN NaN
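Note that the printed result above has 32 rows per group, because the date range runs through the survey date itself, and that id, nps and target come back as floats after the forward/backward fill. Below is a minimal sketch of a variant, assuming the window should end the day before the survey date (as the question describes) and that event dates are unique within each group. The names `key_cols`, `fill_window` and `result` are illustrative only.

```python
import pandas as pd

data = [
    (1, 2, 1, 0.33, '2023-10-10', '2023-09-25', 1, 20, 11),
    (1, 2, 1, 0.33, '2023-10-10', '2023-10-04', 0, 10, 10),
    (1, 5, 0, 0.58, '2023-05-05', '2023-05-01', 0, 10, None),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-10', 0, 25, 10),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-02', None, 12, None),
]
df = pd.DataFrame(data, columns=['id', 'nps', 'target', 'score', 'survey',
                                 'event', 'col1', 'col2', 'col3'])
df["survey"] = pd.to_datetime(df["survey"])
df["event"] = pd.to_datetime(df["event"])

# columns assumed to be constant within each (id, survey) group
key_cols = ["id", "nps", "target", "score", "survey"]

def fill_window(g):
    survey = g["survey"].iloc[0]
    # 31 consecutive days ending the day before the survey date
    window = pd.date_range(end=survey - pd.Timedelta(days=1), periods=31, freq="D")
    out = (
        g.set_index("event")
        .reindex(window)          # existing events keep their values, new dates get NaN
        .rename_axis("event")
        .reset_index()
    )
    # assign the constant values directly so integer columns stay integers
    for col in key_cols:
        out[col] = g[col].iloc[0]
    return out

result = pd.concat(
    [fill_window(g) for _, g in df.groupby(["id", "survey"])],
    ignore_index=True,
)
print(result)
```

Assigning the group's first value to each key column, rather than filling with ffill/bfill, is what avoids the float conversion. Event dates that fall outside the 31-day window would be dropped by the reindex in this sketch.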

CodePudding user response:

You can build these rows with pandas by generating, for each row, the 31 dates that precede its survey date and then filling in the remaining columns.

# import Libraries

import pandas as pd

import numpy as np

from datetime import timedelta

# create the dataframe using your data

data = [

(1, 2, 1, 0.33, '2023-10-10', '2023-09-25', 1, 20, 11),

(1, 2, 1, 0.33, '2023-10-10', '2023-10-04', 0, 10, 10),

(1, 5, 0, 0.58, '2023-05-05', '2023-05-01', 0, 10, None),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-10', 0, 25, 10),

(2, 8, 1, 0.45, '2023-02-13', '2023-02-02', None, 12, None)

]

df = pd.DataFrame(data, columns=['id', 'nps', 'target', 'score', 'survey', 'event', 'col1', 'col2', 'col3'])

df

# convert 'event' and 'survey' columns to datetime type

df['survey'] = pd.to_datetime(df['survey'])

df['event'] = pd.to_datetime(df['event'])

# Generate, for each row, the 31 dates before its survey date (newest first)
date_range_list = [pd.date_range(end=survey_date, periods=32, freq='D')[::-1][1:] for survey_date in df['survey']]

# Build the rows for the new DataFrame
new_data = []
for i, survey_date in enumerate(df['survey']):
    for event_date in date_range_list[i]:
        if pd.to_datetime(df.at[i, 'event']) == event_date:
            # existing event date: keep the original col1, col2, col3
            new_data.append((df.at[i, 'id'], df.at[i, 'nps'], df.at[i, 'target'], df.at[i, 'score'], survey_date, event_date, df.at[i, 'col1'], df.at[i, 'col2'], df.at[i, 'col3']))
        else:
            # new event date: col1, col2, col3 are NaN
            new_data.append((df.at[i, 'id'], df.at[i, 'nps'], df.at[i, 'target'], df.at[i, 'score'], survey_date, event_date, np.nan, np.nan, np.nan))

# Create a new DataFrame from the new_data list

new_df = pd.DataFrame(new_data, columns=['id', 'nps', 'target', 'score', 'survey', 'event', 'col1', 'col2', 'col3'])

# Now, new_df contains the original data plus new rows with 'event' dates from the date range list

new_df

Here is the printed new_df:

id nps target score survey event col1 col2 col3

0 1 2 1 0.33 2023-10-10 2023-10-09 NaN NaN NaN

1 1 2 1 0.33 2023-10-10 2023-10-08 NaN NaN NaN

2 1 2 1 0.33 2023-10-10 2023-10-07 NaN NaN NaN

3 1 2 1 0.33 2023-10-10 2023-10-06 NaN NaN NaN

4 1 2 1 0.33 2023-10-10 2023-10-05 NaN NaN NaN

.. .. ... ... ... ... ... ... ... ...

150 2 8 1 0.45 2023-02-13 2023-01-17 NaN NaN NaN

151 2 8 1 0.45 2023-02-13 2023-01-16 NaN NaN NaN

152 2 8 1 0.45 2023-02-13 2023-01-15 NaN NaN NaN

153 2 8 1 0.45 2023-02-13 2023-01-14 NaN NaN NaN

154 2 8 1 0.45 2023-02-13 2023-01-13 NaN NaN NaN

[155 rows x 9 columns]

After this, you can also sort the DataFrame by the 'id' and 'survey' columns:

new_df = new_df.sort_values(by=['id', 'survey'])

print(new_df)

Print result:

id nps target score survey event col1 col2 col3

62 1 5 0 0.58 2023-05-05 2023-05-04 NaN NaN NaN

63 1 5 0 0.58 2023-05-05 2023-05-03 NaN NaN NaN

64 1 5 0 0.58 2023-05-05 2023-05-02 NaN NaN NaN

65 1 5 0 0.58 2023-05-05 2023-05-01 0.0 10.0 NaN

66 1 5 0 0.58 2023-05-05 2023-04-30 NaN NaN NaN

.. .. ... ... ... ... ... ... ... ...

150 2 8 1 0.45 2023-02-13 2023-01-17 NaN NaN NaN

151 2 8 1 0.45 2023-02-13 2023-01-16 NaN NaN NaN

152 2 8 1 0.45 2023-02-13 2023-01-15 NaN NaN NaN

153 2 8 1 0.45 2023-02-13 2023-01-14 NaN NaN NaN

154 2 8 1 0.45 2023-02-13 2023-01-13 NaN NaN NaN

[155 rows x 9 columns]
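Because the loop runs once per input row, a combination of id and survey that appears in several rows is expanded several times: 5 input rows × 31 days gives the 155 rows shown, whereas 3 combinations × 31 days would give 93. A loop-free sketch that expands each unique combination once is shown below. It assumes pandas 1.2 or later for `how="cross"`, and the names `key_cols`, `keys`, `offsets`, `grid` and `days_back` are illustrative only.

```python
import pandas as pd

data = [
    (1, 2, 1, 0.33, '2023-10-10', '2023-09-25', 1, 20, 11),
    (1, 2, 1, 0.33, '2023-10-10', '2023-10-04', 0, 10, 10),
    (1, 5, 0, 0.58, '2023-05-05', '2023-05-01', 0, 10, None),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-10', 0, 25, 10),
    (2, 8, 1, 0.45, '2023-02-13', '2023-02-02', None, 12, None),
]
df = pd.DataFrame(data, columns=['id', 'nps', 'target', 'score', 'survey',
                                 'event', 'col1', 'col2', 'col3'])
df['survey'] = pd.to_datetime(df['survey'])
df['event'] = pd.to_datetime(df['event'])

key_cols = ['id', 'nps', 'target', 'score', 'survey']

# one row per unique (id, survey) combination with its constant values
keys = df[key_cols].drop_duplicates()

# day offsets 1..31, cross-joined so every combination gets all 31 dates
offsets = pd.DataFrame({'days_back': range(1, 32)})
grid = keys.merge(offsets, how='cross')
grid['event'] = grid['survey'] - pd.to_timedelta(grid['days_back'], unit='D')
grid = grid.drop(columns='days_back')

# a left merge brings back col1..col3 for existing event dates, NaN elsewhere
new_df = grid.merge(
    df[['id', 'survey', 'event', 'col1', 'col2', 'col3']],
    on=['id', 'survey', 'event'],
    how='left',
)
print(new_df)
```

The left merge keeps exactly one row per generated date as long as the original data has no repeated event date within a combination.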

I hope this helps, Good luck with your project!