I have the following project:

    database-migration/
        migration.yml
        kustomization.yml

Where kustomization.yml looks like:

    resources:
    - migration.yml
    images:
    - name: enterprise-server
      newTag: 2020.12-6243
      newName: example/enterprise-server
    configMapGenerator:
    - name: database-config
      literals:
      - ADMIN_REPOSITORY_URL=jdbc:postgresql://10.1.0.34:5432/app_db
      - AGENT_REPOSITORY_URL=jdbc:postgresql://10.1.0.34:5432/app_db
      - DB_CONNECTION_IDLE_TIMEOUT=60000
      - DB_CONNECTION_MAX_LIFETIME_TIMEOUT=120000
    secretGenerator:
    - name: database-credentials
      literals:
      - ADMIN_REPOSITORY_USERNAME=app_admin
      - [email protected]
      - ADMIN_REPOSITORY_PASSWORD=12345
      - AGENT_REPOSITORY_USERNAME=app_agent
      - [email protected]
      - AGENT_REPOSITORY_PASSWORD=23456
    commonLabels:
      app.kubernetes.io/version: 2020.12-6243
      app.kubernetes.io/part-of: myapp

And where migration.yml looks like:

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: database-migration
      labels:
        app.kubernetes.io/name: database-migration
        app.kubernetes.io/component: database-migration
    spec:
      template:
        metadata:
          labels:
            app.kubernetes.io/name: database-migration
            app.kubernetes.io/component: database-migration
        spec:
          containers:
          - name: database-migration
            image: enterprise-server
            env:
            - name: CLOUD_ENVIRONMENT
              value: KUBERNETES
            envFrom:
            - configMapRef:
                name: database-config
            - secretRef:
                name: database-credentials
            command: ['sh', '-c', '/usr/local/app_enterprise/bin/databaseMigration || [ $? -eq 15 ]; exit $?']
          restartPolicy: Never

When I run:

    kubectl create ns appdb
    kubectl -n appdb apply -k database-migration
    kubectl -n appdb wait --for=condition=complete --timeout=10m job/database-migration

It just hangs for 10 minutes and then fails:

    error: timed out waiting for the condition on jobs/database-migration

I'd like to tail the logs, or just look at them after the fact, but I'm not sure how to get logs for a job that has failed or how to tail logs for a job that is still running. Any ideas?

CodePudding user response:

A Job creates pods to do its work. You can list the pods (or all resources in the namespace):

    kubectl -n appdb get all

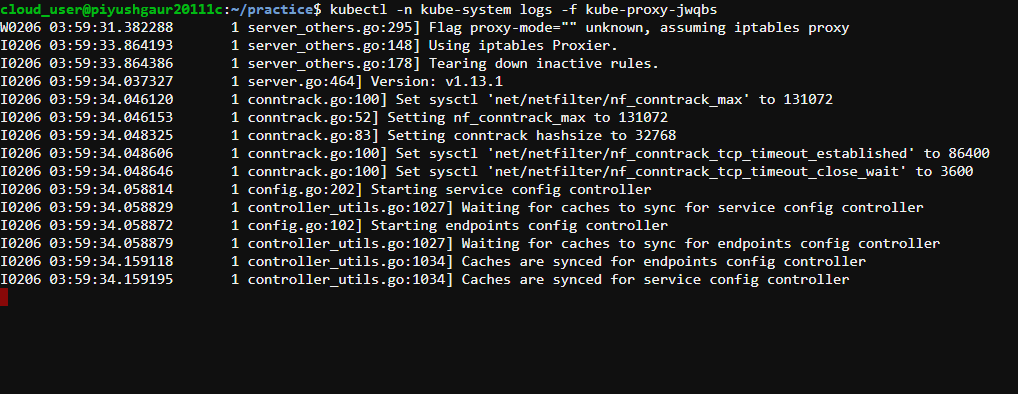

and then request the logs for the pod, for example:

    kubectl -n appdb logs -f database-migration-xyz123
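
Because the pod name carries a random suffix, it can be easier to address the pods through the Job itself. The following is a minimal shell sketch, assuming the pods carry the `job-name` label that the Job controller normally sets; the function names `job_pods` and `job_logs_follow` are made up for illustration and are not part of kubectl.

```shell
#!/bin/sh
# Hypothetical helpers (names are illustrative, not part of kubectl).

# List the pods a Job created.
# Assumption: the Job controller has labelled each pod with job-name=<job name>.
job_pods() {
  # $1 = namespace, $2 = job name
  kubectl -n "$1" get pods --selector="job-name=$2" --output=name
}

# Follow the logs of a Job without looking up the pod name first.
# kubectl resolves job/<name> to one of the Job's pods.
job_logs_follow() {
  # $1 = namespace, $2 = job name
  kubectl -n "$1" logs --follow "job/$2"
}
```

For the Job in the question this would be `job_pods appdb database-migration` followed by `job_logs_follow appdb database-migration`.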

CodePudding user response:

A few notes about Kustomization:

- If you are using `Kustomization`, you can use it to create the `Namespace` as well.
- Add the namespace to your `kustomization.yaml` file so it is applied to all of your resources.
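
One way to apply both notes from the command line is sketched below. It assumes the standalone `kustomize` CLI is installed and uses its `edit add resource` and `edit set namespace` subcommands; the function name `add_namespace_to_kustomization` and the file name `namespace.yml` are hypothetical choices for this example.

```shell
#!/bin/sh
# Hypothetical helper: writes a Namespace manifest into a kustomization
# directory, registers it as a resource, and sets the namespace for all
# resources. Assumes the standalone `kustomize` binary is on PATH.
add_namespace_to_kustomization() {
  dir="$1"   # kustomization directory, e.g. database-migration
  ns="$2"    # namespace name, e.g. appdb

  # Write a minimal Namespace manifest next to the kustomization file.
  cat > "$dir/namespace.yml" <<EOF
apiVersion: v1
kind: Namespace
metadata:
  name: $ns
EOF

  # Run the edits inside the directory so kustomize finds the kustomization file.
  (
    cd "$dir" || exit 1
    kustomize edit add resource namespace.yml
    kustomize edit set namespace "$ns"
  )
}
```

After `add_namespace_to_kustomization database-migration appdb`, a plain `kubectl apply -k database-migration` should create the namespace and place the Job in it, so the separate `kubectl create ns` step and the `-n appdb` flag on `apply` would no longer be needed.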

I'd like to tail logs or just look at logs

In order to view or tail your logs:

    # Get the name of your Job's pod, then print its logs
    kubectl logs -n <namespace> job-xxxxx

    # To tail your logs (follow)
    kubectl logs -n <namespace> -f job-xxxxx

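How to get logs of "crashed" jobs?

- Add the `--previous` flag.

`--previous` prints the logs of the previous instance of a container in the same pod, so it applies when the container was restarted in place. With `restartPolicy: Never`, as in the question, each retry runs in a new pod, and a plain `kubectl logs` on the failed pod works for as long as that pod exists.

    # Add the `--previous` flag to your logs command
    kubectl logs -n <namespace> job-xxxxx --previous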
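
To look at logs after the fact when a Job has gone through several pods, the logs of every pod can be dumped in one pass. This is a rough sketch, again assuming the `job-name` label on the Job's pods and that the failed pods have not been deleted yet; `job_logs_all` is a made-up name.

```shell
#!/bin/sh
# Hypothetical helper: print the logs of every pod a Job has created,
# including pods that already failed or completed.
# Assumption: pods carry the job-name=<job name> label and still exist.
job_logs_all() {
  ns="$1"    # namespace
  job="$2"   # job name

  # Unquoted on purpose: split the newline-separated pod list into words.
  for pod in $(kubectl -n "$ns" get pods --selector="job-name=$job" --output=name); do
    echo "==> $pod"
    kubectl -n "$ns" logs "$pod" --all-containers
  done
}
```

For the question's setup the call would be `job_logs_all appdb database-migration`. If the output is empty, `kubectl -n appdb describe job/database-migration` shows the Job's events, which usually tell whether any pod was created at all.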