I've been working with OpenCV for a while and am experimenting with the DNN module. My model has the input shape [1, 3, 224, 244] with pixel depth uint8. So I pass my m_inputImg, which has 3 channels and 8-bit pixel depth, to the function:

cv::dnn::blobFromImage(m_inputImg, m_inputImgTensor, 1.0, cv::Size(), cv::Scalar(), false, false, CV_8U);

Now I'd like to get an idea of how my input image is laid out inside the cv::Mat tensor. In theory I know what the tensor looks like, but I don't understand how OpenCV does it. To understand this, I want to extract one colour channel. I've tried this:

cv::Mat blueImg = cv::Mat(cp->getModelConfigs().model[0].input.height,
                          cp->getModelConfigs().model[0].input.width,
                          CV_8UC3,
                          blob.ptr<uint8_t>(0, 0));

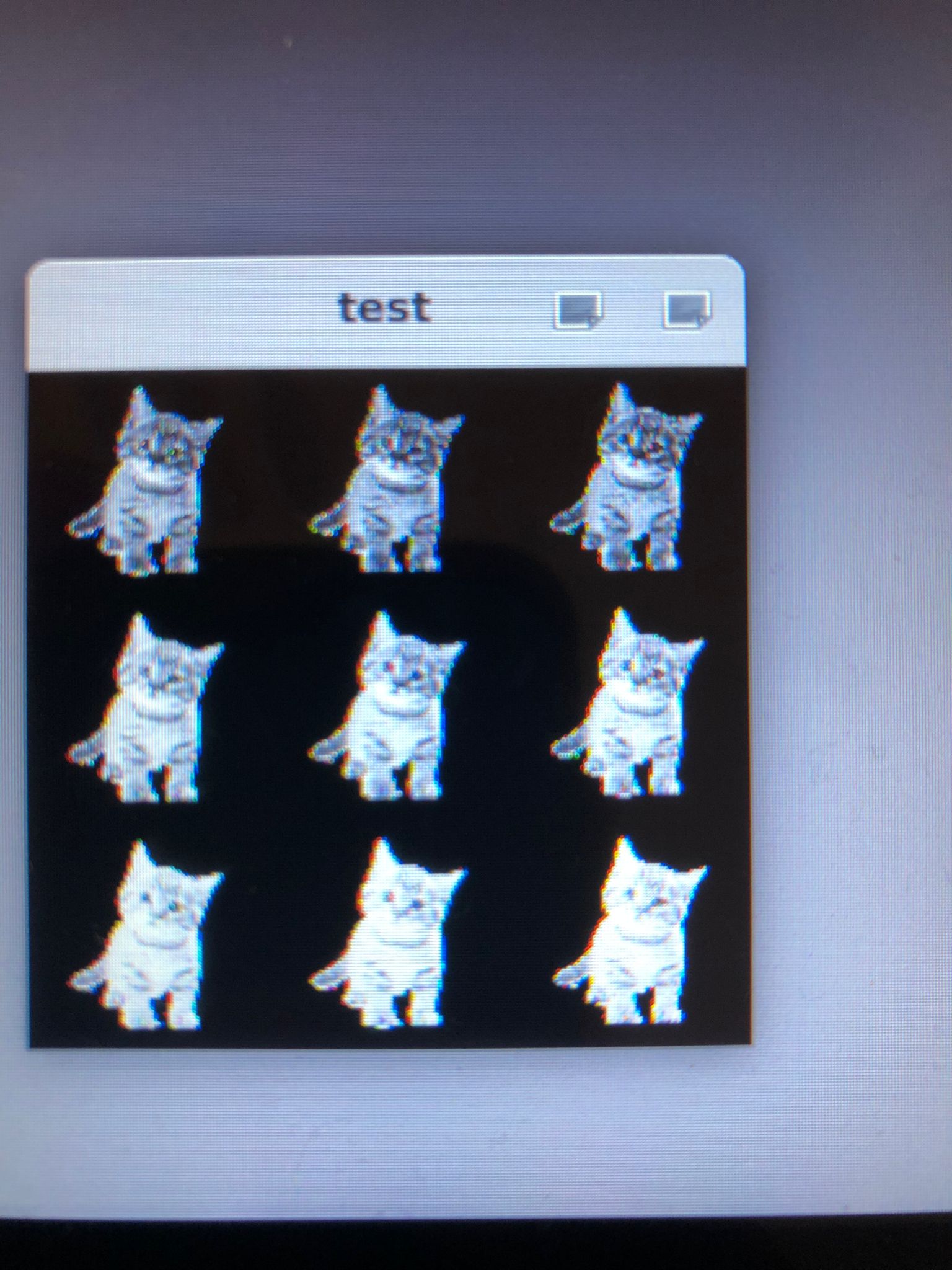

But what I get looks like this (see picture). I'm really confused by that. Can anybody help or offer some good advice? Thanks.

CodePudding user response:

Passing cv::Size() makes blobFromImage keep the original image size. You are interpreting the data incorrectly. Here are four ways to interpret the start of a blob created from the 512x512 Lenna image with cv::Size():

input (512x512):

- blob-start as a 512x512 single channel image:

- blob-start as a 512x512 BGR image:

- blob-start as a 224x224 BGR image:

- blob-start as a 224x224 single channel:

Here's the code:

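#include <opencv2/opencv.hpp>

int main()
{
    cv::Mat img = cv::imread("C:/data/Lenna.png"); // 8UC3, BGR channel order
    if (img.empty()) return 1; // image could not be loaded
    cv::imshow("img", img);

    // cv::Size() keeps the original size, so the blob has shape [1, 3, img.rows, img.cols].
    cv::Mat blob;
    cv::dnn::blobFromImage(img, blob, 1.0, cv::Size(), cv::Scalar(), false, false, CV_8U);

    // Right size, one channel: shows the first channel plane.
    // With swapRB = false that plane is blue, despite the variable name.
    cv::Mat redImg = cv::Mat(img.rows, img.cols, CV_8UC1, blob.ptr<uint8_t>(0, 0));
    cv::imshow("blob 1", redImg);
    cv::imwrite("red1.jpg", redImg);

    // Right size, but the planar data is read as if it were interleaved BGR.
    cv::Mat redImg3C = cv::Mat(img.rows, img.cols, CV_8UC3, blob.ptr<uint8_t>(0, 0));
    cv::imshow("redImg3C", redImg3C);
    cv::imwrite("red3C.jpg", redImg3C);

    // Wrong size and wrong channel layout.
    cv::Mat redImg224_3C = cv::Mat(224, 224, CV_8UC3, blob.ptr<uint8_t>(0, 0));
    cv::imshow("redImg224_3C", redImg224_3C);
    cv::imwrite("redImg224_3C.jpg", redImg224_3C);

    // Right channel layout, but the row length does not match the blob.
    cv::Mat redImg224_1C = cv::Mat(224, 224, CV_8UC1, blob.ptr<uint8_t>(0, 0));
    cv::imshow("redImg224_1C", redImg224_1C);
    cv::imwrite("redImg224_1C.jpg", redImg224_1C);

    cv::waitKey(0);
}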
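The reason only the single-channel view with the matching size looks right is that blobFromImage stores the data planar (NCHW): all values of the first channel, then all values of the second, and so on. An 8UC3 cv::Mat stores the channels interleaved per pixel instead. As a rough illustration, the following sketch does the index arithmetic on plain buffers without OpenCV. The helper names nchwOffset, hwcOffset and interleavedToPlanar are made up for this example and are not OpenCV functions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Offset of element (n, c, y, x) in a planar NCHW buffer
// with C channels, H rows and W columns per image.
inline std::size_t nchwOffset(std::size_t n, std::size_t c, std::size_t y, std::size_t x,
                              std::size_t C, std::size_t H, std::size_t W)
{
    assert(c < C && y < H && x < W);
    return ((n * C + c) * H + y) * W + x;
}

// Offset of channel c of pixel (y, x) in an interleaved HWC buffer,
// which is how a continuous 8UC3 image keeps its pixels.
inline std::size_t hwcOffset(std::size_t y, std::size_t x, std::size_t c,
                             std::size_t W, std::size_t C)
{
    assert(x < W && c < C);
    return (y * W + x) * C + c;
}

// Hypothetical helper: repack one interleaved image into a planar blob with N = 1.
std::vector<std::uint8_t> interleavedToPlanar(const std::vector<std::uint8_t>& hwc,
                                              std::size_t H, std::size_t W, std::size_t C)
{
    assert(hwc.size() == H * W * C);
    std::vector<std::uint8_t> nchw(hwc.size());
    for (std::size_t y = 0; y < H; ++y)
        for (std::size_t x = 0; x < W; ++x)
            for (std::size_t c = 0; c < C; ++c)
                nchw[nchwOffset(0, c, y, x, C, H, W)] = hwc[hwcOffset(y, x, c, W, C)];
    return nchw;
}
```

For a 2x2 image with the pixels (1,2,3), (4,5,6), (7,8,9), (10,11,12), interleavedToPlanar yields 1,4,7,10, 2,5,8,11, 3,6,9,12. The first H*W bytes are one complete channel, which is why wrapping the blob start as a single-channel image of the right size shows a sensible picture.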

IMHO, this is what you have to do in your code:

cv::dnn::blobFromImage(m_inputImg, blob, 1.0, cv::Size(), cv::Scalar(), false, false, CV_8U);

cv::Mat blueImg = cv::Mat(m_inputImg.rows,
                          m_inputImg.cols,
                          CV_8UC1, // one channel plane of the planar blob, not CV_8UC3
                          blob.ptr<uint8_t>(0, 0));

OR

cv::dnn::blobFromImage(m_inputImg, blob, 1.0,
                       cv::Size(cp->getModelConfigs().model[0].input.width,
                                cp->getModelConfigs().model[0].input.height),
                       cv::Scalar(), false, false, CV_8U);

cv::Mat blueImg = cv::Mat(cp->getModelConfigs().model[0].input.height,
                          cp->getModelConfigs().model[0].input.width,
                          CV_8UC1, // one channel plane of the planar blob, not CV_8UC3
                          blob.ptr<uint8_t>(0, 0));

In addition, here's a version that sets the spatial size of the blob to a fixed size (e.g. the desired DNN input size):

cv::Mat blob2;
cv::dnn::blobFromImage(img, blob2, 1.0, cv::Size(224, 224), cv::Scalar(), false, false, CV_8U);

cv::Mat blueImg224_1C = cv::Mat(224, 224, CV_8UC1, blob2.ptr<uint8_t>(0, 0));
cv::imshow("blueImg224_1C", blueImg224_1C);
cv::imwrite("blueImg224_1C.jpg", blueImg224_1C);