I wanted to compare my manual computation of precision and recall with scikit-learn's functions. However, `precision_score()` and `recall_score()` give me very different results from my manual values, and I'm not sure why. Could you please advise why the results differ? Thanks!

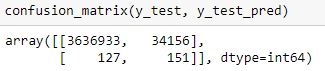

My confusion matrix:

```python
from sklearn.metrics import confusion_matrix

tp, fn, fp, tn = confusion_matrix(y_test, y_test_pred).ravel()
print('Outcome values : \n', tp, fn, fp, tn)
```

```
Outcome values :
 3636933 34156 127 151
```

My manual computation:

```python
recall = tp / (tp + fn)  # TPR / recall / sensitivity
print('Recall: %.3f' % recall)        # Recall: 0.991

precision = tp / (tp + fp)
print('Precision: %.3f' % precision)  # Precision: 1.000
```

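The same metrics from scikit-learn:

```python
from sklearn.metrics import precision_score, recall_score

precision = precision_score(y_test, y_test_pred)
print('Precision: %f' % precision)

recall = recall_score(y_test, y_test_pred)
print('Recall: %f' % recall)
```

```
Precision: 0.004401
Recall: 0.543165
```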

Answer:

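For a binary problem, `confusion_matrix(...).ravel()` returns the counts in the order `tn, fp, fn, tp`, so your unpacking assigned the wrong names to the values (the variable you called `tp` actually holds the true negatives). It should be: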

```python
tn, fp, fn, tp = confusion_matrix(y_test, y_test_pred).ravel()
```
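As a sanity check, here is the arithmetic with the four numbers printed in the question, relabelled in the order `ravel()` actually uses (`tn=3636933, fp=34156, fn=127, tp=151`). It uses only those printed counts, no other data:

```python
# Counts printed in the question, relabelled with the order that
# confusion_matrix(...).ravel() returns for binary labels.
tn, fp, fn, tp = 3636933, 34156, 127, 151

precision = tp / (tp + fp)  # 151 / 34307
recall = tp / (tp + fn)     # 151 / 278

print('Precision: %f' % precision)  # Precision: 0.004401
print('Recall: %f' % recall)        # Recall: 0.543165
```

This reproduces the scikit-learn output. It also explains the manual values: with the original unpacking, the "recall" formula computed `tn / (tn + fp)` (the specificity, about 0.991) and the "precision" formula computed `tn / (tn + fn)` (the negative predictive value, about 1.000).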

For details, please refer here.
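The ordering follows from the matrix layout: scikit-learn puts true labels on the rows and predicted labels on the columns, so for labels 0 and 1 row 0 is `[tn, fp]` and row 1 is `[fn, tp]`, and flattening row by row gives `tn, fp, fn, tp`. The sketch below rebuilds that layout in plain Python to make this visible; `binary_confusion_matrix` and `ravel` are hypothetical helpers written for illustration, not scikit-learn's implementation, and the toy labels are made up:

```python
def binary_confusion_matrix(y_true, y_pred):
    """Hypothetical helper: a 2x2 confusion matrix for labels 0 and 1.

    Laid out like scikit-learn's: row index = true label,
    column index = predicted label.
    """
    matrix = [[0, 0], [0, 0]]
    for true_label, pred_label in zip(y_true, y_pred):
        matrix[true_label][pred_label] += 1
    return matrix


def ravel(matrix):
    """Flatten row by row (row-major), as NumPy's ravel() does by default."""
    return [value for row in matrix for value in row]


y_true = [0, 0, 0, 1, 1, 1, 1]
y_pred = [0, 1, 1, 1, 0, 1, 1]

# Row 0 is [tn, fp], row 1 is [fn, tp], so the flat order is tn, fp, fn, tp.
tn, fp, fn, tp = ravel(binary_confusion_matrix(y_true, y_pred))

precision = tp / (tp + fp)
recall = tp / (tp + fn)
print(tn, fp, fn, tp)     # 1 2 1 3
print(precision, recall)  # 0.6 0.75
```

On these same lists, `sklearn.metrics.confusion_matrix(y_true, y_pred).ravel()` should give `[1, 2, 1, 3]`, and `precision_score` / `recall_score` should give 0.6 and 0.75. If your labels are not 0/1, passing `labels=[negative_label, positive_label]` to `confusion_matrix` fixes the row and column order explicitly.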