I try to read an excel file into a pandas data frame. But I only need the columns till a marker called 'End'. This is just a string in a cell in an otherwise empty column, in the excel file. The number of columns before this marker can change in number, so I can't set fixed columns.

What I tried so far was to read the whole excel file into a data frame. Now I'm trying to drop all columns after one column contains the 'End' string or select all columns before the 'End' marker.

How can I achieve this?

Maybe something like this?

df = df.iloc[:, : df != 'End']

I tried to find the column containing 'End' like this:

end = [col for col in df.columns if 'End' in col]

But the list stays empty.

P.S. Some cells have a background color but contain no content nor formula. Somehow pandas reads them as just empty cells and I can't fill them with na values for some reason. I tried pd.read_excel(***, na_values='^\s $') and df.fillna('', inplace=True) in various variations with no success. Maybe you have an idea how to fix this as well.

EDIT:

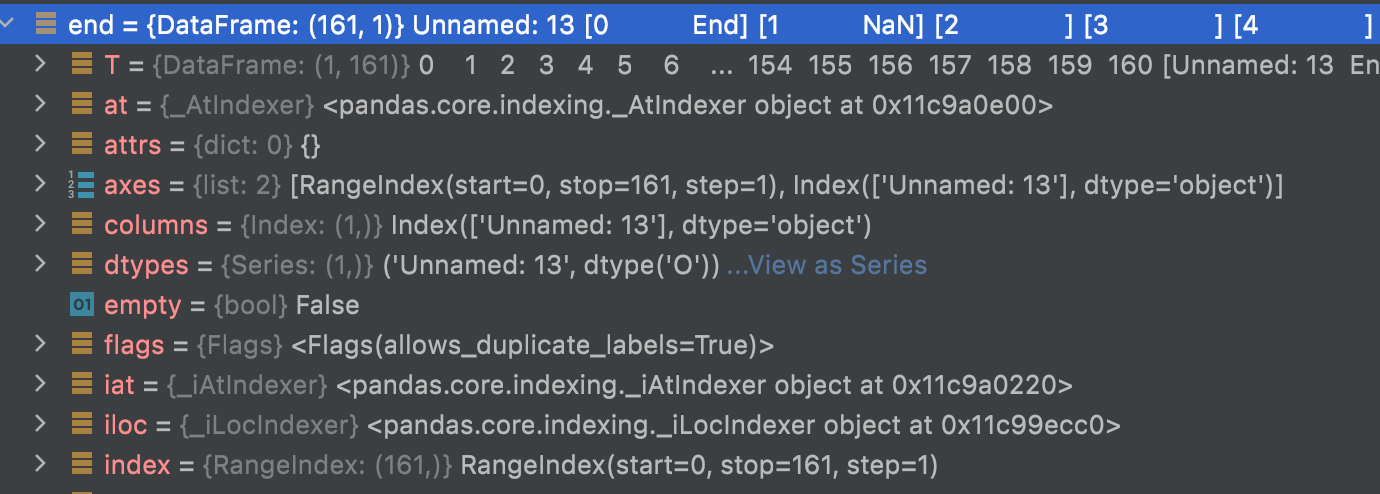

The 'End' is located in the first cell of the column. After import into a data frame it reads:

| Unnamed 13 |

|---|

| End |

| NaN |

| *empty |

With *empty meaning that this cell in the data frame is just blank, and I can't transform into nan. In the Excel file this column has a background color. Maybe that's why pandas gets confused. These empty cell show up wherever the Excel has an empty cell with background color.

CodePudding user response:

If I understand it right, you want to find first column containing 'End' and filtering columns from 0 till that column. If so, you could try:

Sample dataframe:

df = pd.DataFrame({'Column1': [1, 2, 3],

'Column2': [4, 5, 6],

'Column_End': [7, 8, 9],

'Column3': [10, 11, 12]})

Solution:

end_col = df.columns.get_loc([x for x in df.columns if 'End' in x][-1])

df = df.iloc[:, 0:end_col 1]

Output:

Column1 Column2 Column_End

0 1 4 7

1 2 5 8

2 3 6 9

By using list comprehension we found last column containing 'End' in the name. Then we took position of this column by using get_loc() function. When we know the exact position, we filtered certain columns from the dataframe by using iloc.

If it's possible that sometimes you have column with 'End' in the document and sometimes don't - you can add an exception for IndexError and assign len(df.columns) to end_col. Then you just get all columns from the document.

EDIT

If column names always start from second row in excel you can add skiprows=1 to read_excel() as a parameter and then use the solution I provided. If you want to keep 'Unnamed' columns, but filter through the first row below columns you can do:

end_col = df.iloc[0, :].to_list().index([x for x in df.iloc[0, :] if 'End' in x][-1])

...and then using iloc as above.

CodePudding user response:

After some time I found a solution which works for me. The reason why I had to differ from @Arkadiusz solution is that the 'End' substring was not in the column title of the data frame. Which I didn't make clear in the text of my question. Otherwise, their solution works perfectly fine. This is the code that worked for me in the end:

end = df.loc[:, (df == 'End').any()].columns

end_name = end.values[0]

df = df.loc[:, :end_name]

EDIT: The empty cells contained empty strings ''. Using this code:

df = df.replace(r'^\s $', np.nan, regex=True)

I was able to fix this issue as well.