I have implemented a CNN autoencoder whose inputs are not square, and I am a bit confused. Is a square input shape mandatory for autoencoders? Each 2D image has a shape of 800x20, and I have fed the data according to that shape, but the shapes do not match when I train the model. I have shared the model code and the error message below. I would appreciate your expert advice. Thanks.

```python
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, UpSampling2D
from tensorflow.keras.models import Model

x = Input(shape=(800, 20, 1))

# Encoder
conv1_1 = Conv2D(16, (3, 3), activation='relu', padding='same')(x)
pool1 = MaxPooling2D((2, 2), padding='same')(conv1_1)
conv1_2 = Conv2D(8, (3, 3), activation='relu', padding='same')(pool1)
pool2 = MaxPooling2D((2, 2), padding='same')(conv1_2)
conv1_3 = Conv2D(8, (3, 3), activation='relu', padding='same')(pool2)
h = MaxPooling2D((2, 2), padding='same')(conv1_3)

# Decoder
conv2_1 = Conv2D(8, (3, 3), activation='relu', padding='same')(h)
up1 = UpSampling2D((2, 2))(conv2_1)
conv2_2 = Conv2D(8, (3, 3), activation='relu', padding='same')(up1)
up2 = UpSampling2D((2, 2))(conv2_2)
conv2_3 = Conv2D(16, (3, 3), activation='relu', padding='same')(up2)
up3 = UpSampling2D((2, 2))(conv2_3)
r = Conv2D(1, (3, 3), activation='sigmoid', padding='same')(up3)

model = Model(inputs=x, outputs=r)
model.compile(optimizer='adadelta', loss='binary_crossentropy', metrics=['accuracy'])

# x_train / x_test: my data, shape (num_samples, 800, 20, 1)
results = model.fit(x_train, x_train, epochs=500, batch_size=16, validation_data=(x_test, x_test))
```

Here is the error:

```
ValueError: logits and labels must have the same shape ((16, 800, 24, 1) vs (16, 800, 20, 1))
```

Answer:

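A square input is not mandatory. As the error trace indicates, the issue is that the model's output shape does not match its input shape: the input is (?, 800, 20, 1), but the output is (?, 800, 24, 1), while the target you pass to fit() is the same (800, 20, 1) image. The height survives the round trip because 800 can be halved three times without remainder (800 -> 400 -> 200 -> 100, then back up to 800). The width cannot: MaxPooling2D with padding='same' rounds up, so 20 -> 10 -> 5 -> 3, and three 2x upsamplings then give 3 -> 6 -> 12 -> 24. (Check your model summary's input and output shapes!)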
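The shape arithmetic above can be checked without TensorFlow. The sketch below is a plain-Python helper (`trace_axis` is a hypothetical name, not a Keras API). It assumes MaxPooling2D with padding='same' and default strides (equal to the pool size), for which each pooling step gives ceil(size / factor), and UpSampling2D, which multiplies the size by its factor; Conv2D with padding='same' and stride 1 leaves the size unchanged, so it is ignored.

```python
import math


def trace_axis(size, pool_factors, up_factors):
    """Hypothetical helper: follow one spatial axis through encoder and decoder.

    Assumes MaxPooling2D(padding='same') with strides == pool size, so each
    pooling step yields ceil(size / factor), and UpSampling2D, which multiplies
    the size by its factor. Same-padded stride-1 convolutions are skipped
    because they do not change the size.
    """
    sizes = [size]
    for factor in pool_factors:
        sizes.append(math.ceil(sizes[-1] / factor))
    for factor in up_factors:
        sizes.append(sizes[-1] * factor)
    return sizes


# Pool and upsampling factors per axis in the question's model: (2, 2) everywhere
height = trace_axis(800, [2, 2, 2], [2, 2, 2])
width = trace_axis(20, [2, 2, 2], [2, 2, 2])
print("height:", height)  # [800, 400, 200, 100, 200, 400, 800]
print("width: ", width)   # [20, 10, 5, 3, 6, 12, 24]
```

The final width of 24 is exactly the mismatch reported in the error message.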

My Recommendations -

I have fixed with

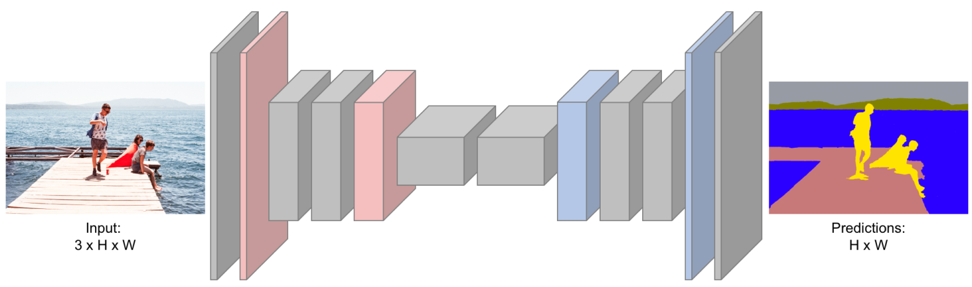

padding = 'same'and re-adjusted the shapes so that the input tensor shape and the output are the same. Check out the kernel sizes that I have modified to get the output shape as needed.On top of that, for a stacked conv encoder-decoder architecture (such as auto-encoder) it is recommended that you translate the spatial information into feature maps/filters/channels with subsequent layers. Right now you start with 8 filters then move to 4 in the encoder. It should be more like

4->8->16. Check this diagram for reference towards the intuition.
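The (2, 1) choice follows a simple rule: pool by 2 along an axis only while that axis is still even, so `padding='same'` never has to round up and upsampling by the same factors restores the original size. The helper below (`choose_pool_factors` is a hypothetical name, not part of Keras) is a plain-Python sketch of that rule, assuming three pooling stages as in both models here.

```python
import math
from typing import List


def choose_pool_factors(size: int, depth: int) -> List[int]:
    """Hypothetical helper: pick a pooling factor (2 or 1) for each of `depth`
    stages along one axis, halving only while the size is still even."""
    factors = []
    for _ in range(depth):
        if size % 2 == 0:
            factors.append(2)
            size //= 2
        else:
            # an odd size would be rounded up by padding='same', so don't pool this axis
            factors.append(1)
    return factors


height_factors = choose_pool_factors(800, 3)
width_factors = choose_pool_factors(20, 3)
pool_sizes = list(zip(height_factors, width_factors))
print(pool_sizes)  # [(2, 2), (2, 2), (2, 1)]

# Because the encoder never rounds, upsampling by the product of the same
# factors brings each axis back to its original size.
for size, factors in ((800, height_factors), (20, width_factors)):
    reduced = size
    for f in factors:
        reduced = math.ceil(reduced / f)
    assert reduced * math.prod(factors) == size
```

The resulting pool sizes match the encoder of the modified model. In the decoder, only the product of the upsampling factors per axis determines the final size, so (2, 2), (2, 2), (2, 1) in either order restores 800x20.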

Here is the model with the above recommendations applied:

```python
from tensorflow.keras.layers import Input, Conv2D, MaxPooling2D, UpSampling2D
from tensorflow.keras.models import Model

x = Input(shape=(800, 20, 1))

# Encoder: filters grow 4 -> 8 -> 16 while the spatial size shrinks
conv1_1 = Conv2D(4, (3, 3), activation='relu', padding='same')(x)
pool1 = MaxPooling2D((2, 2), padding='same')(conv1_1)   # 800x20 -> 400x10
conv1_2 = Conv2D(8, (3, 3), activation='relu', padding='same')(pool1)
pool2 = MaxPooling2D((2, 2), padding='same')(conv1_2)   # 400x10 -> 200x5
conv1_3 = Conv2D(16, (3, 3), activation='relu', padding='same')(pool2)
h = MaxPooling2D((2, 1), padding='same')(conv1_3)       # <------ 200x5 -> 100x5 (width not pooled)

# Decoder
conv2_1 = Conv2D(16, (3, 3), activation='relu', padding='same')(h)
up1 = UpSampling2D((2, 2))(conv2_1)                      # 100x5 -> 200x10
conv2_2 = Conv2D(8, (3, 3), activation='relu', padding='same')(up1)
up2 = UpSampling2D((2, 2))(conv2_2)                      # 200x10 -> 400x20
conv2_3 = Conv2D(4, (3, 3), activation='sigmoid', padding='same')(up2)
up3 = UpSampling2D((2, 1))(conv2_3)                      # <------ 400x20 -> 800x20 (width not upsampled)
r = Conv2D(1, (3, 3), activation='sigmoid', padding='same')(up3)

model = Model(inputs=x, outputs=r)
model.compile(optimizer='adadelta', loss='binary_crossentropy', metrics=['accuracy'])
model.summary()
```

```
Model: "model_13"
_________________________________________________________________
Layer (type)                 Output Shape              Param #
=================================================================
input_16 (InputLayer)        [(None, 800, 20, 1)]      0
_________________________________________________________________
conv2d_101 (Conv2D)          (None, 800, 20, 4)        40
_________________________________________________________________
max_pooling2d_45 (MaxPooling (None, 400, 10, 4)        0
_________________________________________________________________
conv2d_102 (Conv2D)          (None, 400, 10, 8)        296
_________________________________________________________________
max_pooling2d_46 (MaxPooling (None, 200, 5, 8)         0
_________________________________________________________________
conv2d_103 (Conv2D)          (None, 200, 5, 16)        1168
_________________________________________________________________
max_pooling2d_47 (MaxPooling (None, 100, 5, 16)        0
_________________________________________________________________
conv2d_104 (Conv2D)          (None, 100, 5, 16)        2320
_________________________________________________________________
up_sampling2d_43 (UpSampling (None, 200, 10, 16)       0
_________________________________________________________________
conv2d_105 (Conv2D)          (None, 200, 10, 8)        1160
_________________________________________________________________
up_sampling2d_44 (UpSampling (None, 400, 20, 8)        0
_________________________________________________________________
conv2d_106 (Conv2D)          (None, 400, 20, 4)        292
_________________________________________________________________
up_sampling2d_45 (UpSampling (None, 800, 20, 4)        0
_________________________________________________________________
conv2d_107 (Conv2D)          (None, 800, 20, 1)        37
=================================================================
Total params: 5,313
Trainable params: 5,313
Non-trainable params: 0
_________________________________________________________________
```
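The Param # column can be reproduced by hand as a sanity check: a Conv2D layer with a kh x kw kernel, c_in input channels and c_out filters has (kh * kw * c_in + 1) * c_out parameters, the +1 being each filter's bias (Keras Conv2D uses a bias by default). Pooling and upsampling layers have no weights. A quick plain-Python check against the summary above (`conv2d_params` is just an illustrative helper, not a Keras function):

```python
def conv2d_params(kernel_h, kernel_w, in_channels, filters):
    # each filter has kernel_h * kernel_w * in_channels weights plus one bias
    return (kernel_h * kernel_w * in_channels + 1) * filters


# (input channels, filters) for the seven 3x3 Conv2D layers in the summary
layers = [(1, 4), (4, 8), (8, 16), (16, 16), (16, 8), (8, 4), (4, 1)]
counts = [conv2d_params(3, 3, c_in, c_out) for c_in, c_out in layers]
print(counts)       # [40, 296, 1168, 2320, 1160, 292, 37]
print(sum(counts))  # 5313
```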

To show that the model now trains, here is a random dataset with the same shape as your data:

```python
import numpy as np

# 16 random "images" of shape 800x20x1, used as both input and target
x_train = np.random.random((16, 800, 20, 1))
model.fit(x_train, x_train, epochs=2)
```

```
Epoch 1/2
1/1 [==============================] - 0s 180ms/step - loss: 0.7526 - accuracy: 0.0000e+00
Epoch 2/2
1/1 [==============================] - 0s 189ms/step - loss: 0.7526 - accuracy: 0.0000e+00
<tensorflow.python.keras.callbacks.History at 0x7fa85a9896d0>
```