I want to import all data(around 200 000 rows) from csv file (which has 4 columns) into already created table (table has 5 columns) in sqlite3 database using Python (Pandas dataframe). Data types in csv and table satisfy with each other. Problem is that, in the table there is a one extra column which is index_of(Primary Key).

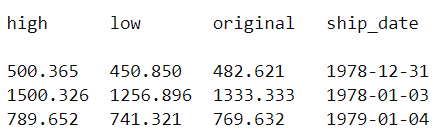

These are the first 3 lines of my csv file:

That's all I could do, I guess if it will work, it takes about 5-6 hours, because in this code I used for loop to read every row:

connection = _sqlite3.connect("db_name.sqlite")

cursor = connection.cursor()

with open('path_to_csv', 'r') as file:

no_records = 0

for row in file:

cursor.execute("INSERT INTO table_name (index_of, high, low, original, ship_date) VALUES (?,?,?,?,?)", row.split(","))

connection.commit()

no_records = 1

connection.close()

but it shows me an error: Exception has occurred: OperationalError 5 values for 4 columns

Please, can you help me with this:

How to import 200 000 rows fast using Python?

How to import all columns from csv file into the table's specific columns?

CodePudding user response:

You need to provide a default value for the 5th column.

You could also improve the performance of the script if you insert chunks of 100-200 rows in each SQL sentence.

CodePudding user response:

user3380595 has already pointed out in their answer that you need to provide a value for the column index_of.

cursor.execute("""

INSERT INTO Quotes (index_of, high, low, original, ship_date)

VALUES (?, ?, ?, ?, ?)

""", [index, *row])

I created 200,000 lines of test data and it loaded quite fast (less than 2 seconds). See first example using csv and sqlite3.

As user3380595 mentioned, you could load the data in chunks if you are concerned about memory and performance. This scenario was actually loaded slightly slower. See second example using pandas and sqlalchemy.

Using csv and sqlite3

Setup Test Environment

import csv

import sqlite3

import contextlib

import pandas as pd

test_data = r"/home/thomas/Projects/Playground/stackoverflow/data/test.csv"

test_db = r"/home/thomas/Projects/Playground/stackoverflow/data/test.db"

with contextlib.closing(sqlite3.connect(test_db)) as connection:

cursor = connection.cursor()

cursor.execute("DROP TABLE IF EXISTS Quotes;")

cursor.execute("""

CREATE TABLE IF NOT EXISTS Quotes (

index_of INTEGER, -- PRIMARY KEY,

high REAL,

low REAL,

original REAL,

ship_date TEXT

);

""")

connection.commit()

Load Data

with contextlib.closing(sqlite3.connect(test_db)) as connection:

cursor = connection.cursor()

with open(test_data, "r") as file:

for index, row in enumerate(csv.reader(file)):

cursor.execute("""

INSERT INTO Quotes (index_of, high, low, original, ship_date)

VALUES (?, ?, ?, ?, ?)

""", [index, *row])

connection.commit()

Using pandas and sqlalchemy

Setup Test Environment

import pandas as pd

from sqlalchemy import create_engine

test_data = r"/home/thomas/Projects/Playground/stackoverflow/data/test.csv"

test_db = r"sqlite:////home/thomas/Projects/Playground/stackoverflow/data/test.db"

engine = create_engine(test_db, echo=True)

with engine.begin() as connection:

engine.execute("DROP TABLE IF EXISTS Quotes;")

engine.execute("""

CREATE TABLE IF NOT EXISTS Quotes (

index_of INTEGER, -- PRIMARY KEY,

high REAL,

low REAL,

original REAL,

ship_date TEXT

);

""")

Load Data (in chunks)

with engine.begin() as connection:

reader = pd.read_csv(test_data, iterator=True, chunksize=50000)

for chunk in reader:

chunk["index_of"] = chunk.index

chunk.to_sql("Quotes", con=engine, if_exists="append", index=False)

Alternatively, instead of using pandas, you could also use sqlite3.Cursor.executemany and process chunks of rows.

Or, you could use dask.dataframe.to_sql (example assumes an existing database with a table Quotes) and write the data parallel. However, I don't think it's needed with your data.

import dask.dataframe as dd

test_data = r"/home/thomas/Projects/Playground/stackoverflow/data/test.csv"

test_db = r"sqlite:////home/thomas/Projects/Playground/stackoverflow/data/test.db"

df = dd.read_csv(test_data) # , blocksize=2e6

df["index_of"] = df.index

df.to_sql("Quotes", uri=test_db, if_exists="append", index=False, parallel=True)