I'm learning neural networks and trying to use my GPU for it. I'm using:

- Python 3.8

- tensorflow-gpu 2.6.0

- PyCharm

- Jupyter plugin for PyCharm

- Video card: NVIDIA RTX 3080 Ti, 12 GB

I have installed CUDA 11.4 (and a few other versions) and cuDNN v8.2.4.15.

My starting code is pretty standard:

```python
import os

# Let TensorFlow allocate GPU memory on demand instead of reserving it all up front.
# It has to be set before TensorFlow initializes the GPU.
os.environ['TF_FORCE_GPU_ALLOW_GROWTH'] = 'true'

import pandas as pd
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
from tensorflow import keras
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Dense, Flatten, Conv1D
from tensorflow.keras.preprocessing.sequence import TimeseriesGenerator
from sklearn.preprocessing import MinMaxScaler, OneHotEncoder
```

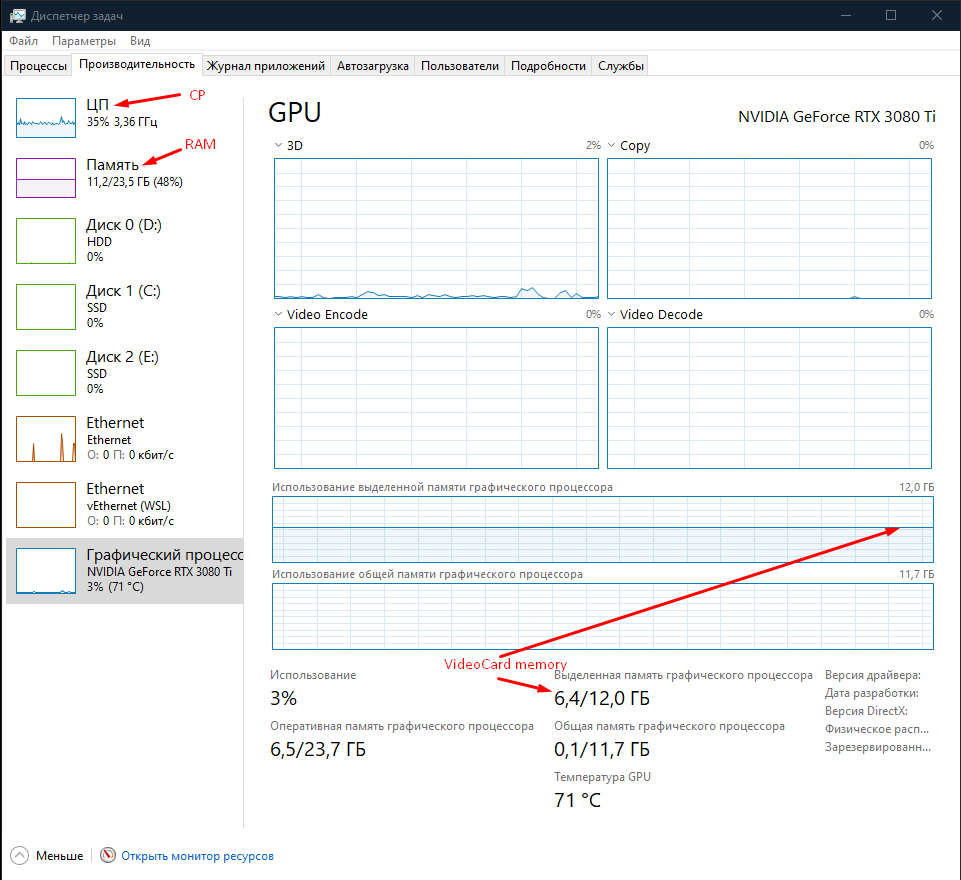

The problem is that when I start training the network and check resources in Task Manager, it looks weird, as if it's using the CPU instead of the GPU. Here is a screenshot (sorry, it's in Russian):

When I launch the process, CPU usage grows from 5% to 40%, and video card memory grows from 2 GB to 6 GB.

It feels like it's using the video card's memory but the CPU for the calculations. Is that possible?

UPD: Jupyter logs:

```
"E:\Program Files\JetBrains\PyCharm 2020.3\bin\runnerw.exe" C:\Users\levsh\AppData\Local\Programs\Python\Python38\python.exe -m jupyter notebook --no-browser --notebook-dir=C:/Users/levsh/PycharmProjects/ipynb
[W 2021-11-01 02:53:42.606 LabApp] 'notebook_dir' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.
[W 2021-11-01 02:53:42.607 LabApp] 'notebook_dir' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.
[W 2021-11-01 02:53:42.607 LabApp] 'notebook_dir' has moved from NotebookApp to ServerApp. This config will be passed to ServerApp. Be sure to update your config before our next release.
[I 2021-11-01 02:53:42.614 LabApp] JupyterLab extension loaded from c:\users\levsh\appdata\local\programs\python\python38\lib\site-packages\jupyterlab
[I 2021-11-01 02:53:42.614 LabApp] JupyterLab application directory is C:\Users\levsh\AppData\Local\Programs\Python\Python38\share\jupyter\lab
[I 02:53:42.620 NotebookApp] Serving notebooks from local directory: C:/Users/levsh/PycharmProjects/ipynb
[I 02:53:42.620 NotebookApp] Jupyter Notebook 6.4.3 is running at:
[I 02:53:42.620 NotebookApp] http://localhost:8888/?token=a34f13cb67f5cf89bff0a8b8242b69a8727197a98ddb298f
[I 02:53:42.620 NotebookApp] or http://127.0.0.1:8888/?token=a34f13cb67f5cf89bff0a8b8242b69a8727197a98ddb298f
[I 02:53:42.620 NotebookApp] Use Control-C to stop this server and shut down all kernels (twice to skip confirmation).
[C 02:53:42.625 NotebookApp]
    To access the notebook, open this file in a browser:
        file:///C:/Users/levsh/AppData/Roaming/jupyter/runtime/nbserver-9860-open.html
    Or copy and paste one of these URLs:
        http://localhost:8888/?token=a34f13cb67f5cf89bff0a8b8242b69a8727197a98ddb298f
     or http://127.0.0.1:8888/?token=a34f13cb67f5cf89bff0a8b8242b69a8727197a98ddb298f
[I 02:53:42.626 NotebookApp] 302 GET /api/kernelspecs/ (127.0.0.1) 0.000000ms
[I 02:53:42.694 NotebookApp] Kernel started: 036ed1a8-7e7e-49bb-8c86-3efcfb4b993c, name: python3
[W 02:53:42.703 NotebookApp] No session ID specified
2021-11-01 02:53:49.739123: I tensorflow/core/platform/cpu_feature_guard.cc:142] This TensorFlow binary is optimized with oneAPI Deep Neural Network Library (oneDNN) to use the following CPU instructions in performance-critical operations:  AVX AVX2
To enable them in other operations, rebuild TensorFlow with the appropriate compiler flags.
2021-11-01 02:53:50.367361: W tensorflow/core/common_runtime/gpu/gpu_bfc_allocator.cc:39] Overriding allow_growth setting because the TF_FORCE_GPU_ALLOW_GROWTH environment variable is set. Original config value was 0.
2021-11-01 02:53:50.367428: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1510] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 9440 MB memory:  -> device: 0, name: NVIDIA GeForce RTX 3080 Ti, pci bus id: 0000:1f:00.0, compute capability: 8.6
2021-11-01 02:53:50.526516: I tensorflow/compiler/mlir/mlir_graph_optimization_pass.cc:185] None of the MLIR Optimization Passes are enabled (registered 2)
2021-11-01 02:53:52.226233: I tensorflow/stream_executor/cuda/cuda_dnn.cc:369] Loaded cuDNN version 8204
2021-11-01 02:53:55.133752: I tensorflow/stream_executor/cuda/cuda_blas.cc:1760] TensorFloat-32 will be used for the matrix multiplication. This will only be logged once.
```

UPD2: Regarding TYZ's comment: no, it's not.

```python
import tensorflow as tf

# Log which device every op is placed on
tf.debugging.set_log_device_placement(True)

print("Num GPUs Available: ", len(tf.config.list_physical_devices('GPU')))
# Num GPUs Available: 1

tf.config.list_physical_devices('GPU')
# [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]

sess = tf.compat.v1.Session(config=tf.compat.v1.ConfigProto(log_device_placement=True))
# Device mapping:
# /job:localhost/replica:0/task:0/device:GPU:0 -> device: 0, name: NVIDIA GeForce RTX 3080 Ti, pci bus id: 0000:1f:00.0, compute capability: 8.6
```

In the logs it looks good too:

```
2021-11-01 04:34:49.606386: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1510] Created device /device:GPU:0 with 9440 MB memory:  -> device: 0, name: NVIDIA GeForce RTX 3080 Ti, pci bus id: 0000:1f:00.0, compute capability: 8.6
2021-11-01 04:36:58.513741: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1510] Created device /job:localhost/replica:0/task:0/device:GPU:0 with 9440 MB memory:  -> device: 0, name: NVIDIA GeForce RTX 3080 Ti, pci bus id: 0000:1f:00.0, compute capability: 8.6
```

Answer:

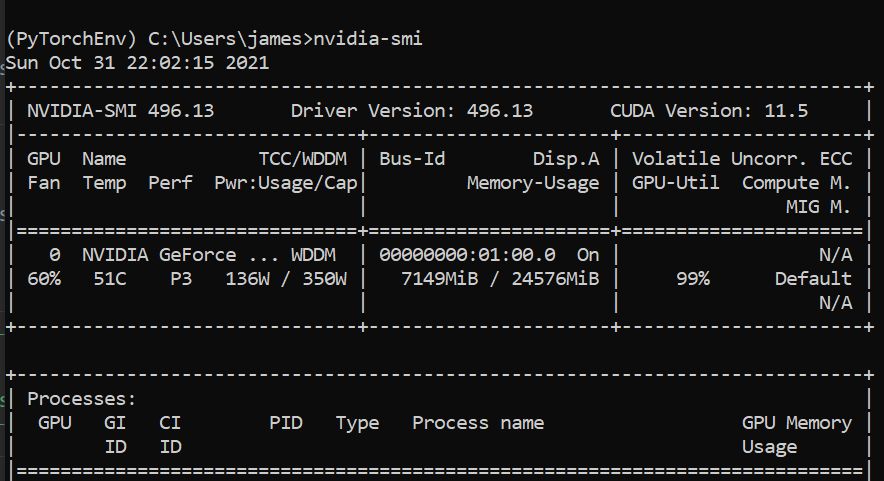

Check `nvidia-smi` (NVIDIA System Management Interface) while the program runs and look at the Volatile GPU-Util column. Task Manager is not a good indicator of GPU usage (nor, in my opinion, a very accurate gauge of other resources like RAM and temperatures). The fact that your 3080 Ti is at 71 °C strongly suggests that the GPU is being used (unless some other process is using it).
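From a terminal, `nvidia-smi -l 1` reprints the full table every second. If you would rather log the same numbers from Python alongside training, here is a minimal sketch, assuming `nvidia-smi` (installed with the NVIDIA driver) is on your PATH. It uses the real `--query-gpu` and `--format=csv,noheader,nounits` options; the function and class names (`sample_gpus`, `watch`, `GpuSample`) are hypothetical helpers for illustration.

```python
import csv
import subprocess
import time
from dataclasses import dataclass
from typing import List, Optional

# Field names understood by `nvidia-smi --query-gpu` (list them with `nvidia-smi --help-query-gpu`)
QUERY_FIELDS = "index,name,utilization.gpu,memory.used,memory.total,temperature.gpu"


@dataclass
class GpuSample:
    index: int
    name: str
    util_percent: Optional[int]   # same metric as the "Volatile GPU-Util" column
    mem_used_mib: Optional[int]
    mem_total_mib: Optional[int]
    temp_c: Optional[int]


def _num(value: str) -> Optional[int]:
    # nvidia-smi prints "[N/A]" or "[Not Supported]" for fields a GPU does not report
    return int(value) if value.isdigit() else None


def parse_query_output(text: str) -> List[GpuSample]:
    """Parse output produced with --format=csv,noheader,nounits (one row per GPU)."""
    samples = []
    for row in csv.reader(text.strip().splitlines(), skipinitialspace=True):
        if not row:
            continue
        idx, name, util, used, total, temp = row
        samples.append(GpuSample(int(idx), name, _num(util), _num(used), _num(total), _num(temp)))
    return samples


def sample_gpus() -> List[GpuSample]:
    """Run nvidia-smi once and return one sample per GPU."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY_FIELDS}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return parse_query_output(out)


def watch(seconds: float = 10.0, interval: float = 1.0) -> None:
    """Print utilization, memory and temperature every `interval` seconds."""
    end = time.monotonic() + seconds
    while time.monotonic() < end:
        for s in sample_gpus():
            print(f"GPU{s.index} {s.name}: util {s.util_percent}% "
                  f"mem {s.mem_used_mib}/{s.mem_total_mib} MiB, temp {s.temp_c} C")
        time.sleep(interval)


if __name__ == "__main__":
    try:
        watch(seconds=3)
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"could not run nvidia-smi: {exc}")
```

Run it (or `nvidia-smi -l 1`) in a separate terminal while the model trains. A utilization that stays high confirms the GPU is doing the work; one that stays near zero while the CPU is busy points to the input-bottleneck case discussed below.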

For instance, I am training on an RTX 3090 right now, and my `nvidia-smi` output from the command line looks like this (I cropped the process list out of the screenshot):

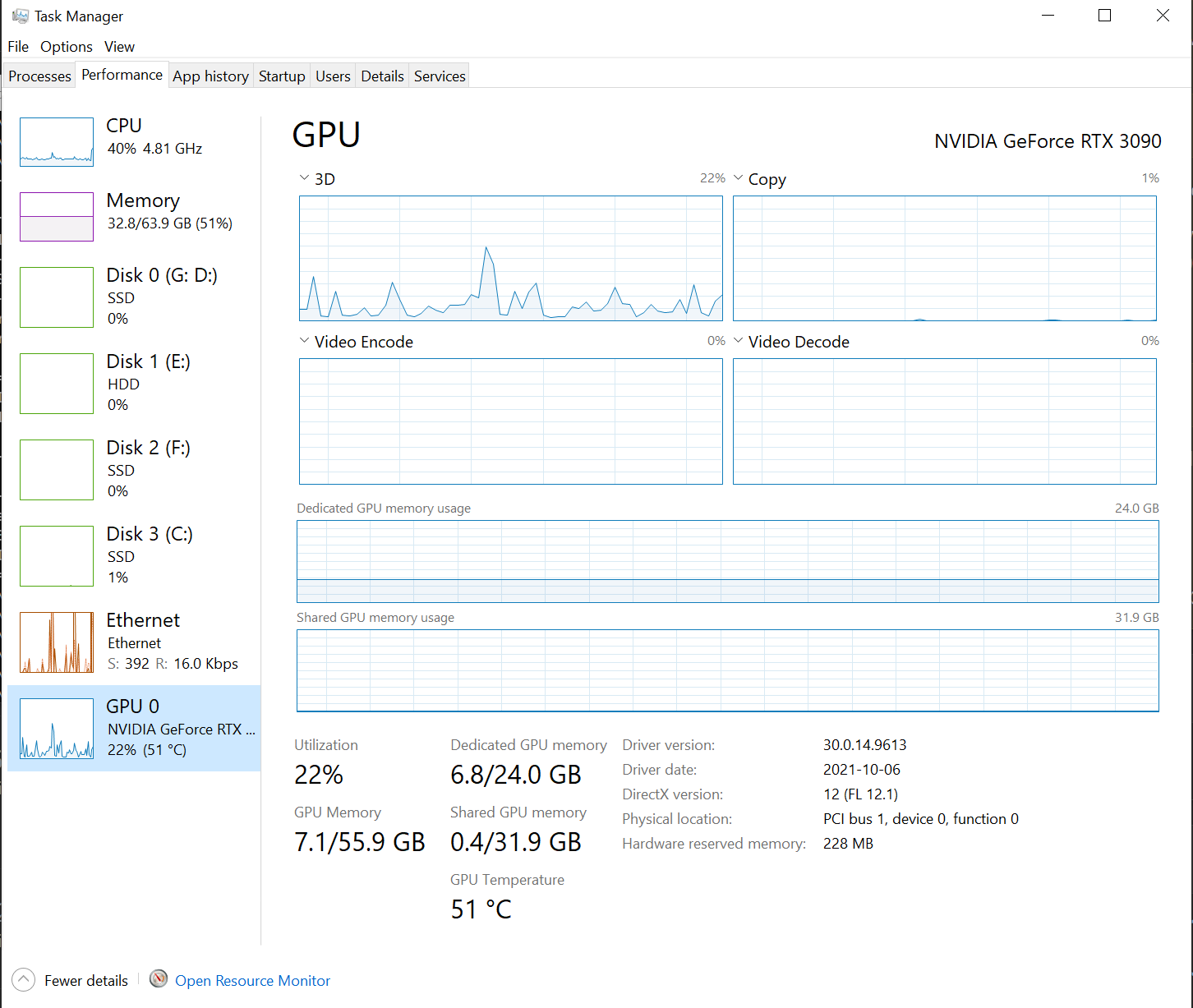

But my Task Manager looks like this (note the GPU usage):

If you do have some sort of I/O bottleneck, i.e. loading tensors on the CPU takes so long that the GPU sits idle, that is a different issue. It can be diagnosed by profiling and solved with tools that make sure the loading process is optimized.
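A rough, framework-agnostic sketch of that kind of profiling: time how long each training step waits for its next batch versus how long the step itself takes. `profile_input_wait`, `batches` and `step` are hypothetical names, not part of any library. In a real loop, `step` must block until the GPU work has finished (for example by fetching the loss as a NumPy value), because TensorFlow dispatches GPU kernels asynchronously and the timings would otherwise be misleading.

```python
import time
from typing import Callable, Iterable, Tuple, TypeVar

T = TypeVar("T")


def profile_input_wait(batches: Iterable[T], step: Callable[[T], None]) -> Tuple[float, float]:
    """Run `step` on every batch; return (seconds waiting for input, seconds inside step)."""
    wait = compute = 0.0
    it = iter(batches)
    while True:
        t0 = time.perf_counter()
        try:
            batch = next(it)          # time spent producing the batch (data loading)
        except StopIteration:
            break
        t1 = time.perf_counter()
        step(batch)                   # time spent in the (blocking) training step
        t2 = time.perf_counter()
        wait += t1 - t0
        compute += t2 - t1
    return wait, compute
```

If the waiting time dominates, the input pipeline (for example a Python-side generator) is the likely bottleneck rather than the GPU. For TensorFlow specifically, the TensorBoard Profiler, enabled through the `profile_batch` argument of `tf.keras.callbacks.TensorBoard`, gives a far more detailed per-step breakdown.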