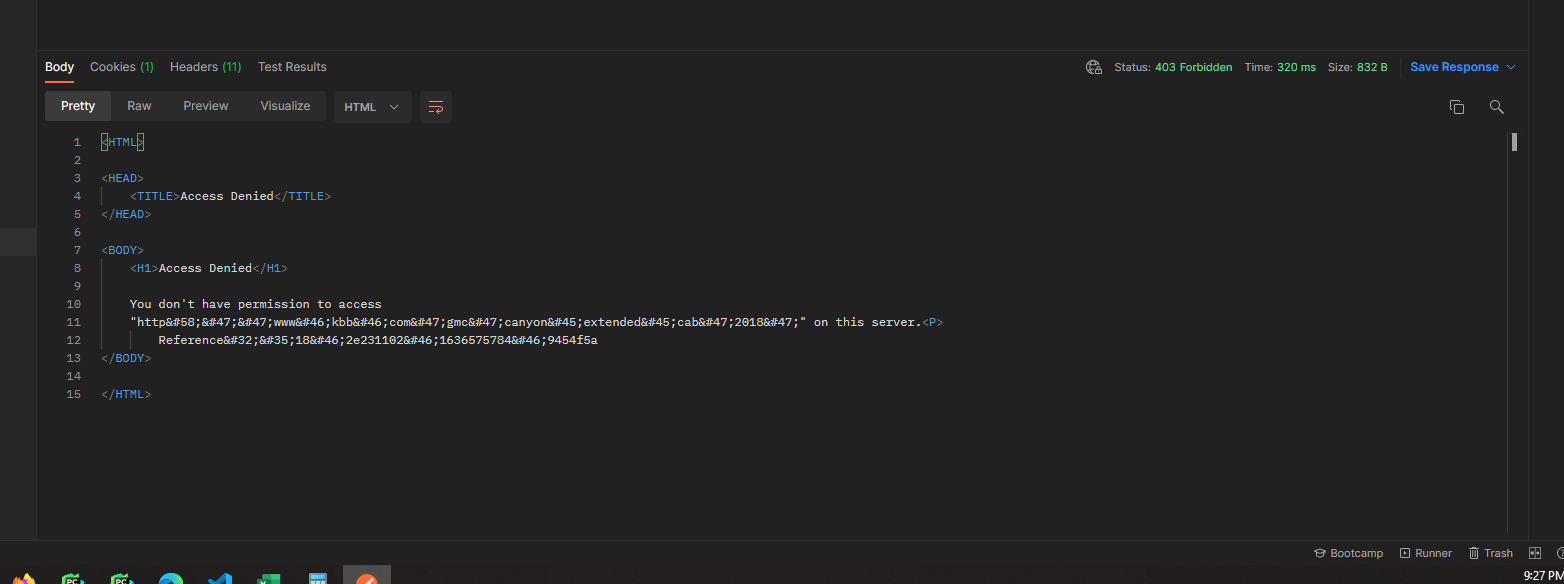

Thanks for reading. For a small research project, I'm trying to gather some data from KBB (

Edit: Solution

Setting a slightly longer timeout works. However, I had to retry several times because the proxy sometimes just doesn't respond.

import urllib.request

proxy_host = '23.107.176.36:32180'

url = "https://www.kbb.com/gmc/canyon-extended-cab/2018/"

proxy_support = urllib.request.ProxyHandler({'https' : proxy_host})

opener = urllib.request.build_opener(proxy_support)

urllib.request.install_opener(opener)

res = urllib.request.urlopen(url, timeout=1000)  # Set a longer timeout (urllib timeouts are in seconds)

print(res.read())

Result

b'<!doctype html><html lang="en"><head><meta http-equiv="X-UA-Compatible" content="IE=edge"><meta charset="utf-8"><meta name="viewport" content="width=device-width,initial-scale=1,maximum-scale=5,minimum-scale=1"><meta http-equiv="x-dns-prefetch-control" content="on"><link rel="dns-prefetch preconnect" href="//securepubads.g.doubleclick.net" crossorigin><link rel="dns-prefetch preconnect" href="//c.amazon-adsystem.com" crossorigin><link .........
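Since the proxy sometimes doesn't respond, the manual retrying could be automated. Below is a minimal sketch of one way to do that with the same `urllib.request` setup. The helper names `build_proxy_opener` and `fetch_with_retries`, the number of attempts, and the delay are my own assumptions for illustration, not part of any library.

```python
import time
import urllib.error
import urllib.request


def build_proxy_opener(proxy_host):
    """Return an opener that routes HTTPS requests through proxy_host."""
    handler = urllib.request.ProxyHandler({"https": proxy_host})
    return urllib.request.build_opener(handler)


def fetch_with_retries(fetch, attempts=5, delay=2.0):
    """Call fetch() until it succeeds or the attempts run out.

    fetch is any zero-argument callable. OSError is caught because it is the
    base class of urllib.error.URLError and of socket timeouts, which is how
    an unresponsive proxy usually surfaces.
    """
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except OSError as exc:
            last_error = exc
            print(f"attempt {attempt} failed: {exc}")
            if attempt < attempts:
                time.sleep(delay)  # hypothetical fixed pause between tries
    raise last_error


# Usage (commented out because it needs network access and a live proxy):
# opener = build_proxy_opener("23.107.176.36:32180")
# url = "https://www.kbb.com/gmc/canyon-extended-cab/2018/"
# html = fetch_with_retries(lambda: opener.open(url, timeout=60).read())
# print(html[:200])
```

Passing the request as a callable keeps the retry loop independent of how the page is fetched, so the same helper should also work around a `requests.get` call, provided the exception type it catches is adjusted accordingly.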

Using Requests

import requests

proxy_host = '23.107.176.36:32180'

url = "https://www.kbb.com/gmc/canyon-extended-cab/2018/"

# NOTE: we need a longer timeout for the proxy to respond, and verify=False to get past an SSL error (this disables certificate verification)

r = requests.get(url, proxies={"https": proxy_host}, timeout=90, verify=False)  # requests timeouts are in seconds, not milliseconds

print(r.text)