I want to get the names of the most important features for logistic regression after transformation.

columns_for_encoding = ['a', 'b', 'c', 'd', 'e', 'f',
                        'g', 'h', 'i', 'j', 'k', 'l', 'm',
                        'n', 'o', 'p']

columns_for_scaling = ['aa', 'bb', 'cc', 'dd', 'ee']

transformerVectoriser = ColumnTransformer(
    transformers=[('Vector Cat', OneHotEncoder(handle_unknown="ignore"), columns_for_encoding),
                  ('Normalizer', Normalizer(), columns_for_scaling)],
    remainder='passthrough')

I know that I can do this:

x_train, x_test, y_train, y_test = train_test_split(features, results, test_size=0.2, random_state=42)
x_train = transformerVectoriser.fit_transform(x_train)
x_test = transformerVectoriser.transform(x_test)

clf = LogisticRegression(max_iter=5000, class_weight={1: 3.5, 0: 1})
model = clf.fit(x_train, y_train)

importance = model.coef_[0]

# summarize feature importance
for i, v in enumerate(importance):
    print('Feature: %0d, Score: %.5f' % (i, v))

# plot feature importance
pyplot.bar([x for x in range(len(importance))], importance)
pyplot.show()

But with this I only get feature1, feature2, feature3, etc., and after transformation I have around 45k features.

How can I get the list of the most important features (before transformation)? I want to know which features are best for the model. I have a lot of categorical features with 100 different categories each, so after encoding I have more features than rows in my dataset. I want to find out which features I can exclude from my dataset and which features are best for my model.

IMPORTANT

I have other features that are used but not transformed, which is why I set remainder='passthrough'.

CodePudding user response:

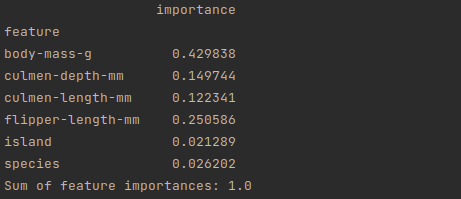

As you are probably already aware, the whole idea of feature importances is a bit tricky for the case of