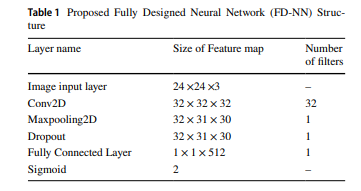

As I understand it, a max-pooling layer operates on the output of a 2D convolution layer and halves its spatial dimensions, but the architecture of this model shows something different. Can anyone tell me why the dimensions decreased only slightly rather than by half, as expected? That is, if the max-pooling layer is applied, shouldn't the output be 16x16x32? Why is it 32x31x30? If a custom output shape is possible, I'd like to know how.

CodePudding user response:

It is clearly a typo. The linked paper (thanks Christoph) says the following about the max-pooling layer (emphasis mine):

> The first network is a Fully Designed Neural Network (FD-NN). The architecture and layers of the model are displayed in Table 1. A 2D convolutional layer with 3×3 filter size used, and Relu assigned as an activation function. Maxpooling with the size of 2×2 applied to reduce the number of features.

If a 2 x 2 window is applied, you are correct that it should reduce the feature map from 32 x 32 x 32 to 16 x 16 x 32. In addition, the number of filters listed in that row is wrong; it should still be 32, because pooling does not change the channel count. This paper has not been formally published in any conference or journal and is only available as a preprint on arXiv, which means it has not been formally vetted or proofread for errors.

As such, if you want clarity on the actual output dimensions, I recommend asking the original authors of the paper. Either way, the output dimensions listed for the max-pooling and dropout layers in this table are incorrect.
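
To check the expected shape yourself, here is a minimal sketch of the usual pooling output-size arithmetic. The function name `pool_output_shape` and its parameters are hypothetical, made up for this illustration. It assumes the stride equals the pool size when not given, which is the common default in frameworks such as Keras, and it is not taken from the paper.

```python
def pool_output_shape(height, width, channels, pool=2, stride=None, padding="valid"):
    """Return (height, width, channels) after a 2D max-pooling layer.

    Hypothetical helper for illustration only; assumes a square pool window.
    """
    if stride is None:
        stride = pool  # assumption: stride defaults to the pool size

    if padding == "valid":
        # No padding: count the windows that fit entirely inside the input.
        out_h = (height - pool) // stride + 1
        out_w = (width - pool) // stride + 1
    elif padding == "same":
        # Input is padded so that the output is ceil(input / stride).
        out_h = -(-height // stride)
        out_w = -(-width // stride)
    else:
        raise ValueError("padding must be 'valid' or 'same'")

    # Pooling acts on each channel independently, so channels are unchanged.
    return (out_h, out_w, channels)


print(pool_output_shape(32, 32, 32))  # (16, 16, 32)
```

With a 2 x 2 window, the spatial dimensions are halved and the channel count stays at 32, which is the 16 x 16 x 32 result the question expected.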