I have a timeseries of half hourly electricity data that looks like this:

Date_Time Metered Electricity (MWh)

0 2016-03-27 00:00:00 8.644511

1 2016-03-27 00:30:00 6.808402

2 2016-03-27 01:00:00 6.507068

3 2016-03-27 01:30:00 5.271631

4 2016-03-27 02:00:00 2.313497

... ... ...

58122 2019-06-30 11:30:00 8.051935

58123 2019-06-30 12:00:00 3.520226

58124 2019-06-30 12:30:00 5.093964

I want to average all of the data points into an average for each half hourly timestep, ultimately so I can create a graph showing the average electricity produced throughout the day.

I've managed to do this for the hourly data using groupby which works fine:

mean_hourly = energy_2018.groupby(energy_2018["Date_Time"].dt.hour).mean()

which I can use if I can't work out how to do groupby half hourly, but it would mean I am missing out on half of all the data. Any idea how to use groupby half hourly so I can use all of the data?

Thank you!

CodePudding user response:

You can make groups and group by hour and minute. Since you have only recorded data in intervals of half an hour you get one distinct group per hour and per 30 minutes.

import pandas as pd

df = pd.DataFrame({

'time': ['2016-03-27 00:00:00',

'2016-03-27 00:00:00',

'2016-03-27 00:30:00',

'2016-03-27 01:00:00',

'2016-03-27 01:30:00',

'2019-06-30 11:30:00',

'2019-06-30 12:00:00',

'2019-06-30 12:30:00'],

'electricity': [8.644511,

6.808402,

6.507068,

5.271631,

2.313497,

8.051935,

3.520226,

5.093964]

})

df['time'] = pd.to_datetime(df['time'])

df['minutes'] = df['time'].apply(lambda x: x.minute)

df['hour'] = df['time'].apply(lambda x: x.hour)

df.groupby(['hour', 'minutes']).mean()

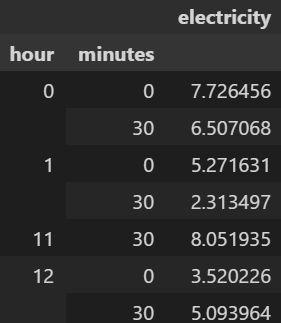

Output:

EDIT:

As indicated in Quang Hoang's comment, a better way to get the hours and the minutes would be

df['minutes'] = df['time'].dt.minute

df['hour'] = df['time'].dt.hour

It is better to use existing solutions from the standard library, to increase readability and performance. On the other hand lambda expressions do provide some flexibility and can be quite useful at times. If you are interested you can read more here:

Why are Python lambdas useful?

CodePudding user response:

df = pd.read_excel('test.xlsx')

print(df)

output:

Date_Time Metered Electricity (MWh)

0 2016-03-27 00:00:00 1

1 2016-03-27 00:29:00 2

2 2016-03-27 00:59:00 3

3 2016-03-27 00:57:00 4

4 2016-03-27 02:00:00 5

Then do this:

df.set_index('Date_Time',inplace=True)

df = df.resample("30T").mean().reset_index()

print(df)

output:

Date_Time Metered Electricity (MWh)

0 2016-03-27 00:00:00 1.5

1 2016-03-27 00:30:00 3.5

2 2016-03-27 01:00:00 NaN

3 2016-03-27 01:30:00 NaN

4 2016-03-27 02:00:00 5.0

EDIT

or just this:

df = df.set_index('Date_Time').resample("30T").mean().reset_index()

CodePudding user response:

energy_2018.groupby(energy_2018["Date_Time"].astype('int64') / 1e9 % 86400 / 3600).mean()

CodePudding user response:

To produce an aggregated value that is the same for all rows within that 'grouped aggregated time', use a combination of pd.groupby and pd.Grouper. The trick to return the aggregated value to every row instead of a reshaped dataframe is to use the transform function afterward (I used numpy's mean (np.mean) so be sure to import numpy as np in the code). If you need more specific flexibility (something like every 10 minutes but start the first minute at some offset minute instead of zero (e.g. 00:02:00 rather than 00:00:00) then additional parameters are needed.

import numpy as np

energy_2018["mean_hourly"] = energy_2018.groupby(pd.Grouper(key="Date_Time", freq="60Min")).transform(np.mean)

Date_Time Metered Electricity (MWh) mean_hourly

2016-03-27 00:03:00 8.644511 7.726456

2016-03-27 00:31:00 6.808402 7.726456

2016-03-27 01:00:00 6.507068 5.889349

2016-03-27 01:30:00 5.271631 5.889349

2016-03-27 02:00:00 2.313497 2.313497