Terraform plan always forces the AKS cluster to be recreated when the worker node count in the node pool is increased

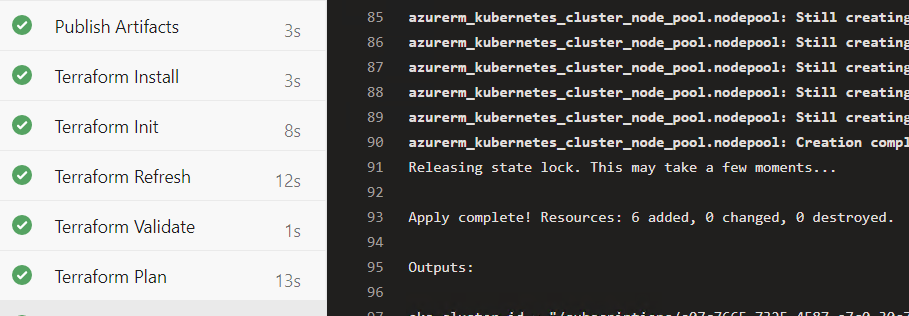

I created an AKS cluster with 1 worker node via Terraform. It went well, and the cluster is up and running.

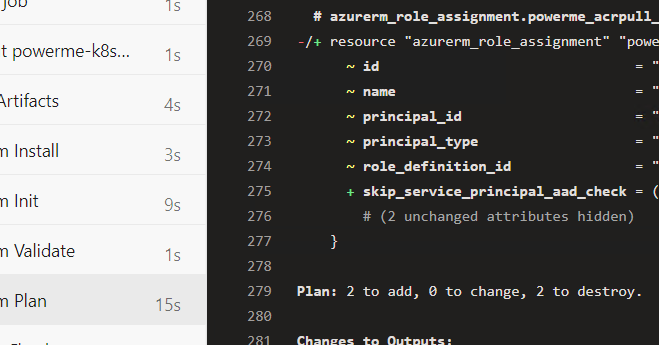

After that, I tried to add one more worker node to my AKS cluster, and the Terraform plan shows: Plan: 2 to add, 0 to change, 2 to destroy.

I am not sure how to increase the number of worker nodes in the AKS node pool if doing so deletes the existing node pool.

    default_node_pool {
      name                  = var.nodepool_name
      vm_size               = var.instance_type
      orchestrator_version  = data.azurerm_kubernetes_service_versions.current.latest_version
      availability_zones    = var.zones
      enable_auto_scaling   = var.node_autoscalling
      node_count            = var.instance_count
      enable_node_public_ip = var.publicip
      vnet_subnet_id        = data.azurerm_subnet.subnet.id

      node_labels = {
        "node_pool_type" = var.tags[0].node_pool_type
        "environment"    = var.tags[0].environment
        "nodepool_os"    = var.tags[0].nodepool_os
        "application"    = var.tags[0].application
        "manged_by"      = var.tags[0].manged_by
      }
    }

Error

    Terraform used the selected providers to generate the following execution
    plan. Resource actions are indicated with the following symbols:
    -/+ destroy and then create replacement

    Terraform will perform the following actions:

      # azurerm_kubernetes_cluster.aks_cluster must be replaced
    -/+ resource "azurerm_kubernetes_cluster" "aks_cluster" {

Thanks, Satyam

CodePudding user response:

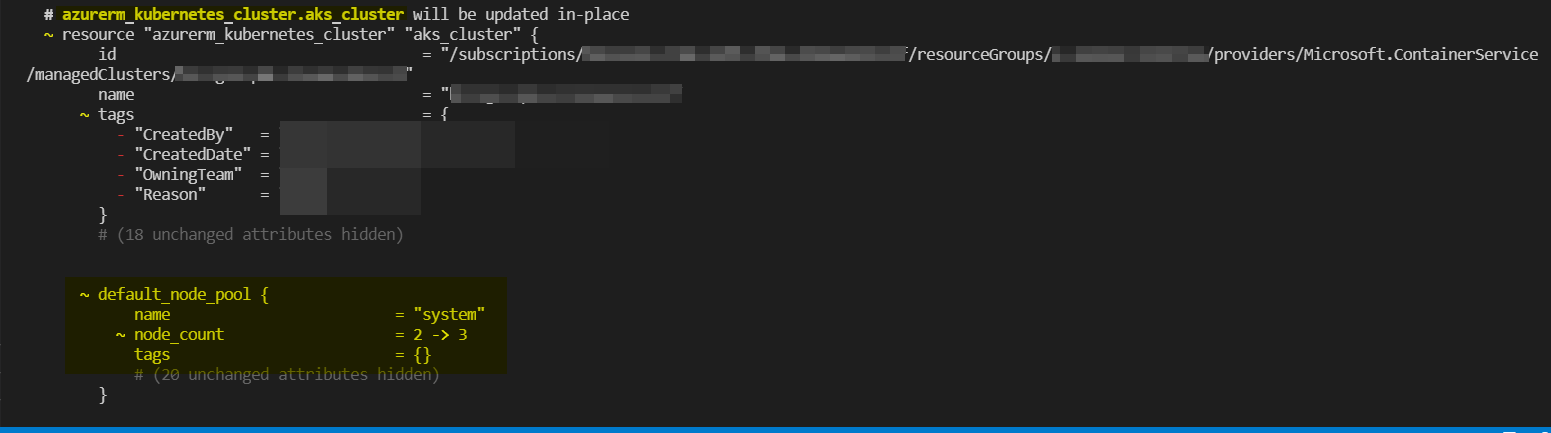

I tested the same in my environment by creating a cluster with a node count of 2 and then changing it to 3, using something like the following:

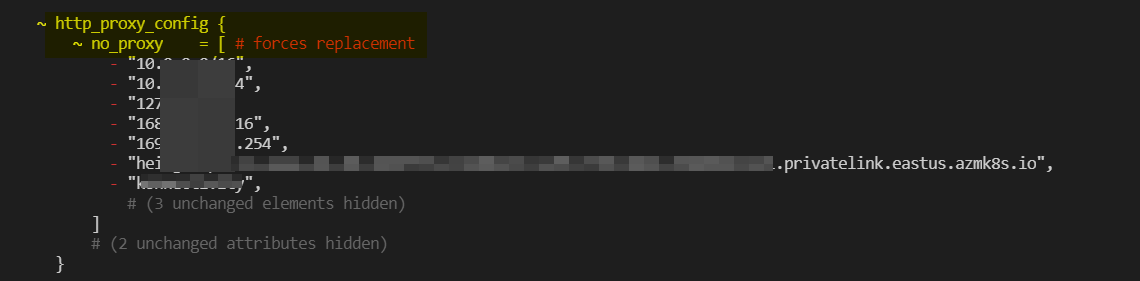

If you are using http_proxy_config, a detected change to that block forces a replacement by default, and that is why the whole cluster gets replaced with the new configuration.

As a solution, you can add a lifecycle block to your code, as I have done below:

    lifecycle {
      ignore_changes = [http_proxy_config]
    }
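Ignoring the whole http_proxy_config block also hides deliberate proxy changes. A narrower variant, sketched here under the assumption that only the no_proxy list drifts after creation (as far as I know, AKS can append its own entries to it), ignores just that one attribute. Whether this is enough depends on which attribute your plan reports as forcing replacement, so treat it as a starting point rather than a confirmed fix.

```hcl
resource "azurerm_kubernetes_cluster" "aks_cluster" {
  # ... other arguments unchanged ...

  lifecycle {
    # Assumption: only the no_proxy list changes outside Terraform.
    # Index [0] addresses the single http_proxy_config block.
    ignore_changes = [http_proxy_config[0].no_proxy]
  }
}
```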

The complete code will be:

    resource "azurerm_kubernetes_cluster" "aks_cluster" {
      name                    = "${var.global-prefix}-${var.cluster-id}-${var.envid}-azwe-aks-01"
      location                = data.azurerm_resource_group.example.location
      resource_group_name     = data.azurerm_resource_group.example.name
      dns_prefix              = "${var.global-prefix}-${var.cluster-id}-${var.envid}-azwe-aks-01"
      kubernetes_version      = var.cluster-version
      private_cluster_enabled = var.private_cluster

      default_node_pool {
        name                  = var.nodepool_name
        vm_size               = var.instance_type
        orchestrator_version  = data.azurerm_kubernetes_service_versions.current.latest_version
        availability_zones    = var.zones
        enable_auto_scaling   = var.node_autoscalling
        node_count            = var.instance_count
        enable_node_public_ip = var.publicip
        vnet_subnet_id        = azurerm_subnet.example.id
      }

      # RBAC and Azure AD Integration Block
      role_based_access_control {
        enabled = true
      }

      http_proxy_config {
        http_proxy  = "http://xxxx"
        https_proxy = "http://xxxx"
        no_proxy    = ["localhost", "xxx", "xxxx"]
      }

      # Identity (System Assigned or Service Principal)
      identity {
        type = "SystemAssigned"
      }

      # Add On Profiles
      addon_profile {
        azure_policy {
          enabled = true
        }
      }

      # Network Profile
      network_profile {
        network_plugin = "azure"
        network_policy = "calico"
      }

      lifecycle {
        ignore_changes = [http_proxy_config]
      }
    }
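With the lifecycle block in place, scaling the default node pool comes down to changing the value behind var.instance_count. A minimal sketch, assuming the variable is declared as a number (the declaration, description and default here are illustrative, not taken from the question):

```hcl
# Hypothetical declaration for the variable that node_count reads from.
variable "instance_count" {
  description = "Number of worker nodes in the default node pool"
  type        = number
  default     = 1
}
```

Passing a new value, for example terraform plan -var="instance_count=2", should then show the cluster as updated in place (~) instead of replaced (-/+). If the plan still shows -/+, the attribute annotated with "# forces replacement" in the plan output indicates what else is triggering the recreation.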