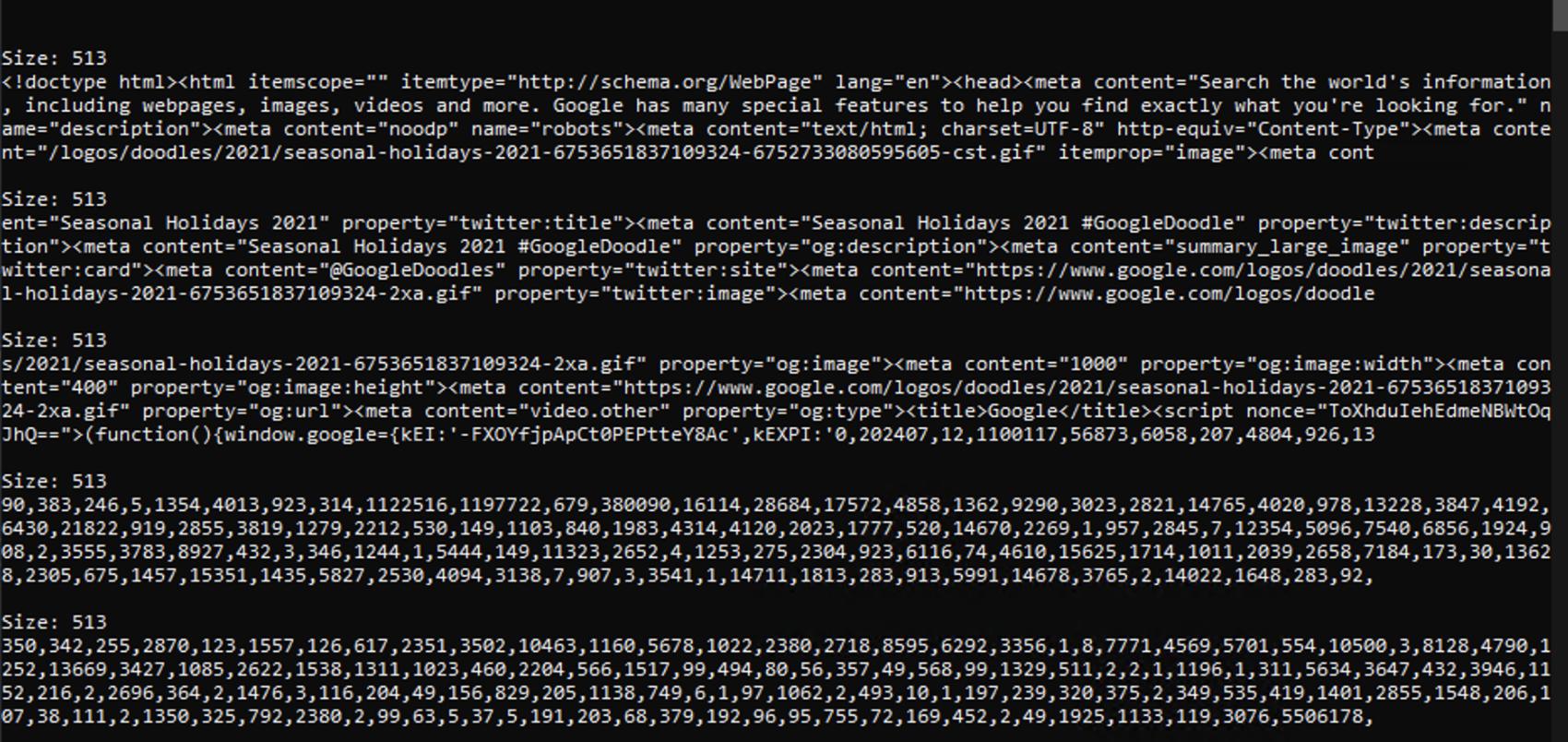

I am trying to fetch HTML text from my server using WinINet, but the response is being read in 513-byte chunks. I would prefer to fetch the data as a whole. Is there a way around this?

Here is the full code for reference:

#include <windows.h>

#include <wininet.h>

#include <stdio.h>

#include <iostream>

using namespace std;

#pragma comment (lib, "Wininet.lib")

int main(int argc, char** argv) {

HINTERNET hSession = InternetOpen(L"Mozilla/5.0", INTERNET_OPEN_TYPE_PRECONFIG, NULL, NULL, 0);

HINTERNET hConnect = InternetConnect(hSession, L"www.google.com", 0, L"", L"", INTERNET_SERVICE_HTTP, 0, 0);

HINTERNET hHttpFile = HttpOpenRequest(hConnect, L"GET", L"/", NULL, NULL, NULL, 0, 0);

while (!HttpSendRequest(hHttpFile, NULL, 0, 0, 0)) {

printf("Server Down.. (%lu)\n", GetLastError());

InternetErrorDlg(GetDesktopWindow(), hHttpFile, ERROR_INTERNET_CLIENT_AUTH_CERT_NEEDED, FLAGS_ERROR_UI_FILTER_FOR_ERRORS | FLAGS_ERROR_UI_FLAGS_GENERATE_DATA | FLAGS_ERROR_UI_FLAGS_CHANGE_OPTIONS, NULL);

}

DWORD dwFileSize;

dwFileSize = BUFSIZ;

char* buffer;

buffer = new char[dwFileSize + 1];

while (true) {

DWORD dwBytesRead;

BOOL bRead;

bRead = InternetReadFile(hHttpFile, buffer, dwFileSize + 1, &dwBytesRead);

if (dwBytesRead == 0) break;

if (!bRead) {

printf("InternetReadFile error : <%lu>\n", GetLastError());

}

else {

buffer[dwBytesRead] = 0;

std::string newbuff = buffer;

std::wcout << "\n\nSize: " << newbuff.size() << std::endl;

std::cout << newbuff;

}

}

InternetCloseHandle(hHttpFile);

InternetCloseHandle(hConnect);

InternetCloseHandle(hSession);

}

CodePudding user response:

dwFileSize = BUFSIZ;

If you look at your header files, you will discover that BUFSIZ is #defined as 512 (that is its value in the Microsoft C runtime's <stdio.h>).

buffer = new char[dwFileSize + 1];

// ...

bRead = InternetReadFile(hHttpFile, buffer, dwFileSize + 1, &dwBytesRead);

Your program allocates a buffer of 513 bytes (BUFSIZ plus one) and then reads the input 513 bytes at a time. That is exactly the result you're observing, and it is the only thing the shown code knows how to do.

If you want a larger buffer, you can easily change the size. There is also no reason to allocate the buffer with new: all that does is create an opportunity for a memory leak (the shown code never calls delete[]). Use std::vector instead, or a plain array for a small buffer like this one.

Unless you know the size of the file in advance, you have no alternative but to read it in pieces like this. Even though the data arrives in pieces, the file is still retrieved in a single request; the shown code simply reads the response one chunk at a time, which is perfectly fine. Switching to a larger buffer might improve performance, but not dramatically.
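If what you really want is the whole response in one string, one option is to keep reading in chunks and append each chunk to a std::string. The following is a minimal sketch of that idea, not a drop-in replacement for your program: `read_all` and `ReadFn` are hypothetical names introduced here, and the read step is passed in as a callback so the loop itself stays independent of WinINet. The comment shows how the callback could wrap InternetReadFile, assuming the `hHttpFile` handle from the question.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <string>
#include <vector>

// Hypothetical callback type: copies up to `capacity` bytes into `dst`,
// stores the number of bytes copied in `bytesRead`, returns false on error.
// With WinINet it could wrap the call from the question, for example:
//   [&](char* dst, std::size_t capacity, std::size_t& bytesRead) {
//       DWORD n = 0;
//       BOOL ok = InternetReadFile(hHttpFile, dst,
//                                  static_cast<DWORD>(capacity), &n);
//       bytesRead = n;
//       return ok != FALSE;
//   }
using ReadFn =
    std::function<bool(char* dst, std::size_t capacity, std::size_t& bytesRead)>;

// Reads chunks until the callback reports 0 bytes (end of data) and appends
// every chunk to `out`. Returns false as soon as a read fails.
bool read_all(const ReadFn& readChunk, std::string& out,
              std::size_t chunkSize = 8192) {
    assert(chunkSize > 0);
    std::vector<char> buffer(chunkSize);  // freed automatically, no delete[]
    for (;;) {
        std::size_t bytesRead = 0;
        if (!readChunk(buffer.data(), buffer.size(), bytesRead)) {
            return false;  // read error: stop instead of looping forever
        }
        if (bytesRead == 0) {
            return true;  // a successful read of 0 bytes means end of data
        }
        // Append exactly bytesRead bytes; no null terminator is needed.
        out.append(buffer.data(), bytesRead);
    }
}
```

Because the chunk is appended with an explicit length, the buffer needs no extra byte for a terminator, and the success flag is checked before the byte count is used. The chunk size only affects how many reads are made, not the final contents of the string.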