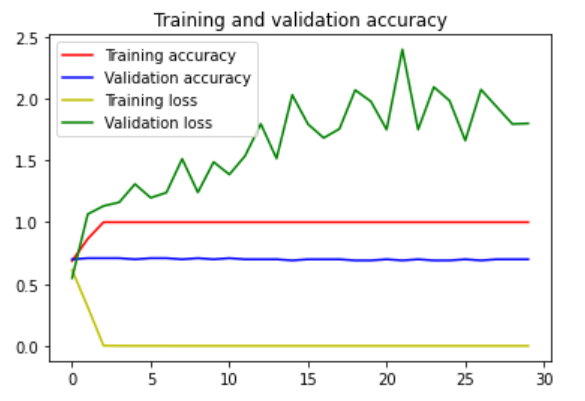

After training, I noticed (as shown in the plot above) that the validation loss is increasing. Is this normal? Also, it is above one.

Below is my code:

import tensorflow as tf
import matplotlib.pyplot as plt
from sklearn.utils import shuffle

# Data loading omitted (it defines class_image, class_label, IMAGE_SIZE_X and IMAGE_SIZE_Y)

# Shuffle images and labels together
class_image, class_label = shuffle(class_image, class_label, random_state=0)

# ResNet50 trained from scratch (weights=None) with a 2-class softmax head
inputs = tf.keras.layers.Input(shape=(IMAGE_SIZE_X, IMAGE_SIZE_Y, 3), name="input_image")
x = tf.keras.applications.resnet50.preprocess_input(inputs)
base_model = tf.keras.applications.ResNet50(input_tensor=x, weights=None, include_top=False,
                                            input_shape=(IMAGE_SIZE_X, IMAGE_SIZE_Y, 3))
x = base_model.output
x = tf.keras.layers.GlobalAveragePooling2D(name="avg_pool")(x)
x = tf.keras.layers.Dense(2, activation='softmax', name="predications")(x)
model = tf.keras.models.Model(inputs=base_model.input, outputs=x)

base_learning_rate = 0.0001
loss = tf.keras.losses.SparseCategoricalCrossentropy()
model.compile(optimizer=tf.keras.optimizers.Adam(learning_rate=base_learning_rate),
              loss=loss,
              metrics=['accuracy'])

# Keras holds out the last 20% of the samples for validation
history = model.fit(x=class_image,
                    y=class_label,
                    epochs=30,
                    batch_size=1,
                    validation_split=0.2)

# Evaluate: plot accuracy and loss for training and validation
acc = history.history['accuracy']
val_acc = history.history['val_accuracy']
loss = history.history['loss']
val_loss = history.history['val_loss']
epochs = range(len(acc))

plt.plot(epochs, acc, 'r', label='Training accuracy')
plt.plot(epochs, val_acc, 'b', label='Validation accuracy')
plt.plot(epochs, loss, 'y', label='Training loss')
plt.plot(epochs, val_loss, 'g', label='Validation loss')
plt.title('Training and validation accuracy and loss')
plt.legend(loc=0)
plt.show()

CodePudding user response:

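This often happens when a model overfits. You may not have enough training data for such a large model (ResNet50 has over 20 million parameters, and with weights=None you are training all of them from scratch), so the model simply memorizes your dataset instead of learning to generalize. This is also evident from the training loss going straight to zero. A validation loss above one is not a problem in itself: cross-entropy has no upper bound, and it grows quickly when the model is confidently wrong on validation samples. Try a smaller model or more/varied training data.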
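
To make the "above one" part of the question concrete, here is a minimal sketch in plain Python of the per-sample quantity that sparse categorical cross-entropy averages when it receives probabilities: the negative log of the probability assigned to the true class. The probability values and the four-sample "validation set" are made up for illustration and are not taken from the question's data.

```python
import math

def sparse_categorical_crossentropy(true_label, probs):
    """Per-sample loss: negative log of the probability given to the true class."""
    return -math.log(probs[true_label])

# Illustrative two-class softmax outputs for a sample whose true label is 0.
unsure = sparse_categorical_crossentropy(0, [0.5, 0.5])
confident_right = sparse_categorical_crossentropy(0, [0.99, 0.01])
confident_wrong = sparse_categorical_crossentropy(0, [0.01, 0.99])

print(f"unsure:          {unsure:.3f}")
print(f"confident right: {confident_right:.3f}")
print(f"confident wrong: {confident_wrong:.3f}")

# Hypothetical validation set: (true_label, predicted probabilities).
# Three samples are confidently right, one is confidently wrong.
validation = [
    (0, [0.99, 0.01]),
    (1, [0.01, 0.99]),
    (0, [0.99, 0.01]),
    (1, [0.99, 0.01]),  # confidently wrong
]
losses = [sparse_categorical_crossentropy(y, p) for y, p in validation]
mean_loss = sum(losses) / len(losses)
accuracy = sum(p.index(max(p)) == y for y, p in validation) / len(validation)

print(f"accuracy:  {accuracy:.2f}")
print(f"mean loss: {mean_loss:.3f}")
```

In this toy case the accuracy is 0.75 while the mean loss is above one, because a single confidently wrong prediction dominates the average. A model that has memorized its training set tends to become more confident over time, which is one way validation loss can keep rising while training loss heads to zero.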