I have three CSV files in C:\temp and am trying to combine them into a single file.

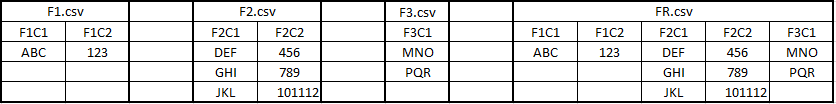

F1.csv, F2.csv, and F3.csv all have unique headers and differing numbers of rows and columns. Sample contents of each file are shown below.

F1.csv

F1C1 F1C2

ABC 123

F2.csv

F2C1 F2C2

DEF 456

GHI 789

JKL 101112

F3.csv

F3C1

MNO

PQR

I want the resulting CSV file, FR.csv, to look like this:

| F1C1 | F1C2 | F2C1 | F2C2 | F3C1 |
| --- | --- | --- | --- | --- |
| ABC | 123 | DEF | 456 | MNO |
| | | GHI | 789 | PQR |
| | | JKL | 101112 | |

I tried running the script below, but the resulting file contains all of the output in a single column.

Get-Content C:\temp\*csv | Add-Content C:\temp\FinalResult.csv

CodePudding user response:

The following solutions assume that Get-ChildItem *.csv enumerates the files to merge, in the desired order (which works with input files F1.csv, F2.csv, F3.csv in the current dir).
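If the enumeration order cannot be relied on, for example once a name such as F10.csv appears (which sorts before F2.csv in a plain string sort), an explicit natural sort is one option. This is a minimal sketch with made-up file names; the padding width of 10 digits is an arbitrary assumption.

```powershell
# Hypothetical file names; F10.csv shows where plain string sorting goes wrong.
$names = 'F10.csv', 'F2.csv', 'F1.csv'

# Natural sort: left-pad every run of digits with zeros so that 2 sorts before 10.
$sorted = $names | Sort-Object {
  [regex]::Replace($_, '\d+', { $args[0].Value.PadLeft(10, '0') })
}
$sorted   # F1.csv, F2.csv, F10.csv

# Applied to real files, the same sort key could be computed from $_.Name, e.g.:
#   Get-ChildItem *.csv -Exclude FR.csv | Sort-Object { [regex]::Replace($_.Name, '\d+', { $args[0].Value.PadLeft(10, '0') }) }
```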

Plain-text solution, using .NET APIs, System.IO.StreamReader and System.IO.StreamWriter:

This solution performs much better than the OO solution below, but the latter gives you more flexibility. Input files without a Unicode BOM are assumed to be UTF-8-encoded, and the output is saved to a BOM-less UTF-8 file named FR.csv in the current dir. (The APIs used do allow you to specify different encodings, if needed.)
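As a rough illustration of specifying encodings, both classes have constructor overloads that accept a System.Text.Encoding instance. The sketch below assumes PowerShell 5 or later (for the ::new() syntax); the temp folder, the file names in.csv and out.csv, and the chosen encodings are made up for the demo.

```powershell
# Sketch only: the temp folder and file names are hypothetical.
$dir = Join-Path ([System.IO.Path]::GetTempPath()) ([System.IO.Path]::GetRandomFileName())
$null = New-Item -ItemType Directory -Path $dir
$inPath  = Join-Path $dir 'in.csv'
$outPath = Join-Path $dir 'out.csv'

# Create a UTF-16LE ("Unicode") input file to read back.
[System.IO.File]::WriteAllText($inPath, "F1C1,F1C2`r`nABC,123`r`n", [System.Text.Encoding]::Unicode)

# Reader with an explicit encoding: StreamReader(path, encoding).
$reader = [System.IO.StreamReader]::new($inPath, [System.Text.Encoding]::Unicode)

# Writer with an explicit encoding: StreamWriter(path, append, encoding).
# UTF8Encoding($true) emits a BOM; $false for 'append' overwrites an existing file.
$writer = [System.IO.StreamWriter]::new($outPath, $false, [System.Text.UTF8Encoding]::new($true))

try {
  # Copy line by line; compare against $null so that empty lines are not mistaken for end of file.
  while ($null -ne ($line = $reader.ReadLine())) { $writer.WriteLine($line) }
}
finally {
  $reader.Close()
  $writer.Close()
}
```

Wrapping the copy loop in try / finally is a design choice of this sketch, so that both files are closed even if reading or writing fails.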

$outFile = 'FR.csv'

# IMPORTANT: Always use *full* paths with .NET APIs.
# Writer for the output file.
$writer = [System.IO.StreamWriter] (Join-Path $Pwd.ProviderPath $outFile)
# Readers for all input files.
$readers = [System.IO.StreamReader[]] (Get-ChildItem *.csv -Exclude $outFile).FullName

# Read all files in batches of corresponding lines, join the
# lines of each batch with ",", and save to the output file.
$isHeader = $true
while ($readers.EndOfStream -contains $false) {
  if ($isHeader) {
    # First batch: the header lines, which also determine each file's column count.
    $headerLines = $readers.ReadLine()
    $colCounts = $headerLines.ForEach({ ($_ -split ',').Count })
    $writer.WriteLine($headerLines -join ',')
    $isHeader = $false
  } else {
    $i = 0
    $lines = $readers.ForEach({
      if ($line = $_.ReadLine()) { $line }
      # Reader exhausted: emit enough separators to keep the columns aligned.
      else { ',' * ($colCounts[$i] - 1) }
      # Advance to the next file's column count.
      ++$i
    })
    $writer.WriteLine($lines -join ',')
  }
}
$writer.Close()
$readers.Close()
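The padding expression in the else branch can be checked in isolation. The sketch below hard-codes the column counts that the question's sample headers would yield (2, 2, and 1) and rebuilds the third data row, where only F2.csv still has a line to contribute.

```powershell
# Column counts as they would be derived from the headers F1C1,F1C2 / F2C1,F2C2 / F3C1.
$colCounts = 2, 2, 1

# Third data row of the sample: F1.csv and F3.csv are exhausted, F2.csv is not.
$lines = (',' * ($colCounts[0] - 1)), 'JKL,101112', (',' * ($colCounts[2] - 1))

# A file with N columns contributes N - 1 separators, i.e. N empty fields.
$row = $lines -join ','
$row   # → ,,JKL,101112,
```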

OO solution, using Import-Csv and ConvertTo-Csv / Export-Csv:

# Read all CSV files into an array of object arrays.
$objectsPerCsv =
  Get-ChildItem *.csv -Exclude FR.csv |
  ForEach-Object {
    , @(Import-Csv $_.FullName)
  }

# Determine the max. row count.
$maxCount = [Linq.Enumerable]::Max($objectsPerCsv.ForEach('Count'))

# Get all column names per CSV.
$colNamesPerCsv = $objectsPerCsv.ForEach({ , $_[0].psobject.Properties.Name })

0..($maxCount-1) | ForEach-Object {
  $combinedProps = [ordered] @{}
  $row = $_; $col = 0
  $objectsPerCsv.ForEach({
    if ($object = $_[$row]) {
      # This CSV has a row at this index: copy its properties.
      foreach ($prop in $object.psobject.Properties) {
        $combinedProps.Add($prop.Name, $prop.Value)
      }
    }
    else {
      # This CSV has run out of rows: add its columns with empty values.
      foreach ($colName in $colNamesPerCsv[$col]) {
        $combinedProps.Add($colName, $null)
      }
    }
    # Advance to the next CSV's column names.
    ++$col
  })
  [pscustomobject] $combinedProps
} | ConvertTo-Csv

Replace ConvertTo-Csv with Export-Csv to export the data to a file; use the -NoTypeInformation parameter and -Encoding as needed; e.g. ... | Export-Csv -NoTypeInformation -Encoding utf8 Merged.csv
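To see what a single merged row looks like on its way through ConvertTo-Csv, the following sketch builds one by hand. It uses the column names and the second data row from the question's sample; no files are read, and the variable $csvLines is introduced only for this illustration.

```powershell
# One merged row, built by hand; column names mirror the question's sample data.
$combinedProps = [ordered] @{}

# F1.csv has no second data row, so its columns get empty values.
foreach ($name in 'F1C1', 'F1C2') { $combinedProps.Add($name, $null) }

# F2.csv and F3.csv both still have data at this row index.
$combinedProps.Add('F2C1', 'GHI')
$combinedProps.Add('F2C2', '789')
$combinedProps.Add('F3C1', 'PQR')

# Convert the ordered hashtable to an object and then to CSV text (header line + one data line).
$csvLines = [pscustomobject] $combinedProps | ConvertTo-Csv -NoTypeInformation
$csvLines
```

Because an ordered hashtable is used, the columns appear in the order in which they were added. Note that .Add() fails on a duplicate key, so this approach relies on the headers being unique across all input files, as they are in the question.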