I need to automate a workflow that runs after an event occurs. I have experience with CRUD applications but not with workflow/batch processing, and I need help designing the system.

Requirement

The workflow involves 5 steps. Each step is a REST call and depends on the previous step. Examples of steps: (VerifyIfUserInSystem, CreateUserIfNeeded, EnrollInOpt1, EnrollInOpt2, ...)

My thought process is to maintain 2 DB Tables

WORKFLOW_STATUS Table which contains columns like (foreign key(referring to primary table), Workflow Status: (NEW, INPROGRESS, FINISHED, FAILED), Completed Step: (STEP1, STEP2,..), Processed Time,..)

EVENT_LOG Table to keep track of events/exceptions for a particular record (foreign key, STEP, ExceptionLog)

Question

#1. Is this a correct approach to orchestrating the system (which is not that complex)?

#2. As the steps involve REST calls, I might have to stop the process when a service is not available and resume it at a later point in time. I am not sure how many retry attempts should be made, or how to track the number of attempts made before marking the record as FAILED. (My guess is to add another column called RETRY_ATTEMPT to the WORKFLOW_STATUS table and set some limit before marking it FAILED.)

#3. Is the EVENT_LOG table a correct design, and what datatype (clob or varchar(2048)) should I use for ExceptionLog? Every step/retry attempt will be inserted as a new record in this table.

#4. How do I reset/restart a FAILED entry after a dependent service is back up?

Please direct me to any blogs/videos/resources if available. Thanks in advance.

CodePudding user response:

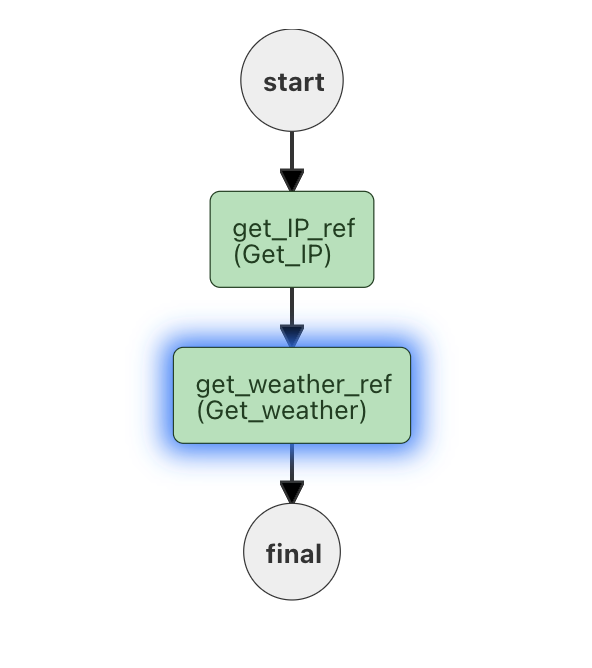

Have you considered using a workflow orchestration engine like Netflix's Conductor?

As an example, here is a workflow with two sequential HTTP requests. The input supplies an IP address (and an AccuWeather API key):

    {
      "ipaddress": "98.11.11.125"
    }

HTTP request 1 looks up the zip code for the IP address.

HTTP request 2 uses the zip code (and the API key) to report the weather.

The output from this workflow is:

    {
      "zipcode": "04043",
      "forecast": "rain"
    }
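To make the dependency between the two requests concrete, here is a minimal Python sketch of the same idea outside Conductor. It is not how Conductor runs the workflow; `locate_zipcode`, `get_weather`, and `run_workflow` are hypothetical names, and the two steps are stubs that return the sample values above instead of calling the real APIs.

```python
from typing import Callable, Dict, List

# A step takes the data gathered so far and returns the new fields it produced.
Step = Callable[[Dict[str, str]], Dict[str, str]]


def locate_zipcode(ctx: Dict[str, str]) -> Dict[str, str]:
    # Stub for HTTP request 1: a real step would send ctx["ipaddress"] to a lookup API.
    _ = ctx["ipaddress"]
    return {"zipcode": "04043"}


def get_weather(ctx: Dict[str, str]) -> Dict[str, str]:
    # Stub for HTTP request 2: it depends on the zipcode produced by the previous step.
    _ = ctx["zipcode"]
    return {"forecast": "rain"}


def run_workflow(steps: List[Step], workflow_input: Dict[str, str]) -> Dict[str, str]:
    # Run the steps in order, feeding each one the accumulated outputs of earlier steps.
    ctx = dict(workflow_input)
    for step in steps:
        ctx.update(step(ctx))
    return ctx


result = run_workflow([locate_zipcode, get_weather], {"ipaddress": "98.11.11.125"})
```

Swapping the order of the two steps raises a `KeyError`, because `get_weather` needs the `zipcode` that only `locate_zipcode` provides. That is the same ordering constraint the 5-step workflow in the question has.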

Your questions:

- I'd use an orchestration tool like Conductor.

- Each of these tasks (defined in Conductor) has retry logic built in. How you configure it will depend on the response times you expect. Since the 2 APIs I'm calling here are public (and relatively fast), I don't wait very long between retries:

    "retryCount": 3,
    "retryLogic": "FIXED",
    "retryDelaySeconds": 5,

Inside the connection, there are more parameters you can tweak:

    "connectionTimeOut": 1600,
    "readTimeOut": 1600

There is also exponential retry logic if desired.

- The event log is stored in Elasticsearch.

- You can build error pathways for all your workflows.
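To illustrate the difference between a fixed and an exponential retry delay, here is a small Python sketch. It is not Conductor's implementation: `retry_delay` and `call_with_retries` are hypothetical helpers, and the `"EXPONENTIAL"` label and the doubling factor are assumptions made for the example. The defaults mirror the `retryCount` and `retryDelaySeconds` values shown above.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def retry_delay(attempt: int, base_seconds: float, logic: str = "FIXED") -> float:
    """Seconds to wait before retry number `attempt` (1-based)."""
    if logic == "FIXED":
        return base_seconds
    if logic == "EXPONENTIAL":
        # Assumed policy: double the delay on every retry (5, 10, 20, ...).
        return base_seconds * (2 ** (attempt - 1))
    raise ValueError(f"unknown retry logic: {logic}")


def call_with_retries(
    task: Callable[[], T],
    retry_count: int = 3,
    base_seconds: float = 5,
    logic: str = "FIXED",
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `task` once, then retry up to `retry_count` times if it raises."""
    last_error: Optional[Exception] = None
    for attempt in range(retry_count + 1):  # first try plus the retries
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt < retry_count:
                sleep(retry_delay(attempt + 1, base_seconds, logic))
    assert last_error is not None
    raise last_error
```

Passing `sleep` as a parameter keeps the helper testable without real waiting. Once the retries are exhausted the last exception propagates, which is the point where a workflow record would be marked FAILED.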

I have this workflow up and running in the Conductor Playground under the name "Stack_overflow_sequential_http". Create a free account, click "run workflow", select "Stack_overflow_sequential_http", and use the JSON input above to see it in action.

The get_weather connection is a very slow API, so it may fail a few times before succeeding. Copy the workflow and play with the timeout values to improve the success rate.
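If you would rather stay with the two tables from the question, the retry counter and the reset of FAILED entries could look roughly like this. It is only a sketch under assumptions: it uses an in-memory SQLite database in place of your real one, the column names follow the question, and `MAX_RETRIES`, `record_failure`, and `reset_failed` are hypothetical names.

```python
import sqlite3

MAX_RETRIES = 3  # assumed limit before a record is marked FAILED

conn = sqlite3.connect(":memory:")
conn.execute(
    """
    CREATE TABLE workflow_status (
        record_id      INTEGER PRIMARY KEY,  -- stands in for the foreign key
        status         TEXT NOT NULL DEFAULT 'NEW',
        completed_step TEXT,
        retry_attempt  INTEGER NOT NULL DEFAULT 0
    )
    """
)


def record_failure(conn: sqlite3.Connection, record_id: int) -> None:
    # Count the failed attempt, then mark the record FAILED once the limit is reached.
    conn.execute(
        "UPDATE workflow_status SET retry_attempt = retry_attempt + 1 WHERE record_id = ?",
        (record_id,),
    )
    conn.execute(
        "UPDATE workflow_status "
        "SET status = CASE WHEN retry_attempt >= ? THEN 'FAILED' ELSE 'INPROGRESS' END "
        "WHERE record_id = ?",
        (MAX_RETRIES, record_id),
    )
    conn.commit()


def reset_failed(conn: sqlite3.Connection) -> int:
    # Re-queue FAILED records once the dependent service is back up.
    # completed_step is left untouched so processing resumes at the next step.
    cur = conn.execute(
        "UPDATE workflow_status SET status = 'INPROGRESS', retry_attempt = 0 "
        "WHERE status = 'FAILED'"
    )
    conn.commit()
    return cur.rowcount
```

Because `completed_step` survives the reset, a scheduled job can pick up INPROGRESS records and continue from the step after the last completed one instead of starting over.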