I'm trying to build a simple word generator, but I'm having trouble with sliding windows.

Here is my current code:

```python
from glob import glob

import tensorflow as tf

files = glob("transfdata/*")  # a list of text files

dataset = tf.data.TextLineDataset(files)  # each file contains a single line
dataset = dataset.map(lambda x: tf.strings.split(x))  # tokenize on whitespace
dataset = dataset.window(6, 1, 1, drop_remainder=False)  # size=6, shift=1, stride=1
```

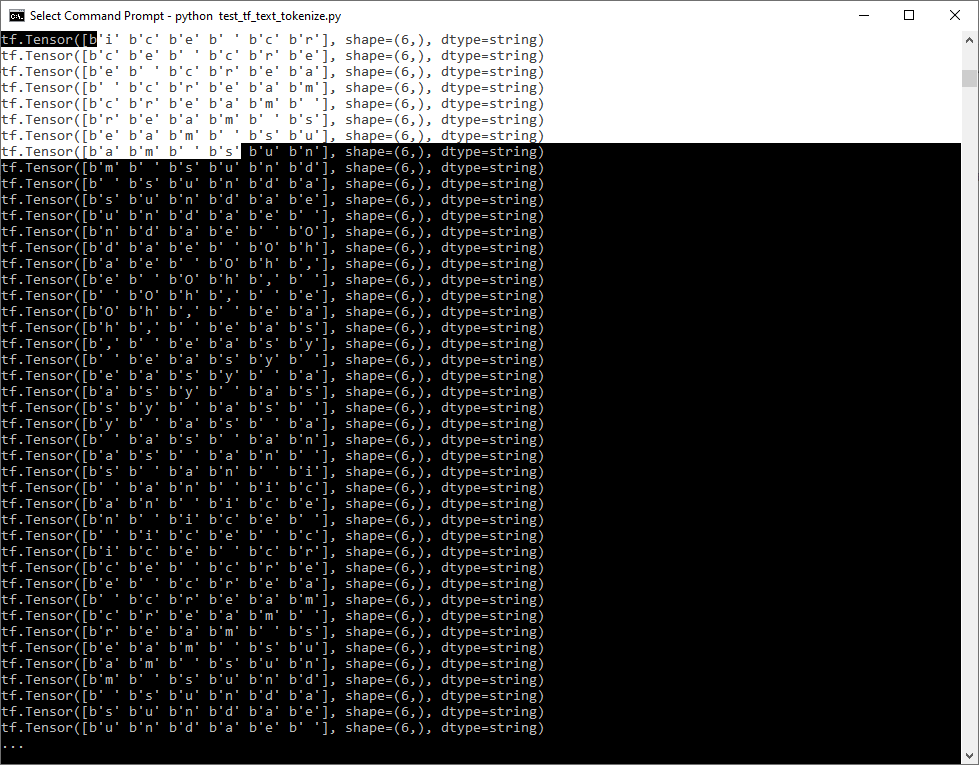

This doesn't do what I expected: `window` operates on dataset elements, i.e. whole lines, so each window groups 6 tokenized texts (which is the normal behavior). What I want is a sliding window over the tokens inside each text.

I did find a suboptimal solution. The code below works, but because `flat_map` merges the tokens of all documents into a single stream, the sliding window runs across document boundaries. From a methodological point of view it shouldn't (different authors, topics, etc.). Is there any way to apply a window to a tensor rather than to a dataset?

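```python
from glob import glob

import tensorflow as tf

files = glob("transfdata/*")

dataset = tf.data.TextLineDataset(files)
dataset = dataset.map(lambda x: tf.strings.split(x))  # tokenize each line

# Flatten every document into one stream of tokens, then window over that stream.
# Windows can therefore span the end of one document and the start of the next.
t = dataset.flat_map(lambda x: tf.data.Dataset.from_tensor_slices(x))
t = t.window(6, 1, 1, drop_remainder=False)
```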
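One way to keep windows inside each document is to move the `window` call into the `flat_map`, so each tokenized line is windowed on its own before the per-document streams are concatenated. This is a minimal sketch, assuming fixed-length windows are wanted (`drop_remainder=True`) and using a small in-memory dataset as a stand-in for `TextLineDataset(files)`; `per_document_windows` is a hypothetical helper name. Since `window` produces a dataset of datasets, each window is turned back into a single tensor with `batch(size)`.

```python
import tensorflow as tf


def per_document_windows(tokenized, size=6, shift=1):
    """Hypothetical helper: window each document's tokens separately."""

    def windows_of(tokens):
        return (
            tf.data.Dataset.from_tensor_slices(tokens)  # one element per token
            .window(size, shift=shift, stride=1, drop_remainder=True)
            # each window is a small Dataset; batch it into one [size] tensor
            .flat_map(lambda w: w.batch(size))
        )

    # flat_map concatenates the per-document window streams, so no window
    # ever mixes tokens from two documents
    return tokenized.flat_map(windows_of)


# Stand-in for tf.data.TextLineDataset(files): one document per element
docs = tf.data.Dataset.from_tensor_slices(["a b c d e f g", "h i j k l m"])
tokenized = docs.map(lambda x: tf.strings.split(x))
windows = per_document_windows(tokenized)

for w in windows:
    print([tok.decode() for tok in w.numpy()])
```

This yields three windows: `a` to `f` and `b` to `g` from the first document, and `h` to `m` from the second; no window mixes `g` with `h`. With `drop_remainder=False`, as in the original code, each document would also emit shorter trailing windows (5, 4, ... tokens), which would need padding before batching.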

Any help would be appreciated, thanks!
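To apply the window to a tensor instead of a dataset, `tf.signal.frame` slides a fixed-size window along the last axis of a tensor and returns all windows stacked as a 2-D tensor. It is documented mainly for audio signals, so this sketch assumes your TensorFlow version also accepts a `tf.string` token tensor; check that before relying on it. `frame_tokens` is a hypothetical helper name, and the in-memory dataset stands in for `TextLineDataset(files)`.

```python
import tensorflow as tf


def frame_tokens(tokens, size=6, step=1):
    # Slide a window of `size` tokens along the 1-D token tensor.
    # Output shape is [num_windows, size]; num_windows is 0 when the
    # document has fewer than `size` tokens (pad_end defaults to False).
    return tf.signal.frame(tokens, frame_length=size, frame_step=step)


# Stand-in for tf.data.TextLineDataset(files): one document per element
docs = tf.data.Dataset.from_tensor_slices(["a b c d e f g", "h i j", "k l m n o p"])

windows = (
    docs.map(lambda x: tf.strings.split(x))  # line -> 1-D tensor of tokens
    .map(frame_tokens)  # tokens -> [num_windows, 6], computed per document
    .flat_map(lambda f: tf.data.Dataset.from_tensor_slices(f))  # one window per element
)

for w in windows:
    print([tok.decode() for tok in w.numpy()])
```

Documents shorter than 6 tokens (`"h i j"` here) produce no windows at all; passing `pad_end=True` with a string `pad_value` such as `""` should keep them as padded windows instead.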
