I'm a novice at OpenCV. Currently I'm following

and the following Python code:

from __future__ import print_function

import cv2
import numpy as np

MAX_FEATURES = 500
GOOD_MATCH_PERCENT = 0.15


def alignImages(im1, im2):
    # Convert images to grayscale
    im1Gray = cv2.cvtColor(im1, cv2.COLOR_BGR2GRAY)
    im2Gray = cv2.cvtColor(im2, cv2.COLOR_BGR2GRAY)

    # Detect ORB features and compute descriptors.
    orb = cv2.ORB_create(MAX_FEATURES)
    keypoints1, descriptors1 = orb.detectAndCompute(im1Gray, None)
    keypoints2, descriptors2 = orb.detectAndCompute(im2Gray, None)

    # Match features.
    matcher = cv2.DescriptorMatcher_create(
        cv2.DESCRIPTOR_MATCHER_BRUTEFORCE_HAMMING)
    matches = list(matcher.match(descriptors1, descriptors2, None))

    # Sort matches by score
    matches.sort(key=lambda x: x.distance, reverse=False)

    # Remove not so good matches
    numGoodMatches = int(len(matches) * GOOD_MATCH_PERCENT)
    matches = matches[:numGoodMatches]

    # Draw top matches
    imMatches = cv2.drawMatches(im1, keypoints1, im2, keypoints2, matches, None)
    cv2.imwrite("matches.jpg", imMatches)

    # Extract location of good matches
    points1 = np.zeros((len(matches), 2), dtype=np.float32)
    points2 = np.zeros((len(matches), 2), dtype=np.float32)

    for i, match in enumerate(matches):
        points1[i, :] = keypoints1[match.queryIdx].pt
        points2[i, :] = keypoints2[match.trainIdx].pt

    # Find homography
    h, mask = cv2.findHomography(points1, points2, cv2.RANSAC)

    # Use homography
    height, width, channels = im2.shape
    im1Reg = cv2.warpPerspective(im1, h, (width, height))

    return im1Reg, h


if __name__ == '__main__':
    # Read reference image
    refFilename = "template.jpg"
    print("Reading reference image : ", refFilename)
    imReference = cv2.imread(refFilename, cv2.IMREAD_COLOR)

    # Read image to be aligned
    imFilename = "test_image.jpg"
    print("Reading image to align : ", imFilename)
    im = cv2.imread(imFilename, cv2.IMREAD_COLOR)

    print("Aligning images ...")
    # Registered image will be stored in imReg.
    # The estimated homography will be stored in h.
    imReg, h = alignImages(im, imReference)

    # Write aligned image to disk.
    outFilename = "aligned.jpg"
    print("Saving aligned image : ", outFilename)
    cv2.imwrite(outFilename, imReg)

    # Print estimated homography
    print("Estimated homography : \n", h)

I get the following results after running the script:

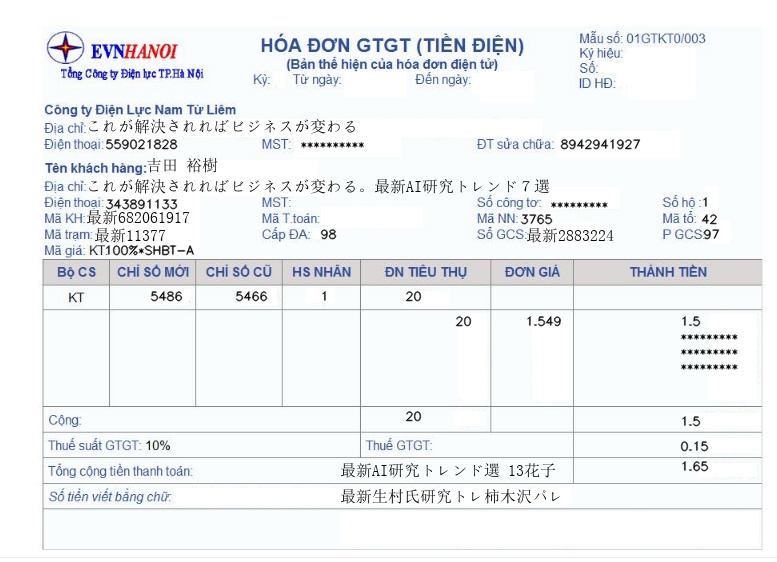

matches.jpg:

UPDATE:

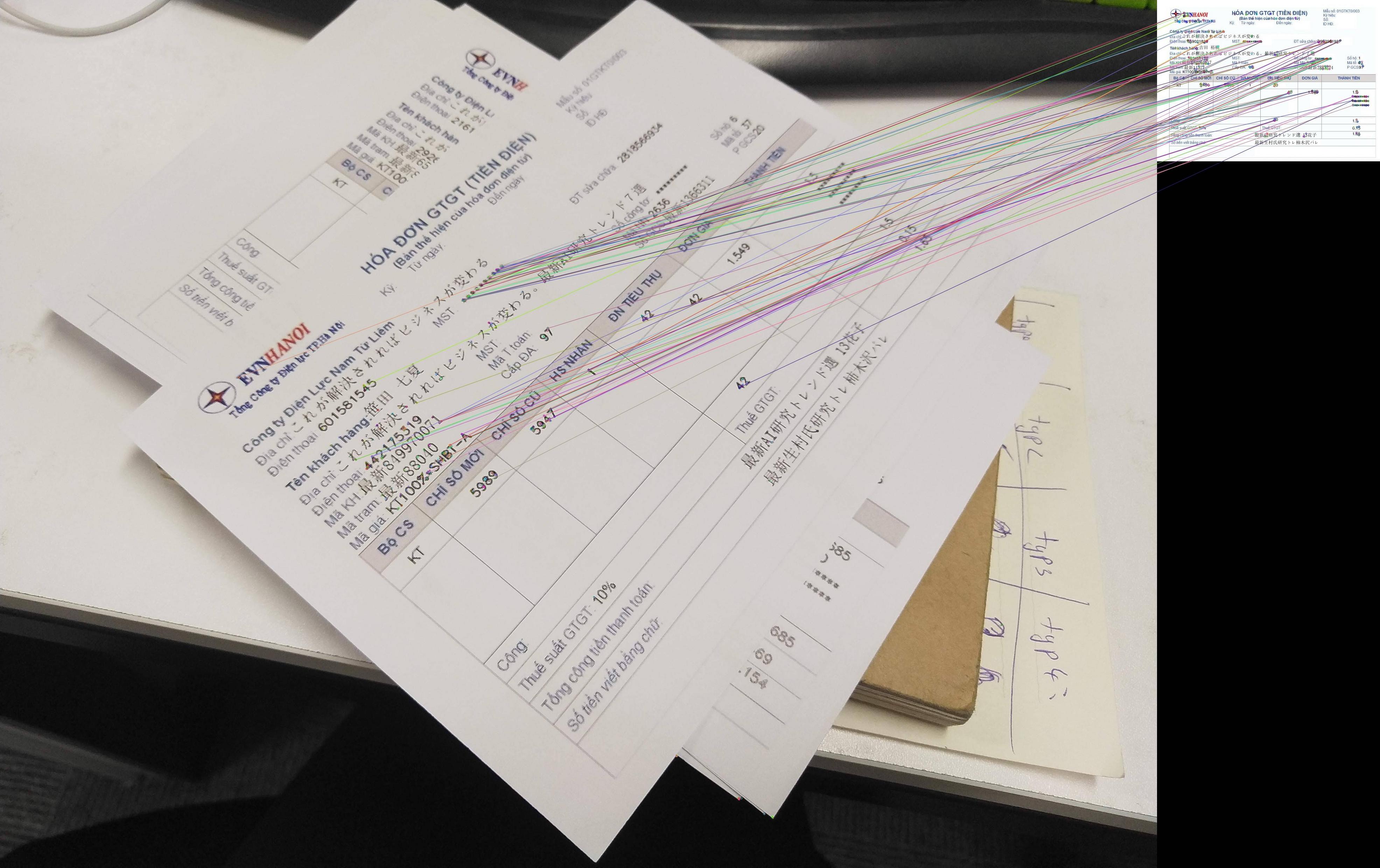

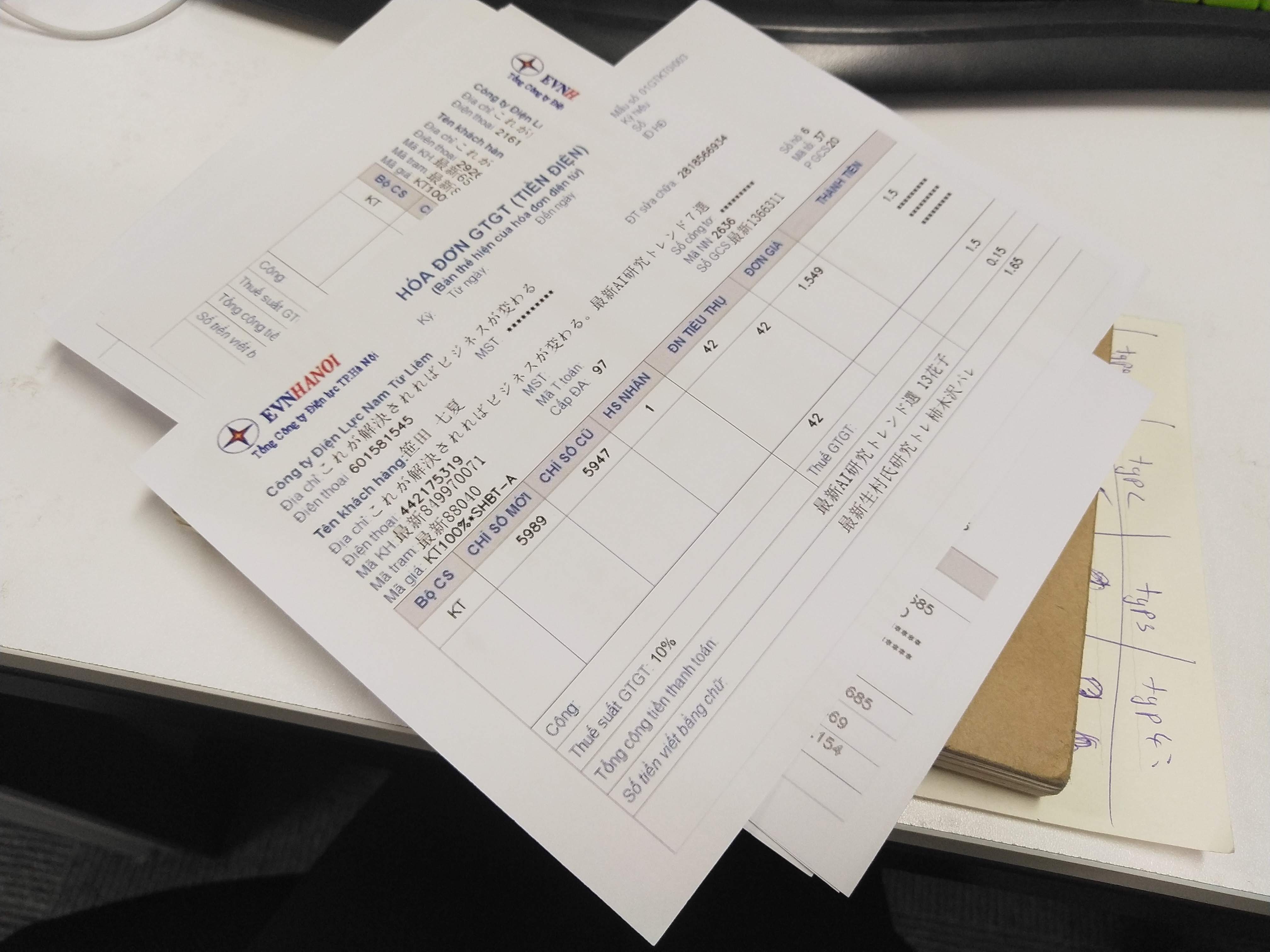

I was able to get the image when I increased the number of ORB features to 2000.

But the homography is still not rotating the image. How can I rotate the image to the same position as the template?

CodePudding user response:

There are two formulations for finding a homography (forward and backward), but if you have already found the homography, you can apply it without OpenCV as follows:

import numpy as np
from scipy.interpolate import griddata

# src_image (an H x W x 3 array) and homography (a 3 x 3 matrix) are assumed to be defined already

# creating the homogeneous coordinates
src_h, src_w, _ = src_image.shape
values = src_image.reshape((-1, 3), order='F')
yy, xx = np.meshgrid(np.arange(src_h), np.arange(src_w))
input_flat = np.concatenate((xx.reshape((1, -1)), yy.reshape((1, -1)), np.ones_like(xx.reshape((1, -1)))), axis=0)

# applying the homography and converting back from homogeneous to Cartesian coordinates
points = np.matmul(homography, input_flat)
points_homogeneous = points[0:2, :] / points[2, :]

# interpolating the result to nicely fit the grid coordinates
dst_image_shape = [400, 400]  # could be any number here
yy, xx = np.meshgrid(np.arange(dst_image_shape[1]), np.arange(dst_image_shape[0]))
src_image_warp = griddata(np.transpose(points_homogeneous), values, (yy, xx), method='linear')

# numerical rounding
src_image_warp[np.isnan(src_image_warp)] = 0
src_image_warp[src_image_warp > 255] = 255
src_image_warp = np.uint8(src_image_warp)

Note that this code assumes a 3-channel image (see the reshape to (-1, 3)); for a single-channel image, the shape unpacking and the reshape have to be adjusted. In addition, this could be made to run faster by interpolating only the relevant coordinates, since the interpolation is the most time-consuming operation.
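For comparison with the forward-mapping code above, here is a rough sketch of the backward form: every destination pixel is mapped through the inverse homography and the nearest source pixel is copied. The function name warp_backward, the nearest-neighbour sampling and the toy translation matrix are my own assumptions for illustration, not something taken from OpenCV.

```python
import numpy as np


def warp_backward(src, homography, dst_shape):
    """Warp src by a 3x3 homography using backward mapping (nearest neighbour)."""
    dst_h, dst_w = dst_shape
    src_h, src_w = src.shape[:2]

    # Homogeneous (x, y, 1) coordinates of every destination pixel, row-major.
    ys, xs = np.mgrid[0:dst_h, 0:dst_w]
    dst_pts = np.stack([xs.ravel(), ys.ravel(), np.ones(dst_h * dst_w)])

    # Map destination pixels back into the source image.
    src_pts = np.linalg.inv(homography) @ dst_pts
    w = src_pts[2]
    finite = np.abs(w) > 1e-12          # skip points mapped to infinity
    safe_w = np.where(finite, w, 1.0)
    sx = np.rint(src_pts[0] / safe_w).astype(np.int64)
    sy = np.rint(src_pts[1] / safe_w).astype(np.int64)

    # Copy only the pixels whose source location lies inside the image.
    inside = finite & (sx >= 0) & (sx < src_w) & (sy >= 0) & (sy < src_h)
    flat = np.zeros((dst_h * dst_w,) + src.shape[2:], dtype=src.dtype)
    flat[inside] = src[sy[inside], sx[inside]]
    return flat.reshape((dst_h, dst_w) + src.shape[2:])


# Hypothetical example: a pure translation by (+2, +1) pixels.
shift = np.array([[1.0, 0.0, 2.0],
                  [0.0, 1.0, 1.0],
                  [0.0, 0.0, 1.0]])
src_image = np.arange(1, 21, dtype=np.uint8).reshape(4, 5)
warped = warp_backward(src_image, shift, (4, 5))
```

Because each destination pixel is visited exactly once, this form leaves no holes and needs no scattered-data interpolation, which is why it tends to be much cheaper than the griddata approach.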

With OpenCV this can be done by:

import cv2

image_dst = cv2.warpPerspective(image_src, homography, size) # size is a tuple (width, height) of the destination image

Read more on homographies and the opencv implementation here.

Finding the homography

The homography can be found without using OpenCV, but that requires knowledge of linear algebra and the explanation is a bit lengthy; if needed, I will post it as an edit. For any practical case, however, the homography can be found using OpenCV as follows:

homography, status = cv2.findHomography(pts_src, pts_dst)

where pts_src are the coordinates in the original image and pts_dst are their matching locations in the destination image. Since you already found the point pairs, this will give you the homography (OpenCV optimizes the homography for minimal distortion in the backward operation, which is the correct way to perform homography computations).
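For the curious, the linear-algebra route mentioned above can be sketched with the direct linear transform (DLT): each point pair contributes two linear equations in the nine homography entries, and the solution is the null vector of the stacked system, taken from an SVD. This is only a minimal sketch under my own assumptions (noise-free points, no coordinate normalization, no RANSAC); the names find_homography_dlt and apply_homography are made up for illustration, and this is not how cv2.findHomography is implemented internally.

```python
import numpy as np


def find_homography_dlt(pts_src, pts_dst):
    """Estimate a 3x3 homography from >= 4 point pairs with the basic DLT."""
    pts_src = np.asarray(pts_src, dtype=np.float64)
    pts_dst = np.asarray(pts_dst, dtype=np.float64)
    rows = []
    for (x, y), (u, v) in zip(pts_src, pts_dst):
        # Two equations per pair, derived from u = (h1 . p) / (h3 . p)
        # and v = (h2 . p) / (h3 . p) with p = (x, y, 1).
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The right singular vector with the smallest singular value spans
    # the (approximate) null space of the system.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # fix the scale; assumes h[2, 2] is not zero


def apply_homography(h, pts):
    """Map an (N, 2) array of points through a 3x3 homography."""
    pts = np.asarray(pts, dtype=np.float64)
    mapped = np.hstack([pts, np.ones((pts.shape[0], 1))]) @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical ground truth used to generate matching point pairs.
h_true = np.array([[1.2, 0.1, 5.0],
                   [-0.2, 0.9, 3.0],
                   [0.001, 0.002, 1.0]])
pts_src = np.array([[0, 0], [100, 0], [100, 50], [0, 50], [30, 20]], dtype=np.float64)
pts_dst = apply_homography(h_true, pts_src)
h_est = find_homography_dlt(pts_src, pts_dst)
```

With real, noisy matches you would still want outlier rejection on top of this, which is what the cv2.RANSAC flag in the question's code asks OpenCV to do.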

CodePudding user response:

You have a homography h calculated from findHomography and you can use warpPerspective to transform the template to have the same perspective as the photo.

Now you just need to invert the homography, and apply it to the photo instead of the template.

Either use np.linalg.inv for that, or pass the WARP_INVERSE_MAP flag to warpPerspective instead.
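A small NumPy-only sketch of what inverting does: if h maps points one way, np.linalg.inv(h) maps them back. The matrix below is a made-up 90 degree rotation plus shift, and apply_homography is a helper I wrote for the illustration; the two warpPerspective calls in the comments are the OpenCV equivalents as I understand them, with image, width and height as placeholder names.

```python
import numpy as np


def apply_homography(h, pts):
    """Map an (N, 2) array of points through a 3x3 homography."""
    pts = np.asarray(pts, dtype=np.float64)
    mapped = np.hstack([pts, np.ones((pts.shape[0], 1))]) @ h.T
    return mapped[:, :2] / mapped[:, 2:3]


# Hypothetical homography: rotate by 90 degrees, then shift x by 100.
h = np.array([[0.0, -1.0, 100.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
h_inv = np.linalg.inv(h)

pts = np.array([[10.0, 20.0], [30.0, 40.0]])
forward = apply_homography(h, pts)             # points after applying h
round_trip = apply_homography(h_inv, forward)  # back at the original points

# OpenCV equivalents (not executed here; image, width, height are placeholders):
#   cv2.warpPerspective(image, np.linalg.inv(h), (width, height))
#   cv2.warpPerspective(image, h, (width, height),
#                       flags=cv2.INTER_LINEAR | cv2.WARP_INVERSE_MAP)
```

The second form avoids computing the inverse yourself; WARP_INVERSE_MAP tells warpPerspective to treat the matrix as the destination-to-source mapping.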