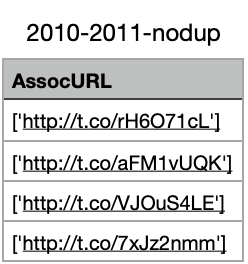

I have several GB of CSV files where values in one of the columns look like this:

Which is a consequence of this:

urls.append(re.findall(r'http\S+', hashtags_rem))

...

merger = {'Content': clean, 'AttrURL': urls}

cleandf = pd.DataFrame(merger)

...

df.insert(3, "AssocURL", cleandf['AttrURL'])

It took me a while to generate these files and, looking back, I'd certainly write this part differently, but doing it again is a very time-consuming and simply unnecessary endeavour.

Is there another efficient way to remove [' and '] from this column using pandas or csv?

CodePudding user response:

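You can slice the square brackets and quotes off each string in the column. Note that calling apply on the whole DataFrame passes each column to the function as a Series, so the slice would select rows rather than characters; apply it to the column through the .str accessor instead. This assumes every value has the form ['...']. It should be something like this:

df['AssocURL'] = df['AssocURL'].str[2:-2]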

CodePudding user response:

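Not a very attractive answer, but how about just using .str.replace? Pass regex=False so that "[" is treated as a literal character, and assign the result back to the column:

df['AssocURL'] = df['AssocURL'].str.replace("'", "", regex=False).str.replace("[", "", regex=False).str.replace("]", "", regex=False)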
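The chained calls can also be collapsed into a single pass with .str.strip, which removes any run of the given characters from both ends of each string. This is only a minimal sketch, assuming the column holds strings such as "['http://a.example']"; the sample values are made up for illustration.

```python
import pandas as pd

# Hypothetical sample data standing in for the real column.
df = pd.DataFrame(
    {"AssocURL": ["['http://a.example']", "['http://b.example']", "[]"]}
)

# Strip any leading/trailing run of the characters [, ] and ' in one pass.
df["AssocURL"] = df["AssocURL"].str.strip("[]'")

print(df["AssocURL"].tolist())
```

Unlike replace, strip only touches the ends of the string, so brackets or quotes inside a URL are left alone. A cell with several URLs would keep its inner quotes and commas, and a URL that itself ends in ' or ] would lose that character.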

CodePudding user response:

From the question it's unclear whether the column holds strings or holds lists that each contain a single string. re.findall returns a list, so the DataFrame built in memory has the second form (once written to CSV and read back, the values are plain strings). If it is the second form, e.g.,

df = pd.DataFrame({'AssocURL': [['link1'], ['link2']]})

#   AssocURL
# 0  [link1]
# 1  [link2]

You can use explode:

df['AssocURL'] = df['AssocURL'].explode()

#   AssocURL
# 0    link1
# 1    link2
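If the column was read back from CSV, the values are strings that merely look like lists, and explode will leave them unchanged. One possible approach, sketched here under the assumption that every cell is a valid Python list literal, is to parse the strings with ast.literal_eval first. The sample values and the choice of a space as separator are assumptions for illustration.

```python
import ast
import pandas as pd

# Hypothetical sample: what the column looks like after a CSV round trip.
df = pd.DataFrame(
    {
        "AssocURL": [
            "['http://a.example']",
            "[]",
            "['http://b.example', 'http://c.example']",
        ]
    }
)

# Parse each string back into a real Python list.
parsed = df["AssocURL"].apply(ast.literal_eval)

# Join the URLs of each list with a space; empty lists become empty strings.
df["AssocURL"] = parsed.apply(" ".join)

print(df["AssocURL"].tolist())
```

This is slower than plain string slicing, but it also copes with empty lists and with cells that contain more than one URL.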

CodePudding user response:

Super simple, just do:

df['AssocURL'] = df['AssocURL'].str.replace("['", '', regex=False).str.replace("']", '', regex=False)
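Since the files are several GB, it may help to apply the fix chunk by chunk rather than loading a whole file at once. The following is a rough sketch, not a tuned solution: the in-memory buffers stand in for real input and output paths, the chunk size is arbitrary, and .str.strip is used as one possible cleanup step.

```python
import io
import pandas as pd

# Hypothetical stand-in for one large CSV file; use real file paths in practice.
src = io.StringIO(
    "Content,AssocURL\n"
    "foo,['http://a.example']\n"
    "bar,['http://b.example']\n"
    "baz,[]\n"
)
dst = io.StringIO()

# Read a few rows at a time (chunksize=2 only for the demo; use e.g. 100_000).
reader = pd.read_csv(src, chunksize=2, dtype=str, keep_default_na=False)
for i, chunk in enumerate(reader):
    # Remove the list punctuation from both ends of each value.
    chunk["AssocURL"] = chunk["AssocURL"].str.strip("[]'")
    # Write the header only once, with the first chunk.
    chunk.to_csv(dst, index=False, header=(i == 0))

result = dst.getvalue()
print(result)
```

Reading with dtype=str and keep_default_na=False keeps empty cells as empty strings, so the other columns are written back unchanged.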