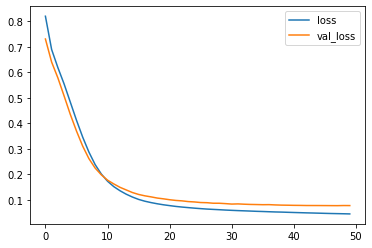

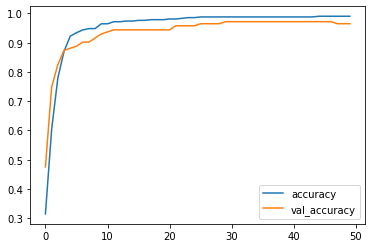

I am running a Keras model on the Breast Cancer dataset. I got around 96% accuracy with it, but the confusion matrix is completely off. Here are the graphs:

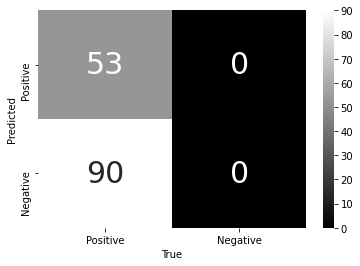

And here is my confusion matrix:

The matrix says that I have no true negatives and that they are actually false negatives, when I believe it's the reverse. I also noticed that when I add up the correct predictions and divide by the size of the test set, the result doesn't match the accuracy the model reports. Here is the whole code:

```python
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
import seaborn as sns
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import StandardScaler
from tensorflow import keras
from tensorflow.math import confusion_matrix
from keras import Sequential
from keras.layers import Dense

breast = load_breast_cancer()
X = breast.data
y = breast.target

# Split data into training and testing sets
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0)

# Scale data
sc = StandardScaler()
X_train = sc.fit_transform(X_train)
X_test = sc.fit_transform(X_test)

# Create and fit keras model
model = Sequential()
model.add(Dense(8, activation='relu', input_shape=[X.shape[1]]))
model.add(Dense(4, activation='relu'))
model.add(Dense(1, activation='sigmoid'))
model.compile(optimizer='adam', loss='binary_crossentropy', metrics=['accuracy'])
history = model.fit(X_train, y_train, validation_data=(X_test, y_test), batch_size=16, epochs=50, verbose=1)
history = pd.DataFrame(history.history)

# Display loss visualization
history.loc[:, ['loss', 'val_loss']].plot();
history.loc[:, ['accuracy', 'val_accuracy']].plot();

# Create confusion matrix
y_pred = model.predict(X_test)
conf_matrix = confusion_matrix(y_test, y_pred)
cm = sns.heatmap(conf_matrix, annot=True, cmap='gray', annot_kws={'size': 30})
cm_labels = ['Positive', 'Negative']
cm.set_xlabel('True')
cm.set_xticklabels(cm_labels)
cm.set_ylabel('Predicted')
cm.set_yticklabels(cm_labels);
```

Am I doing something wrong here? Am I missing something?

Answer:

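Check how the values are laid out in the official documentation for `sklearn.metrics.confusion_matrix`; `tf.math.confusion_matrix`, which your code uses, follows the same convention (rows are true labels, columns are predicted labels). For a binary problem the cells are organized like this: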

- TN: upper left corner
- FP: upper right corner
- FN: lower left corner
- TP: lower right corner
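A minimal sketch of that layout, using made-up toy labels rather than the breast cancer test set: for binary labels `{0, 1}`, `ravel()` unpacks the 2×2 matrix in the order TN, FP, FN, TP, and wrapping it in a DataFrame makes the orientation explicit. Note that with this layout the heatmap's x-axis (columns) holds the predictions and the y-axis (rows) the true labels, which is the opposite of the axis titles set in the question's plotting code. Also, in `load_breast_cancer` class 0 is `malignant` and class 1 is `benign`, so row/column 0 is the malignant class.

```python
import pandas as pd
from sklearn.metrics import confusion_matrix

# Toy labels for illustration only (not the question's data).
y_true = [0, 1, 0, 1]
y_pred = [1, 1, 1, 0]

cm = confusion_matrix(y_true, y_pred)

# For binary labels {0, 1}, ravel() yields TN, FP, FN, TP in that order.
tn, fp, fn, tp = cm.ravel()
print(tn, fp, fn, tp)  # 0 2 1 1

# Rows are true labels, columns are predicted labels.
labeled = pd.DataFrame(
    cm,
    index=["true 0", "true 1"],
    columns=["predicted 0", "predicted 1"],
)
print(labeled)
```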

Read that way, your current confusion matrix shows 53 true negatives and 90 false negatives.
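Those two numbers also point at why the matrix disagrees with the reported accuracy: 53 + 90 = 143, which is the whole test set (`train_test_split` holds out 25% of the 569 samples by default, rounded up), so every sample landed in the predicted-negative column, and 53/143 is only about 37%. The likely cause is that `model.predict` returns sigmoid probabilities with shape `(n, 1)`, not class labels, and `tf.math.confusion_matrix` casts predictions to integers, which truncates any probability below 1.0 to 0. Thresholding first should fix it; in the question's code that would be something like `y_pred = (model.predict(X_test) > 0.5).astype(int).ravel()`. The sketch below is an illustration under assumptions: the probabilities are made up to stand in for `model.predict` output, and it uses NumPy and scikit-learn instead of TensorFlow so it runs on its own.

```python
import numpy as np
from sklearn.metrics import accuracy_score, confusion_matrix

# Hypothetical sigmoid outputs, shape (n, 1) like model.predict returns for one
# sigmoid unit. These values are invented for illustration.
probs = np.array([[0.02], [0.97], [0.10], [0.88], [0.64], [0.31]])
y_true = np.array([0, 1, 0, 1, 1, 1])

# Casting probabilities straight to int truncates them: everything below 1.0 becomes 0.
truncated = probs.astype(int).ravel()
print(truncated)  # [0 0 0 0 0 0]
# With truncated "predictions", every count lands in the predicted-0 column.
print(confusion_matrix(y_true, truncated))

# Threshold at 0.5 to turn probabilities into class labels, then flatten to 1-D.
y_pred = (probs > 0.5).astype(int).ravel()
print(y_pred)  # [0 1 0 1 1 0]

cm = confusion_matrix(y_true, y_pred)
print(cm)

# Accuracy recomputed from the matrix: correct predictions lie on the diagonal,
# so trace / total should match accuracy_score on the same labels.
acc_from_cm = np.trace(cm) / cm.sum()
print(acc_from_cm, accuracy_score(y_true, y_pred))
```

Once the predictions are thresholded, the diagonal of the matrix divided by the test-set size should agree with the accuracy Keras reports on the same data.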