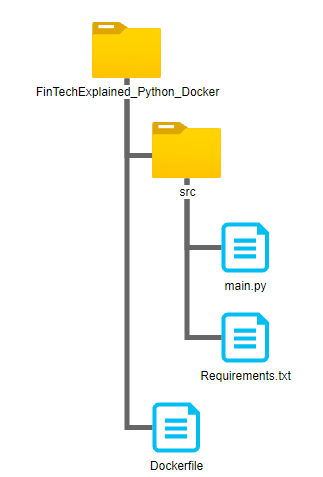

My folder structure looked like this:

My Dockerfile looked like this:

FROM python:3.8-slim-buster

WORKDIR /src

COPY src/requirements.txt requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

COPY src/ .

CMD [ "python", "main.py"]

When I ran these commands:

docker build --tag free .

docker run free

my main.py file ran and printed the correct output. Now I have added another file, tests.py, to the src folder. I want to run tests.py first and then main.py.

I tried modifying the CMD within my Dockerfile like this:

CMD [ "python", "tests.py"] && [ "python", "main.py"]

but then I only get the print statements from the first file, tests.py.

I read about docker-compose and added this docker-compose.yml file to the root folder:

version: '3'
services:
  tests:
    image: free
    command: >
      /bin/sh -c 'python tests.py'
  main:
    image: free
    command: >
      /bin/sh -c 'python main.py'

Then I changed my Dockerfile by removing the CMD:

FROM python:3.8-slim-buster

WORKDIR /src

COPY src/requirements.txt requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

COPY src/ .

Then I ran the following commands:

docker compose build

docker compose run tests

docker compose run main

When I run these commands separately, I get the correct print statements for both tests and main. However, I am not sure whether I am using docker-compose correctly.

- Am I supposed to run both scripts separately, or is there a way to run one after the other using a single docker command?

- What should my Dockerfile look like if I am running the Python scripts from the docker-compose.yml instead?

Edit:

Ideally, I am looking for solutions based on docker-compose.

Answer:

You have to change CMD to ENTRYPOINT and run the first script in the background using &:

COPY docker_entrypoint.sh /docker_entrypoint.sh

RUN chmod +x /docker_entrypoint.sh

ENTRYPOINT ["/docker_entrypoint.sh"]

docker_entrypoint.sh

#!/bin/bash

set -e

python tests.py &

exec python main.py
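For comparison, here is a rough Python analogue of that script using the standard subprocess module (the function name run_background_then_foreground is hypothetical). It makes the effect of & visible: Popen starts the first script and returns immediately, so the two scripts run at the same time rather than one after the other.

```python
import subprocess
import sys


def run_background_then_foreground(first, second):
    """Start `first` without waiting for it, then run `second` to completion.

    Mirrors `python tests.py &` followed by `python main.py`: both scripts
    run concurrently, so their output can interleave.
    Returns the exit codes as (first, second).
    """
    # Popen returns immediately; the first script keeps running in the background
    background = subprocess.Popen([sys.executable, first])
    # run() blocks until the second script exits
    foreground = subprocess.run([sys.executable, second])
    # Unlike the shell script, wait for the background script so its
    # exit code can be reported (and it does not linger as a zombie)
    background_code = background.wait()
    return background_code, foreground.returncode
```

In the container itself nothing waits for the background job: once main.py (running as PID 1 after exec) exits, the container stops and any still-running tests.py process is killed with it.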

Answer:

In general, it is a good rule of thumb that a container should run only a single process, and that this essential process should be PID 1.

Using an entrypoint script lets you do multiple things at runtime and then optionally run a user-defined command using exec, as recommended in the Dockerfile best practices guide.

For example, if you always want the tests to run whenever the container starts, the entrypoint can run them first and then execute the command defined in CMD.

First, create an entrypoint script (be sure to make it executable with chmod +x):

#!/usr/bin/env bash

# always run tests first

python /src/tests.py

# then run user-defined command

exec "$@"
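The same run-then-exec idea can be sketched in Python, in case you would rather not add a shell script. This is only an illustration: the function name entrypoint and the default path /src/tests.py are assumptions, and exec_fn defaults to os.execvp, which replaces the current process the way exec does in bash.

```python
import os
import subprocess
import sys


def entrypoint(argv, tests_path="/src/tests.py", exec_fn=os.execvp):
    """Run the tests, then replace this process with the command in argv."""
    # Always run the tests first. Like the bash script (which has no
    # `set -e`), a failing test run does not stop the command from starting.
    subprocess.run([sys.executable, tests_path])
    if not argv:
        # `exec "$@"` with no arguments simply lets the script finish
        return
    # Replace this process with the user-defined command, like `exec "$@"`.
    # exec_fn is injectable so the function can be tried without exec'ing.
    exec_fn(argv[0], argv)
```

Called as entrypoint(sys.argv[1:]) from a script set as the ENTRYPOINT, the CMD arguments (for example python main.py) would arrive in argv and take over the process after the tests finish.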

Then configure the Dockerfile to copy the script and set it as the entrypoint:

#...

COPY entrypoint.sh /docker-entrypoint.sh

ENTRYPOINT ["/docker-entrypoint.sh"]

CMD ["python", "main.py"]

When you build an image from this Dockerfile and run it, the entrypoint first executes the tests and then runs the CMD, which starts main.py.

The user can still override the command when running the image, e.g. docker run ... myimage <new command>. The entrypoint will still execute the tests first, but it will then run the new command instead of main.py.
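A rough way to picture how ENTRYPOINT, CMD, and run-time arguments combine (for exec-form instructions) is the hypothetical helper below. It follows the documented rules as I understand them: run-time arguments replace CMD but are appended to ENTRYPOINT, and overriding the entrypoint (docker run --entrypoint, or entrypoint: in Compose) also drops the image's default CMD. It simplifies details such as --entrypoint accepting only a single executable.

```python
def effective_command(entrypoint, cmd, run_args=None, entrypoint_override=None):
    """Return the argv a container starts with (exec-form ENTRYPOINT/CMD only).

    entrypoint, cmd: the image's ENTRYPOINT and CMD as lists.
    run_args: arguments after the image name in `docker run`, or a Compose
        `command:` value; replaces CMD when non-empty.
    entrypoint_override: `docker run --entrypoint` or Compose `entrypoint:`.
    """
    if entrypoint_override is not None:
        # Overriding the entrypoint also discards the image's default CMD
        entrypoint, cmd = entrypoint_override, []
    if run_args:
        # Run-time arguments replace CMD but are still passed to ENTRYPOINT
        cmd = run_args
    return list(entrypoint) + list(cmd)
```

For example, effective_command(["/docker-entrypoint.sh"], ["python", "main.py"]) gives ["/docker-entrypoint.sh", "python", "main.py"]. In Compose terms, command: plays the role of run_args, so a service whose command: runs python main.py would still go through the entrypoint and run the tests first.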

Answer:

You can achieve this by creating a bash script (let's name it entrypoint.sh) that contains the Python commands. If you want, you can also run them as background processes.

#!/usr/bin/env bash

set -e

python tests.py

python main.py
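If you prefer to keep the sequencing in Python, a small runner could do the same job as this script. The sketch below is an assumption rather than part of the answer: run_in_order is a hypothetical name, and it uses subprocess.run to wait for each script and stop at the first non-zero exit code, much like set -e does for the bash version.

```python
import subprocess
import sys


def run_in_order(scripts):
    """Run each Python script to completion, in order, stopping at the first failure.

    Returns the exit code of the failing script, or 0 if all of them succeeded.
    """
    for script in scripts:
        # run() waits for the script to exit before the next one starts
        result = subprocess.run([sys.executable, script])
        if result.returncode != 0:
            # Like `set -e`: abort on the first non-zero exit status
            return result.returncode
    return 0
```

Saved as, say, run_all.py next to the scripts and ending with sys.exit(run_in_order(["tests.py", "main.py"])), it could be started with CMD ["python", "run_all.py"] in place of the shell entrypoint.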

Edit your Dockerfile as follows:

FROM python:3.8-slim-buster

# Create and switch to the working directory (WORKDIR creates it if needed)

WORKDIR /code

ENV PYTHONPATH=/code

# Upgrade pip here if you like

COPY requirements.txt .

RUN pip install -r requirements.txt

# Copy Code

COPY . .

RUN chmod +x entrypoint.sh

ENTRYPOINT ["./entrypoint.sh"]

In the docker-compose.yml file, add the following line to the service:

entrypoint: [ "./entrypoint.sh" ]