So I was wondering whether there is a way to stitch two parts of an image together at the right position using keypoints and a homography matrix.

As an example, here are the two images.

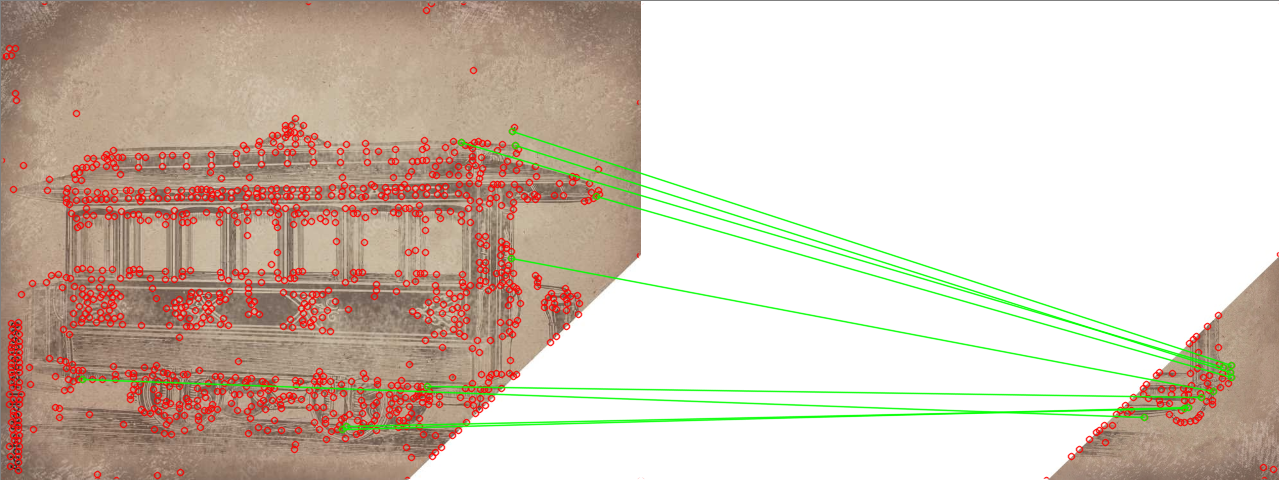

For the keypoint detection I am using SuperPoint, and I get the following result.

I am looking for a way, maybe using the `cv2.warpAffine` function, to align the images, but all my attempts so far have failed. The goal is to let the program decide where to place the second image within the first one.
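A minimal NumPy sketch of the two steps involved, assuming you already have matched (x, y) point pairs between the images: estimate a 3×3 homography with the normalized direct linear transform (DLT), then project the corners of the second image through it to see where it lands in the first. This is roughly what `cv2.findHomography` (without RANSAC) and `cv2.perspectiveTransform` do; the function names below are hypothetical. Note that `cv2.warpAffine` takes a 2×3 matrix, so it cannot apply the perspective row of a homography; `cv2.warpPerspective` takes the full 3×3 matrix.

```python
import numpy as np


def _normalize(pts):
    """Hartley normalization: centre points at the origin, mean distance sqrt(2)."""
    mean = pts.mean(axis=0)
    scale = np.sqrt(2) / np.mean(np.linalg.norm(pts - mean, axis=1))
    T = np.array([[scale, 0.0, -scale * mean[0]],
                  [0.0, scale, -scale * mean[1]],
                  [0.0, 0.0, 1.0]])
    pts_h = np.column_stack([pts, np.ones(len(pts))])
    return (pts_h @ T.T)[:, :2], T


def estimate_homography_dlt(src, dst):
    """Estimate H (3x3) with dst ~ H @ src from N >= 4 (x, y) correspondences."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # Each correspondence gives two linear equations in the 9 entries of H
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y, -u])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y, -v])
    # H is the right singular vector belonging to the smallest singular value
    _, _, vt = np.linalg.svd(np.array(rows))
    H_n = vt[-1].reshape(3, 3)
    H = np.linalg.inv(T_dst) @ H_n @ T_src  # undo the normalization
    return H / H[2, 2]


def project_points(H, pts):
    """Apply H to (x, y) points: homogeneous multiply, then divide by w."""
    pts = np.asarray(pts, dtype=float)
    ph = np.column_stack([pts, np.ones(len(pts))]) @ H.T
    return ph[:, :2] / ph[:, 2:3]


if __name__ == "__main__":
    # Synthetic example: a 640x480 image 2 sitting at offset (100, 50) inside image 1
    H_true = np.array([[1.0, 0.0, 100.0], [0.0, 1.0, 50.0], [0.0, 0.0, 1.0]])
    pts2 = np.array([[0, 0], [639, 0], [639, 479], [0, 479], [320, 240]], dtype=float)
    pts1 = project_points(H_true, pts2)
    H = estimate_homography_dlt(pts2, pts1)
    # Corners of image 2 expressed in image 1's coordinates
    print(np.round(project_points(H, pts2[:4])))
```

The projected corners give the placement directly; with OpenCV you would then pass the same 3×3 matrix to `cv2.warpPerspective`. If H came from matching image 1 to image 2, its inverse (`np.linalg.inv(H)`) maps image 2 into image 1.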

Here is the code I am using:


```python
import argparse
import os
from pathlib import Path

# Must be set before TensorFlow is imported to silence its C++ logging
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

import cv2  # noqa: E402
import numpy as np  # noqa: E402
import tensorflow as tf  # noqa: E402  (TensorFlow 1.x API: tf.Session, tf.saved_model.loader)

from superpoint.settings import EXPER_PATH  # noqa: E402


def extract_superpoint_keypoints_and_descriptors(keypoint_map, descriptor_map,
                                                 keep_k_points=1000):

    def select_k_best(points, k):
        """ Select the k most probable points (and strip their proba).
        points has shape (num_points, 3) where the last coordinate is the proba. """
        sorted_prob = points[points[:, 2].argsort(), :2]
        start = min(k, points.shape[0])
        return sorted_prob[-start:, :]

    # Extract keypoints as (row, col, probability)
    keypoints = np.where(keypoint_map > 0)
    prob = keypoint_map[keypoints[0], keypoints[1]]
    keypoints = np.stack([keypoints[0], keypoints[1], prob], axis=-1)
    keypoints = select_k_best(keypoints, keep_k_points)
    keypoints = keypoints.astype(int)

    # Get descriptors for keypoints
    desc = descriptor_map[keypoints[:, 0], keypoints[:, 1]]

    # Convert (row, col) points to cv2.KeyPoint, which stores (x, y) = (col, row)
    keypoints = [cv2.KeyPoint(float(p[1]), float(p[0]), 1) for p in keypoints]

    return keypoints, desc


def match_descriptors(kp1, desc1, kp2, desc2):
    # Match the keypoints of both images with a cross-checked nearest-neighbour search
    bf = cv2.BFMatcher(cv2.NORM_L2, crossCheck=True)
    matches = bf.match(desc1, desc2)
    matches_idx = np.array([m.queryIdx for m in matches])
    m_kp1 = [kp1[idx] for idx in matches_idx]
    matches_idx = np.array([m.trainIdx for m in matches])
    m_kp2 = [kp2[idx] for idx in matches_idx]

    return m_kp1, m_kp2, matches


def compute_homography(matched_kp1, matched_kp2):
    # KeyPoint_convert returns (x, y) points, the order cv2.warpPerspective expects,
    # so they are passed as-is (swapping to (row, col) would give an H in row/col space)
    matched_pts1 = cv2.KeyPoint_convert(matched_kp1)
    matched_pts2 = cv2.KeyPoint_convert(matched_kp2)

    # H maps points of image 1 onto image 2; RANSAC flags outlier matches
    H, inliers = cv2.findHomography(matched_pts1, matched_pts2, cv2.RANSAC, 5.0)
    inliers = inliers.flatten()
    print(H)

    return H, inliers


def preprocess_image(img_file, img_size):
    img = cv2.imread(img_file, cv2.IMREAD_COLOR)
    img = cv2.resize(img, img_size)
    img_orig = img.copy()

    # SuperPoint expects a single-channel float image in [0, 1]
    img = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
    img = np.expand_dims(img, 2)
    img = img.astype(np.float32)
    img_preprocessed = img / 255.

    return img_preprocessed, img_orig


if __name__ == '__main__':
    parser = argparse.ArgumentParser(description='Compute the homography between two '
                                     'images with the SuperPoint feature matches.')
    parser.add_argument('weights_name', type=str)
    parser.add_argument('img1_path', type=str)
    parser.add_argument('img2_path', type=str)
    parser.add_argument('--H', type=int, default=480,
                        help='The height in pixels to resize the images to. (default: 480)')
    parser.add_argument('--W', type=int, default=640,
                        help='The width in pixels to resize the images to. (default: 640)')
    parser.add_argument('--k_best', type=int, default=1000,
                        help='Maximum number of keypoints to keep (default: 1000)')
    args = parser.parse_args()

    weights_name = args.weights_name
    img1_file = args.img1_path
    img2_file = args.img2_path
    img_size = (args.W, args.H)  # cv2.resize expects (width, height)
    keep_k_best = args.k_best

    weights_root_dir = Path(EXPER_PATH, 'saved_models')
    weights_root_dir.mkdir(parents=True, exist_ok=True)
    weights_dir = Path(weights_root_dir, weights_name)

    graph = tf.Graph()
    with tf.Session(graph=graph) as sess:
        tf.saved_model.loader.load(sess,
                                   [tf.saved_model.tag_constants.SERVING],
                                   str(weights_dir))

        input_img_tensor = graph.get_tensor_by_name('superpoint/image:0')
        output_prob_nms_tensor = graph.get_tensor_by_name('superpoint/prob_nms:0')
        output_desc_tensors = graph.get_tensor_by_name('superpoint/descriptors:0')

        img1, img1_orig = preprocess_image(img1_file, img_size)
        out1 = sess.run([output_prob_nms_tensor, output_desc_tensors],
                        feed_dict={input_img_tensor: np.expand_dims(img1, 0)})
        keypoint_map1 = np.squeeze(out1[0])
        descriptor_map1 = np.squeeze(out1[1])
        kp1, desc1 = extract_superpoint_keypoints_and_descriptors(
            keypoint_map1, descriptor_map1, keep_k_best)

        img2, img2_orig = preprocess_image(img2_file, img_size)
        out2 = sess.run([output_prob_nms_tensor, output_desc_tensors],
                        feed_dict={input_img_tensor: np.expand_dims(img2, 0)})
        keypoint_map2 = np.squeeze(out2[0])
        descriptor_map2 = np.squeeze(out2[1])
        kp2, desc2 = extract_superpoint_keypoints_and_descriptors(
            keypoint_map2, descriptor_map2, keep_k_best)

    # Match and get rid of outliers
    m_kp1, m_kp2, matches = match_descriptors(kp1, desc1, kp2, desc2)
    H, inliers = compute_homography(m_kp1, m_kp2)
    matches = np.array(matches)[inliers.astype(bool)].tolist()

    # Draw the inlier matches side by side and show them
    matched_img = cv2.drawMatches(img1_orig, kp1, img2_orig, kp2, matches,
                                  None, matchColor=(0, 255, 0),
                                  singlePointColor=(0, 0, 255))
    cv2.imshow('matches', matched_img)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
```
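One detail matters once you try to warp with the estimated matrix: `cv2.KeyPoint_convert` returns points as (x, y). If they are passed to `cv2.findHomography` in (row, col) order instead (for example through a `[:, [1, 0]]` column swap), the resulting H acts on (row, col) coordinates, while `cv2.warpPerspective` and `cv2.perspectiveTransform` work in (x, y). A minimal NumPy sketch of converting such a matrix by conjugating it with the coordinate-swap matrix; `SWAP` and `rowcol_to_xy_homography` are hypothetical names.

```python
import numpy as np

# Swaps the two coordinates of a homogeneous point: (x, y, 1) <-> (y, x, 1)
SWAP = np.array([[0.0, 1.0, 0.0],
                 [1.0, 0.0, 0.0],
                 [0.0, 0.0, 1.0]])


def rowcol_to_xy_homography(H_rc):
    """Convert a homography estimated on (row, col) points to one acting on (x, y).

    If q_rc ~ H_rc @ p_rc and p_rc = SWAP @ p_xy, then
    q_xy = SWAP @ q_rc ~ SWAP @ H_rc @ SWAP @ p_xy (SWAP is its own inverse).
    """
    H_xy = SWAP @ H_rc @ SWAP
    return H_xy / H_xy[2, 2]
```

With an (x, y) homography H that maps image 1 onto image 2, `cv2.warpPerspective(img1_orig, H, (width, height))` renders image 1 in image 2's frame; `np.linalg.inv(H)` goes the other way.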

I appreciate your help!

Answer:

So, for closure: using homographies or affine transformations to stitch these two images together is not the right approach. Each part contains too many candidate interest points that are highly ambiguous (they look alike and match equally well in several places), which prevents the stitching from succeeding.
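One common way to quantify this kind of ambiguity (an addition, not part of the original answer) is Lowe's ratio test: a match is kept only if its nearest descriptor is clearly closer than the second-nearest one. A minimal NumPy sketch with brute-force L2 distances; the function name and the 0.8 threshold are illustrative assumptions.

```python
import numpy as np


def ratio_test_matches(desc1, desc2, ratio=0.8):
    """Return (i, j) pairs where desc2[j] is desc1[i]'s nearest neighbour and is
    clearly closer than the second-nearest neighbour. desc2 needs >= 2 rows."""
    # (N1, N2) matrix of L2 distances between all descriptor pairs
    d = np.linalg.norm(desc1[:, None, :] - desc2[None, :, :], axis=2)
    order = np.argsort(d, axis=1)
    best, second = order[:, 0], order[:, 1]
    rows = np.arange(len(desc1))
    # Ambiguous matches (best about as far as second best) fail this check
    keep = d[rows, best] < ratio * d[rows, second]
    return [(int(i), int(best[i])) for i in rows[keep]]
```

In OpenCV the same idea is usually written with `cv2.BFMatcher(cv2.NORM_L2).knnMatch(desc1, desc2, k=2)` followed by the same distance comparison. Descriptors from repetitive or featureless regions tend to fail it, which leaves few reliable matches to estimate a transform from.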

The only way this could have worked is if your feature matches lay on the boundaries where the two images were separated from each other. However, those points would never be interest points, because their local neighbourhoods do not carry any distinctive information. Also, edges are by definition not interest points, so no detector would ever return them as such.
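To illustrate the point about edges, here is a minimal NumPy sketch of the classic Harris corner response, swapped in as a stand-in (SuperPoint is a learned detector and works differently). The response R = det(M) - k·trace(M)² of the local structure tensor M is positive at corners, negative along edges (one dominant gradient direction), and zero in flat regions. The function name, `k=0.04` and the 5×5 box window are illustrative choices.

```python
import numpy as np


def harris_response(img, k=0.04, win=2):
    """Harris response per pixel, summing gradient products over a (2*win+1)^2 box."""
    img = img.astype(float)
    Iy, Ix = np.gradient(img)  # np.gradient returns d/drow, then d/dcol
    Ixx, Iyy, Ixy = Ix * Ix, Iy * Iy, Ix * Iy

    def box_sum(a):
        # Sum each pixel's neighbourhood, replicating border values
        p = np.pad(a, win, mode='edge')
        out = np.zeros_like(a)
        h, w = a.shape
        for dy in range(-win, win + 1):
            for dx in range(-win, win + 1):
                out += p[win + dy: win + dy + h, win + dx: win + dx + w]
        return out

    Sxx, Syy, Sxy = box_sum(Ixx), box_sum(Iyy), box_sum(Ixy)
    det = Sxx * Syy - Sxy ** 2
    trace = Sxx + Syy
    return det - k * trace ** 2
```

On a test image that is dark except for a bright bottom-right quadrant, the response is positive at the quadrant's corner and negative along its straight sides. OpenCV provides this detector as `cv2.cornerHarris`.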

I apologize if this wasn't the answer you were looking for!

Answer:

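This should hopefully be what you are looking for. `cv2.addWeighted` is the OpenCV function for merging (blending) two images: it computes a weighted per-pixel sum, so both images must have the same size and number of channels.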

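```python
import cv2

img1 = cv2.imread('7FgBZ.jpg', cv2.IMREAD_COLOR)
img2 = cv2.imread('qqiH8.jpg', cv2.IMREAD_COLOR)
if img1 is None or img2 is None:
    raise FileNotFoundError('Could not read one of the input images')

# addWeighted needs both inputs to have the same size and type
img2 = cv2.resize(img2, (img1.shape[1], img1.shape[0]))

# dst = img1 * 0.2 + img2 * 0.2 + 0 (weights summing to less than 1 darken the result)
dst = cv2.addWeighted(img1, 0.2, img2, 0.2, 0)

cv2.imshow('dst', dst)
cv2.waitKey(0)
cv2.destroyAllWindows()
```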
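For reference, `cv2.addWeighted(src1, alpha, src2, beta, gamma)` computes `src1*alpha + src2*beta + gamma` per pixel and saturates the result to the output type. It overlays two equally sized images; it does not decide where one image should go inside the other. A NumPy sketch of that arithmetic for `uint8` images (the function name is hypothetical, and rounding of exact .5 cases may differ slightly from OpenCV):

```python
import numpy as np


def add_weighted(src1, alpha, src2, beta, gamma=0.0):
    """Approximate cv2.addWeighted for uint8 images: saturate(src1*alpha + src2*beta + gamma)."""
    if src1.shape != src2.shape:
        raise ValueError("both images must have the same shape")
    blended = src1.astype(np.float64) * alpha + src2.astype(np.float64) * beta + gamma
    # Round and clip to the valid uint8 range, like a saturating cast
    return np.clip(np.rint(blended), 0, 255).astype(np.uint8)
```

With weights 0.2 and 0.2 a pixel pair (200, 100) becomes 60, which is why the blend above looks dark; weights that sum to 1 (for example 0.5 and 0.5) keep the overall brightness.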