First install Visual Studio 2017. Then download and install the latest version of OpenVINO (2019 R3) from the official website. Following the official "Install Intel Distribution of OpenVINO toolkit for Windows 10" guide, download and install the libraries it depends on, as well as the model files from the Open Model Zoo.

Once everything is installed, you can compile the sample programs that ship with OpenVINO.

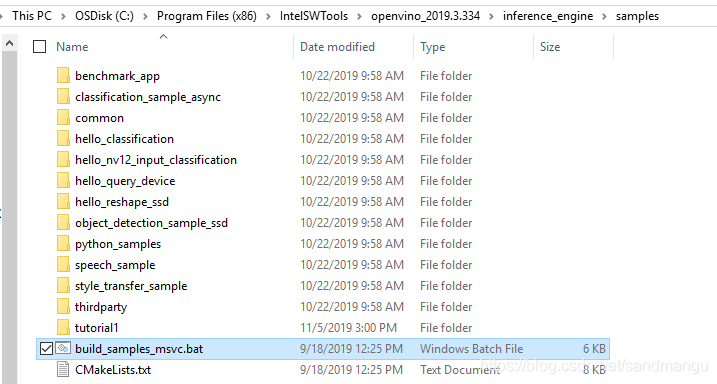

OpenVINO thoughtfully provides a batch file for this (shown in the figure below). Double-click it in File Explorer and it detects which version of Visual Studio is installed (VS2015, VS2017 or VS2019), automatically generates the corresponding Visual C++ project files, and compiles the code.

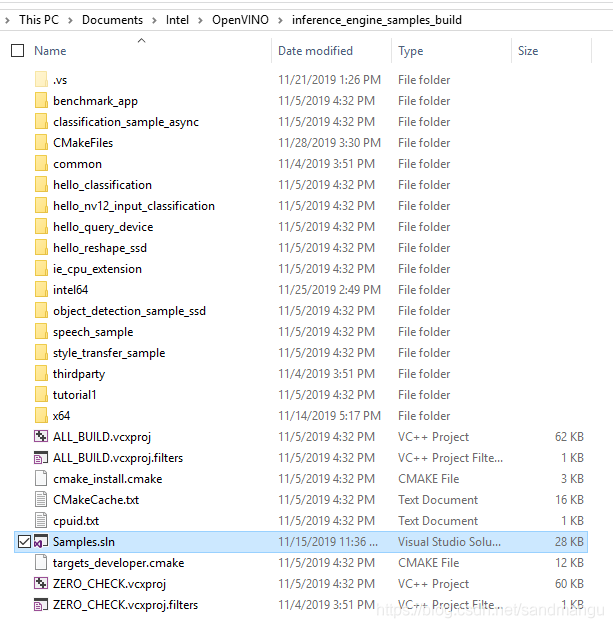

The generated solution file, Samples.sln, is placed in the Documents\Intel\OpenVINO\inference_engine_samples_build directory.

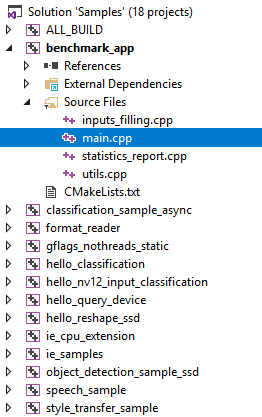

The benchmark_app project is the official reference project for measuring OpenVINO inference performance. Studying its code is a good way to learn how to get the most out of the hardware and reach higher inference performance.

For details on using benchmark_app, see the "Benchmark C++ Tool" page on the official website. To measure CPU performance, the simplest command is:

`./benchmark_app -m <model> -i <input> -d CPU`

`-m` is followed by the model file, `-i` is followed by a directory containing the images to run inference on, and `-d` selects the target device.

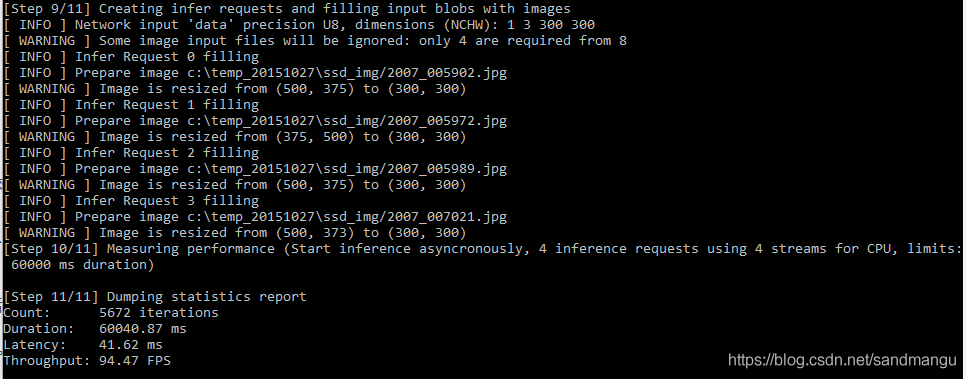

Here I use MobileNet-SSD for the test:

`benchmark_app.exe -m c:\temp\cvt_model\mobilenet-ssd-fp32\mobilenet-ssd.xml -i c:\temp\ssd_img`
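If you want to script such runs, the command line can be assembled from Python. The sketch below is only an illustration: the helper names `build_benchmark_cmd` and `run_benchmark` are hypothetical, it uses nothing but the `-m`, `-i` and `-d` flags described above (adding `-d CPU` explicitly, as in the simple command), and it assumes `benchmark_app.exe` is in the current directory or on `PATH`.

```python
import subprocess


def build_benchmark_cmd(model_xml, input_dir, device="CPU", exe="benchmark_app.exe"):
    """Build the argument list for one benchmark_app run (hypothetical helper).

    Only the -m (model), -i (input directory) and -d (device) flags are used.
    """
    return [exe, "-m", model_xml, "-i", input_dir, "-d", device]


def run_benchmark(cmd):
    """Run the tool and return its console output; raises CalledProcessError on failure."""
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return result.stdout


cmd = build_benchmark_cmd(
    r"c:\temp\cvt_model\mobilenet-ssd-fp32\mobilenet-ssd.xml",
    r"c:\temp\ssd_img",
)
print(" ".join(cmd))
# Only on a machine with OpenVINO installed and benchmark_app.exe reachable:
# print(run_benchmark(cmd))
```

Passing a list instead of a single command string lets `subprocess` handle quoting, so Windows paths containing spaces do not need manual escaping.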

By default, the test program runs inference with the model repeatedly for one minute, counts how many images were processed in that minute, and computes the average time needed per image.

The two values to focus on here are Latency and Throughput.

Latency is the average time needed to run inference on a single image, while Throughput is the number of images that can be processed per second.
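To make the two definitions concrete, here is a small sketch that computes both numbers from a hypothetical log of per-image start and end times in milliseconds. It illustrates the definitions only and is not benchmark_app's actual code; the function name `summarize` and the sample timings are made up.

```python
def summarize(runs):
    """runs: list of (start_ms, end_ms) pairs, one per image inferred.

    Returns (average latency in ms, throughput in images per second).
    """
    latencies = [end - start for start, end in runs]
    avg_latency_ms = sum(latencies) / len(latencies)
    # Wall-clock span from the first start to the last finish.
    wall_ms = max(end for _, end in runs) - min(start for start, _ in runs)
    throughput_fps = len(runs) * 1000.0 / wall_ms
    return avg_latency_ms, throughput_fps


# Hypothetical timings: four images processed one after another, 10 ms each.
sequential = [(0, 10), (10, 20), (20, 30), (30, 40)]
print(summarize(sequential))  # → (10.0, 100.0)
```

When images are processed strictly one at a time, throughput is simply 1000 / latency; the two numbers only diverge once several inferences overlap in time.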

In practice, if we want the inference result for one image as quickly as possible, Latency needs to be as small as possible. If we want to fully tap the potential of the hardware and process as much data as possible per unit of time, Throughput needs to be as large as possible. These two goals usually work against each other. To make Latency small, all hardware resources should be focused on doing one thing. To make Throughput large, every hardware resource should be kept working at full capacity so that no part of the circuitry sits idle, which means running many tasks at the same time, with each individual task taking longer to finish.
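One way to see why the two goals conflict is Little's law, which for a system in steady state says that throughput equals the number of requests in flight divided by the average latency. The sketch below applies it to made-up numbers (they are not benchmark_app measurements): running more requests concurrently raises each request's latency, yet can still raise throughput as long as the hardware was previously underused. The function name `throughput_fps` is hypothetical.

```python
def throughput_fps(in_flight, latency_ms):
    """Steady-state throughput predicted by Little's law.

    in_flight: number of inference requests being processed at the same time
    latency_ms: average time each request spends from start to finish
    """
    if latency_ms <= 0:
        raise ValueError("latency must be positive")
    return in_flight * 1000.0 / latency_ms


# Hypothetical numbers: one request at a time finishes quickly ...
print(throughput_fps(1, 10.0))  # → 100.0
# ... while four concurrent requests each take longer but complete more images per second.
print(throughput_fps(4, 25.0))  # → 160.0
```

The trade-off only pays off while the extra concurrent work fills idle hardware; once everything is saturated, adding requests increases latency without increasing throughput.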