I have a DataFrame in Python, df, that I want to pass to a %scala cell so I can use it there.

I have tried:

%python

pyDf.createOrReplaceTempView("testDF")  # error message

CodePudding user response:

Just query it with spark.sql:

val scalaDf = spark.sql("select * from testDF")
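This works because a temp view is registered on the Spark session rather than in the Python interpreter, and in a Databricks notebook the cells of different languages are expected to share that session. A minimal sketch of the Python side follows. The helper name `register_for_scala` and the identifier check are illustrative assumptions, not part of any Spark API; only `createOrReplaceTempView` is a real DataFrame method.

```python
def register_for_scala(df, view_name="testDF"):
    """Register `df` as a temp view and return the Scala line that reads it back.

    `df` is assumed to be a Spark DataFrame (anything exposing
    createOrReplaceTempView). A pandas DataFrame must first be converted
    with spark.createDataFrame.
    """
    # Hypothetical guard: keep the view name a plain identifier so it can be
    # placed into the SQL text without quoting.
    if not view_name.isidentifier():
        raise ValueError(f"unsupported view name: {view_name!r}")

    # The view is stored on the Spark session, so other language cells
    # attached to the same session can look it up by name.
    df.createOrReplaceTempView(view_name)

    # Line to run in a %scala cell of the same notebook.
    return f'val scalaDf = spark.sql("select * from {view_name}")'
```

In a %python cell you could call `print(register_for_scala(pyDf))` and then run the printed line in a %scala cell. If the Python call itself fails, check that the object is a Spark DataFrame and not a pandas one.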

CodePudding user response:

It's not too difficult. Here is some sample code to try; it works in PyCharm or Databricks.

from pyspark.sql import SparkSession

import pandas as pd

spark = SparkSession.builder.master("local").appName("testing").getOrCreate()

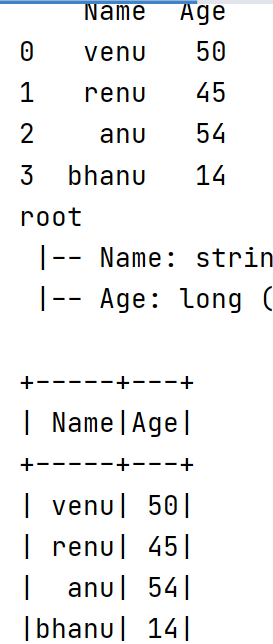

data = [['venu', 50], ['renu', 45], ['anu', 54], ['bhanu', 14]]

# Create the pandas DataFrame

pdf = pd.DataFrame(data, columns=['Name', 'Age'])

print(pdf)

# Convert the pandas DataFrame to a Spark DataFrame

sparkDF = spark.createDataFrame(pdf)

sparkDF.printSchema()

sparkDF.show()