I am reading the mariadb table from spark which has date and datetime fields. Spark is throwing error while reading.

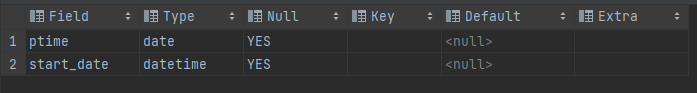

Below is the schema of mariadb table:

Spark code to read mariadb table:

val df = spark.read.format("jdbc").option("driver", "org.mariadb.jdbc.Driver").option("url", "jdbc:mariadb://xxxx:xxxx/db").option("user", "user").option("password", "password").option("dbtable", "select * from test_ankur").load()

df.select("ptime").show()

This throw below error for date field:

Caused by: java.sql.SQLTransientConnectionException: Could not get object as Date : Unparseable date: "ptime"

at org.mariadb.jdbc.internal.util.exceptions.ExceptionFactory.createException(ExceptionFactory.java:79)

at org.mariadb.jdbc.internal.util.exceptions.ExceptionFactory.create(ExceptionFactory.java:183)

at org.mariadb.jdbc.internal.com.read.resultset.rowprotocol.TextRowProtocol.getInternalDate(TextRowProtocol.java:546)

at org.mariadb.jdbc.internal.com.read.resultset.SelectResultSet.getDate(SelectResultSet.java:1065)

Getting below error for datetime field:

Caused by: java.sql.SQLException: cannot parse data in timestamp string 'start_date'

at org.mariadb.jdbc.internal.com.read.resultset.rowprotocol.TextRowProtocol.getInternalTimestamp(TextRowProtocol.java:645)

at org.mariadb.jdbc.internal.com.read.resultset.SelectResultSet.getTimestamp(SelectResultSet.java:1125)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$org$apache$spark$sql$execution$datasources$jdbc$JdbcUtils$$makeGetter$12.apply(JdbcUtils.scala:452)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anonfun$org$apache$spark$sql$execution$datasources$jdbc$JdbcUtils$$makeGetter$12.apply(JdbcUtils.scala:451)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anon$1.getNext(JdbcUtils.scala:356)

at org.apache.spark.sql.execution.datasources.jdbc.JdbcUtils$$anon$1.getNext(JdbcUtils.scala:338)

CodePudding user response:

I had similar issue when I was trying get date type values. I added "nullCatalogMeansCurrent=true" in the URL and it worked.

url "jdbc:mariadb://xxxx:3306/datalake_test?useSSL=FALSE&nullCatalogMeansCurrent=true"

CodePudding user response:

I got this working by changing the connection string to mysql:

jdbc:mysql://xxxx:xxxx/db

As per mariadb documentation MariaDB Column Store with Spark

Currently Spark does not correctly recognize mariadb specific jdbc connect strings and so the jdbc:mysql syntax must be used.