Let's assume I have N points, for example:

A = [2, 3]
B = [3, 4]
C = [3, 3]

and so on. (In practice there are a lot of them, which is why I'm using NumPy.)

They're held in a NumPy array `[A, B, C, ...]` with shape (N, 2), i.e. `arr = np.array([[2, 3], [3, 4], [3, 3]])`.

As output I need the distances between every pair of points, in BFS (breadth-first search) order, i.e. A->B, A->C, B->C, so I can track which distance belongs to which pair. Rounding doesn't matter: two decimal places or a plain floored integer are both fine. For the example data above, the result would be [1.41, 1.0, 1.0].
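For reference, this ordering (every pair i < j, row by row) is exactly what `itertools.combinations` produces. A minimal pure-Python sketch using only the standard library (`math.dist` needs Python 3.8+); it is a loop-based baseline for checking a vectorized version, not the fast solution being asked for, and the function name `pairwise_distances_loop` is hypothetical:

```python
import math
from itertools import combinations

def pairwise_distances_loop(points):
    """Distances for every pair (i, j) with i < j, in A->B, A->C, B->C order."""
    return [math.dist(p, q) for p, q in combinations(points, 2)]

# Dicts keep insertion order, so the labels follow A, B, C
points = {"A": (2, 3), "B": (3, 4), "C": (3, 3)}
labels = [f"{a}->{b}" for a, b in combinations(points, 2)]
dists = pairwise_distances_loop(list(points.values()))

for label, d in zip(labels, dists):
    print(label, round(d, 2))
# A->B 1.41
# A->C 1.0
# B->C 1.0
```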

So far I've tried many approaches with np.linalg.norm, np.sqrt, np.square, arr.reshape, math.hypot and so on. I managed to write an iterative solution, but it isn't efficient with NumPy. Is there a way to do this with NumPy that avoids iterating?

I can transform my data however necessary, for example into two arrays, one holding the X coordinates and the other the Y coordinates, but expressing a non-iterative approach with NumPy features is my mental bottleneck right now. Any advice is welcome; I'm not only asking for a ready-made solution, but those are welcome too :)

Edit: I have to accomplish this with NumPy or the core (standard) libraries only.
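With the two-array layout mentioned above, one non-iterative option is to generate the index pairs i < j once with `np.triu_indices` (they come out row by row, i.e. in the A->B, A->C, B->C order) and then compute all distances in a single vectorized `np.hypot` call. A sketch, assuming `x` and `y` are 1-D arrays of equal length N; the index arrays and the result each hold N(N-1)/2 entries, so memory still grows quadratically:

```python
import numpy as np

x = np.array([2, 3, 3])  # X coordinates of A, B, C
y = np.array([3, 4, 3])  # Y coordinates of A, B, C

# Indices of the strict upper triangle (i < j), ordered row by row:
# (0, 1), (0, 2), (1, 2)  ->  A->B, A->C, B->C
i, j = np.triu_indices(len(x), k=1)

# One vectorized call: sqrt(dx**2 + dy**2) for every pair
dists = np.hypot(x[i] - x[j], y[i] - y[j])
print(np.round(dists, 2))
```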

Answer:

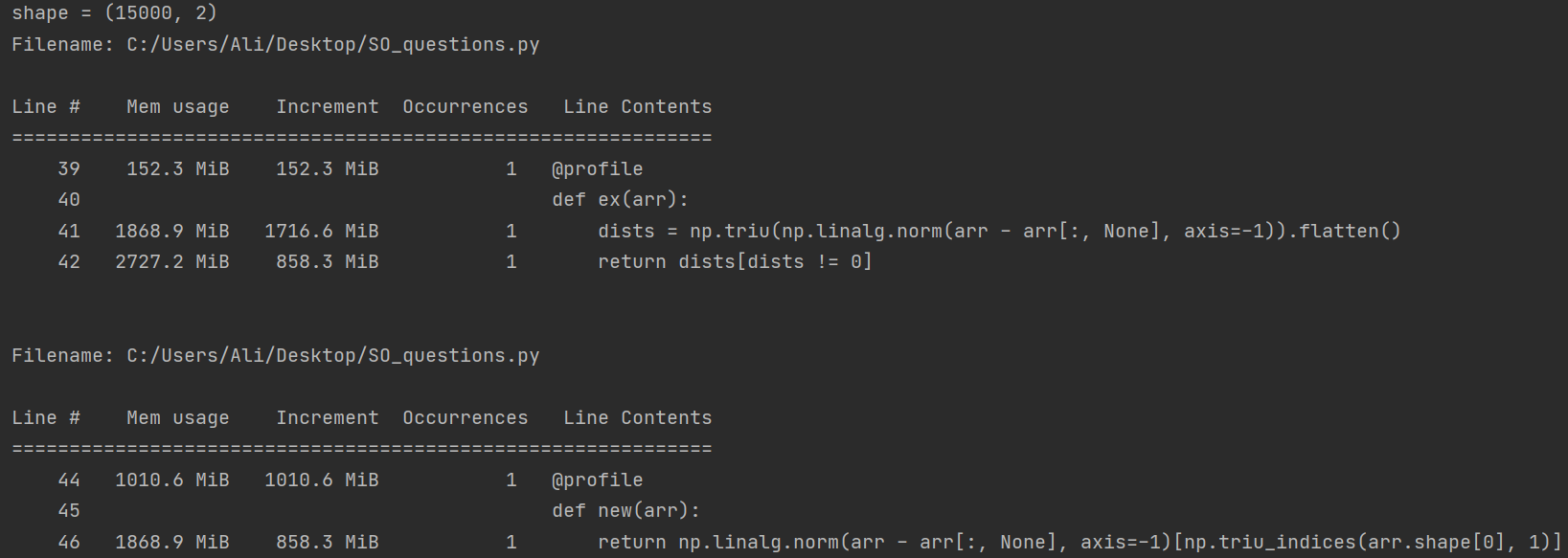

Here's a NumPy-only solution (fair warning: it builds the full N x N distance matrix, so it needs far more memory than pdist, which only stores the N(N-1)/2 pairwise distances):

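```python
import numpy as np

# arr has shape (N, 2); broadcasting builds the (N, N) distance matrix, triu zeroes everything below the diagonal
dists = np.triu(np.linalg.norm(arr - arr[:, None], axis=-1)).flatten()
# Drop the zeros (diagonal and lower triangle); caveat: this also drops zero distances between duplicate points
dists = dists[dists != 0]
```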
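A variant of the same idea that avoids the `dists != 0` filter (which also discards genuine zero distances between duplicate points) is to index the full matrix with `np.triu_indices`, which returns the pairs i < j in the same row-by-row order as `pdist`. A sketch, assuming `arr` has shape (N, 2); it still builds the full N x N matrix, so the memory warning above still applies:

```python
import numpy as np

arr = np.array([[2, 3], [3, 4], [3, 3], [2, 3]])  # the last point duplicates A

# Full N x N distance matrix via broadcasting
full = np.linalg.norm(arr - arr[:, None], axis=-1)

# Strict upper triangle (i < j), row by row: A->B, A->C, A->D, B->C, B->D, C->D
rows, cols = np.triu_indices(len(arr), k=1)
dists = full[rows, cols]

# (i, j) labels so each distance can be traced back to its pair
pairs = list(zip(rows.tolist(), cols.tolist()))
```

Here the A->D distance of 0 is kept as the third entry, whereas the filter-based version returns only 5 values for this input.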

Demo:

```
In [4]: arr = np.array([[2, 3], [3, 4], [3, 3], [5, 2], [4, 5]])

In [5]: pdist(arr)
Out[5]:
array([1.41421356, 1.        , 3.16227766, 2.82842712, 1.        ,
       2.82842712, 1.41421356, 2.23606798, 2.23606798, 3.16227766])

In [6]: dists = np.triu(np.linalg.norm(arr - arr[:, None], axis=-1)).flatten()

In [7]: dists = dists[dists != 0]

In [8]: dists
Out[8]:
array([1.41421356, 1.        , 3.16227766, 2.82842712, 1.        ,
       2.82842712, 1.41421356, 2.23606798, 2.23606798, 3.16227766])
```

Timings (with the solution above wrapped in a function called triu):

```
In [9]: %timeit pdist(arr)
7.27 µs ± 738 ns per loop (mean ± std. dev. of 7 runs, 100000 loops each)

In [10]: %timeit triu(arr)
25.5 µs ± 4.58 µs per loop (mean ± std. dev. of 7 runs, 10000 loops each)
```
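If the N x N intermediate does not fit in memory, a middle ground between the fully broadcast version and a pure-Python double loop is to loop over rows only: for each point i, compute the distances to all later points j > i in one vectorized step, and write them into a preallocated output. Concatenated, the rows come out in the same order as `pdist`. A sketch; the function name `pairwise_rowwise` is hypothetical, and no timings are claimed for it:

```python
import numpy as np

def pairwise_rowwise(arr):
    """Condensed pairwise distances (i < j, row by row) without an N x N temporary."""
    arr = np.asarray(arr, dtype=float)
    n = len(arr)
    out = np.empty(n * (n - 1) // 2)
    start = 0
    for i in range(n - 1):
        # Distances from point i to every later point j > i, in one vectorized step
        row = np.linalg.norm(arr[i + 1:] - arr[i], axis=1)
        out[start:start + len(row)] = row
        start += len(row)
    return out

arr = np.array([[2, 3], [3, 4], [3, 3], [5, 2], [4, 5]])
print(pairwise_rowwise(arr))
```

This keeps the Python-level loop to N - 1 iterations, each of which is vectorized, so the extra memory per step is proportional to N rather than N².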

Answer:

If you can use it, SciPy has a function for this:

```
In [2]: from scipy.spatial.distance import pdist

In [3]: pdist(arr)
Out[3]: array([1.41421356, 1.        , 1.        ])
```
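`pdist` returns a "condensed" vector in exactly the A->B, A->C, B->C order from the question. To track which entry belongs to which pair, SciPy's `pdist` documentation gives the position of pair i < j among m points as `m * i + j - ((i + 2) * (i + 1)) // 2`. A NumPy-only sketch of that lookup; the helper name `condensed_index` is hypothetical, and the distances are built with `np.triu_indices` instead of `pdist` so the snippet runs without SciPy:

```python
import numpy as np

def condensed_index(i, j, m):
    """Position of the pair (i, j), i != j, in a condensed vector of m points."""
    if i == j:
        raise ValueError("a point has no entry for its distance to itself")
    if i > j:
        i, j = j, i
    return m * i + j - ((i + 2) * (i + 1)) // 2

arr = np.array([[2, 3], [3, 4], [3, 3], [5, 2], [4, 5]])
m = len(arr)
rows, cols = np.triu_indices(m, k=1)
dists = np.linalg.norm(arr[rows] - arr[cols], axis=1)  # same order as pdist(arr)

# Distance between point 2 and point 3
print(dists[condensed_index(2, 3, m)])
```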

Answer: