I'm new to Kubernetes and am running into a weird issue with my NGINX Ingress router. My cluster runs locally on a Raspberry Pi using MicroK8s and has 4 different deployments. The cluster uses an Ingress router to route requests to the UI and the API.

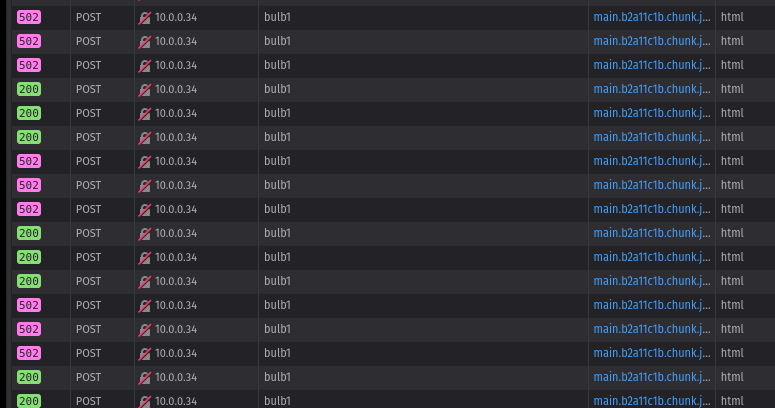

In short, calls from the UI to the backend intermittently fail with 502 errors. By intermittent I mean that for every 3 successful POSTs, 3 others fail with a 502, regardless of how quickly the requests are sent.

I've applied the following Ingress configuration to my cluster:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-router
  annotations:
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/cors-allow-methods: "PUT, GET, POST, OPTIONS"
    nginx.ingress.kubernetes.io/cors-allow-credentials: "true"
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ui
            port:
              number: 80
  - http:
      paths:
      - path: /lighting/.*
        pathType: Prefix
        backend:
          service:
            name: api
            port:
              number: 8000
```

And the deployments for the UI and API are as follows:

- UI:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ui
spec:
  selector:
    matchLabels:
      app: iot-control-center
  replicas: 3
  template:
    metadata:
      labels:
        app: iot-control-center
    spec:
      containers:
      - name: ui-container
        image: canadrian72/iot-control-center:ui
        imagePullPolicy: Always
        ports:
        - containerPort: 80
```

- API:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
spec:
  selector:
    matchLabels:
      app: iot-control-center
  replicas: 3
  template:
    metadata:
      labels:
        app: iot-control-center
    spec:
      containers:
      - name: api-container
        image: canadrian72/iot-control-center:api
        imagePullPolicy: Always
        ports:
        - containerPort: 8000
        - containerPort: 1883
```

After looking around online, I found this Reddit post, which most closely resembles my issue, although I'm not sure where to go from here. My hunch was a load issue in either the pods or the ingress controller, so I scaled each deployment to 3 replicas (it was 1 before), but this only reduced the frequency of the 502 errors.

Any sort of insight or guidance would be greatly appreciated! Thanks.

EDIT: The split between 200 and 502 responses isn't strictly one-to-one; it's fairly random, but roughly even overall. I should also add that I had this same setup running with LoadBalancer Services (MetalLB), and everything worked like a charm except for CORS, which is why I switched to Ingress to handle CORS.

Answer:

Leaving this up in case anyone has a similar issue. My problem was that in the Ingress configuration, the backends referenced the deployments themselves instead of a NodePort/ClusterIP Service.

Instead, I created two ClusterIP Services for the UI and API, as follows:

- UI

```yaml
apiVersion: v1
kind: Service
metadata:
  name: ui-cluster-ip
spec:
  type: ClusterIP
  selector:
    app: iot-control-center
    svc: ui
  ports:
  - port: 80
```

- API

```yaml
apiVersion: v1
kind: Service
metadata:
  name: lighting-api-cluster-ip
spec:
  type: ClusterIP
  selector:
    app: iot-control-center
    svc: lighting-api
  ports:
  - port: 8000
```
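A Service selector only matches pods that carry every listed label, so these two Services need pods labelled with both `app: iot-control-center` and the matching `svc` value. The Deployments posted in the question only set `app: iot-control-center`, so the following is a sketch, assuming the pod templates were updated along these lines (the answer doesn't show the updated Deployments):

```yaml
# Sketch (assumed, not shown in the answer): Deployments from the question with
# an extra "svc" label so each ClusterIP Service selects only its own pods.
apiVersion: apps/v1
kind: Deployment
metadata:
  name: ui
spec:
  replicas: 3
  selector:
    matchLabels:
      app: iot-control-center
      svc: ui              # makes this selector distinct from the api Deployment's
  template:
    metadata:
      labels:
        app: iot-control-center
        svc: ui            # matched by the ui-cluster-ip Service
    spec:
      containers:
      - name: ui-container
        image: canadrian72/iot-control-center:ui
        imagePullPolicy: Always
        ports:
        - containerPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: api
spec:
  replicas: 3
  selector:
    matchLabels:
      app: iot-control-center
      svc: lighting-api
  template:
    metadata:
      labels:
        app: iot-control-center
        svc: lighting-api  # matched by the lighting-api-cluster-ip Service
    spec:
      containers:
      - name: api-container
        image: canadrian72/iot-control-center:api
        imagePullPolicy: Always
        ports:
        - containerPort: 8000
        - containerPort: 1883
```

A `Deployment`'s `spec.selector` is immutable in `apps/v1`, so changing `matchLabels` on an existing Deployment means deleting and re-creating it; adding the label only to the pod template would also satisfy the Services, but the two Deployments would then keep overlapping selectors.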

And then referenced these services from the ingress yaml as follows:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-router
  annotations:
    kubernetes.io/ingress.class: public
    nginx.ingress.kubernetes.io/configuration-snippet: |
      more_set_headers "Access-Control-Allow-Origin: $http_origin";
    nginx.ingress.kubernetes.io/cors-allow-credentials: "true"
    nginx.ingress.kubernetes.io/cors-allow-methods: "PUT, GET, POST, OPTIONS, DELETE, PATCH"
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  rules:
  - http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ui-cluster-ip
            port:
              number: 80
  - http:
      paths:
      - path: /lighting/.*
        pathType: Prefix
        backend:
          service:
            name: lighting-api-cluster-ip
            port:
              number: 8000
```
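As a side note, not something the fix itself needed: the same routing can be written as a single rule with the `spec.ingressClassName` field, which replaces the deprecated `kubernetes.io/ingress.class` annotation (`public` is the class name the answer already uses). The sketch below also switches the regex path to `pathType: ImplementationSpecific`, on the assumption that this is what ingress-nginx expects for regex paths, as in its documentation's regex examples; recent ingress-nginx releases may reject regex characters in a `Prefix` path.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-router
  annotations:
    nginx.ingress.kubernetes.io/configuration-snippet: |
      more_set_headers "Access-Control-Allow-Origin: $http_origin";
    nginx.ingress.kubernetes.io/cors-allow-credentials: "true"
    nginx.ingress.kubernetes.io/cors-allow-methods: "PUT, GET, POST, OPTIONS, DELETE, PATCH"
    nginx.ingress.kubernetes.io/enable-cors: "true"
    nginx.ingress.kubernetes.io/use-regex: "true"
spec:
  # Field form of the deprecated kubernetes.io/ingress.class annotation.
  ingressClassName: public
  rules:
  - http:
      paths:
      # Regex path; ImplementationSpecific leaves its interpretation to the controller.
      - path: /lighting/.*
        pathType: ImplementationSpecific
        backend:
          service:
            name: lighting-api-cluster-ip
            port:
              number: 8000
      - path: /
        pathType: Prefix
        backend:
          service:
            name: ui-cluster-ip
            port:
              number: 80
```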

I'm not sure why the original configuration from my question resulted in some 502 responses and some 200s (in my mind every request should have failed), but in any case this solution works.
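One plausible explanation for the mix, offered as an assumption since the original `ui` and `api` Service manifests were never posted: if Services with those names were still around from the earlier MetalLB LoadBalancer setup and selected only `app: iot-control-center`, each of them matched the pods of *both* Deployments, because both pod templates carry that same single label. ingress-nginx balances requests across a Service's endpoints, so roughly half of the API calls would be sent to UI pods, where presumably nothing listens on port 8000, and nginx reports a refused upstream connection as a 502. A hypothetical Service of that shape:

```yaml
# Hypothetical: roughly what a leftover "api" Service from the MetalLB setup
# might have looked like (assumed; not shown in the question).
apiVersion: v1
kind: Service
metadata:
  name: api
spec:
  type: LoadBalancer
  selector:
    # Both the ui and api Deployments label their pods with only this label,
    # so this selector matches all six pods (3 UI + 3 API).
    app: iot-control-center
  ports:
  - port: 8000
    # A request forwarded to a UI pod on 8000 finds nothing listening,
    # which the ingress controller surfaces as a 502.
    targetPort: 8000
```

The `svc` labels in the answer's ClusterIP Services remove that overlap, since each selector then matches only one Deployment's pods.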