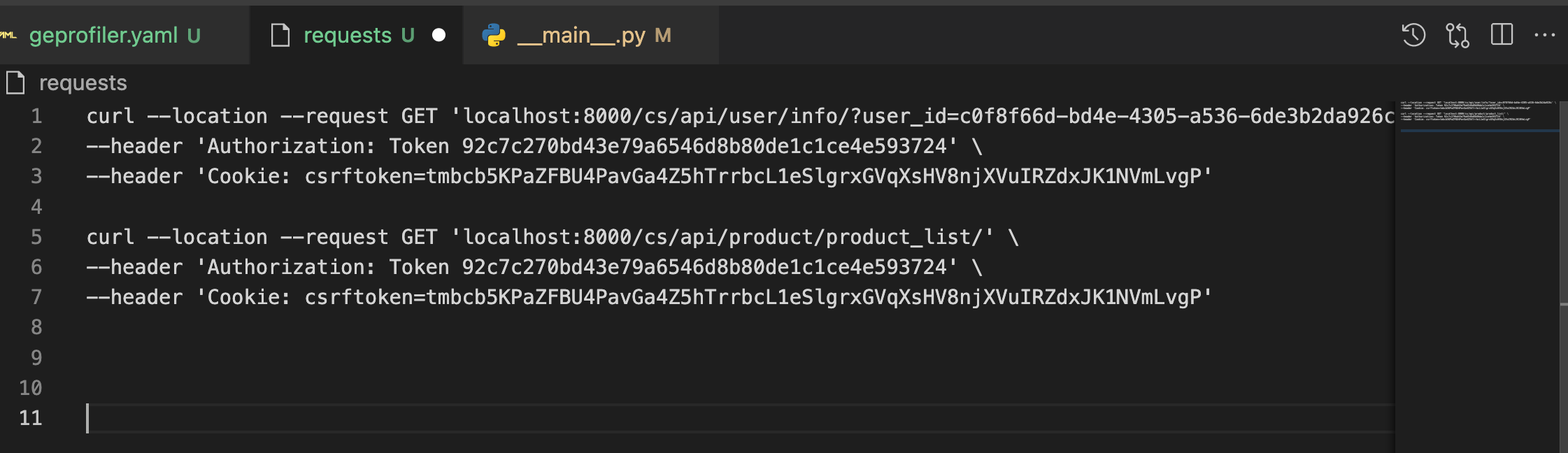

I'm writing a Python script that reads a file containing multiple curl commands and then makes the corresponding HTTP requests to get their JSON responses.

I have already read the file and saved the commands to a list of curl strings, but I'm stuck on using that list to make the HTTP requests. Is there a Python library with a function that takes a curl command string as a parameter and returns the HTTP response?
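The file-reading step could look roughly like the sketch below. It assumes (hypothetically) that the file holds one command per line, and that a command may be continued onto the next line with a trailing backslash, as commands copied from browser developer tools often are. The name `read_curl_commands` is illustrative, not from any library.

```python
from typing import Iterable, List


def read_curl_commands(lines: Iterable[str]) -> List[str]:
    """Group raw lines into complete curl command strings (hypothetical helper)."""
    commands: List[str] = []
    current = ""
    for raw in lines:
        line = raw.strip()
        if line.endswith("\\"):
            # Line continuation: drop the trailing backslash and keep accumulating
            current += line[:-1].strip() + " "
            continue
        current += line
        if current.strip():
            commands.append(current.strip())
        current = ""  # blank lines between commands are skipped
    if current.strip():
        # The file ended right after a continuation line
        commands.append(current.strip())
    return commands
```

With a real file this would be called as `with open("commands.txt") as f: curls = read_curl_commands(f)`, where `commands.txt` is a placeholder name. Because leading whitespace is stripped from each line, the sketch assumes no quoted argument spans several lines.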

One solution I found on Stack Overflow is to use `subprocess` to execute the command, but its drawback is that curl must be installed on the machine that runs the script:

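```python
import json
import shlex
import subprocess


def call_curl(curl):
    # Split the curl command string into an argument list, respecting quotes
    args = shlex.split(curl)
    # Run curl directly (no shell) and capture its output
    process = subprocess.Popen(args, shell=False,
                               stdout=subprocess.PIPE, stderr=subprocess.PIPE)
    stdout, stderr = process.communicate()
    # Parse the response body as JSON
    return json.loads(stdout.decode('utf-8'))
```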
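If you keep the `subprocess` route, a slightly more defensive variant (the name `call_curl_checked` is hypothetical) uses `subprocess.run` and checks up front that `curl` is actually on the `PATH` via `shutil.which`, so a missing binary produces a clear error instead of a `FileNotFoundError`. A sketch, assuming the response body is JSON:

```python
import json
import shlex
import shutil
import subprocess


def call_curl_checked(curl: str):
    """Run one curl command string and decode its stdout as JSON (hypothetical helper)."""
    args = shlex.split(curl)
    if not args or args[0] != "curl":
        raise ValueError("expected a command that starts with 'curl'")
    # Fail early with a clear message if curl is not installed
    if shutil.which("curl") is None:
        raise RuntimeError("curl is not installed or not on PATH")
    # check=True raises CalledProcessError if curl exits with a non-zero status
    result = subprocess.run(args, capture_output=True, check=True)
    return json.loads(result.stdout)
```

Note that curl exits with status 0 even when the server answers with an HTTP error such as 404 or 500, so `check=True` only catches transport failures (DNS errors, refused connections, and so on) unless the command itself includes `--fail`.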

Answer:

If you're using Python, you don't need cURL at all: there are HTTP client libraries for Python, such as Requests.
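Requests is a third-party package. If the goal is to avoid installing anything at all, the standard library's `urllib.request` can also send the request. This is a swapped-in alternative to Requests, and `fetch_json` is a hypothetical helper name; a minimal sketch, assuming the response body is JSON:

```python
import json
import urllib.request
from typing import Any, Dict, Optional


def fetch_json(url: str, method: str = "GET",
               headers: Optional[Dict[str, str]] = None,
               data: Optional[bytes] = None, timeout: float = 10.0) -> Any:
    """Send one HTTP request with urllib and decode the JSON body (hypothetical helper)."""
    req = urllib.request.Request(url, data=data, headers=headers or {}, method=method)
    # For http/https URLs, urlopen raises urllib.error.HTTPError on 4xx/5xx responses
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read())
```

For example, `curl -H 'Accept: application/json' https://example.com/api` corresponds roughly to `fetch_json("https://example.com/api", headers={"Accept": "application/json"})`, where the URL is a placeholder.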

Answer:

Actually, I agree with @ndc85430: there is no need to use curl. You can adapt this sample code:

```python
import time

import requests as req

HEADERS = {'User-Agent': 'Mozilla/5.0',
           'Cookie': 'XXXXXXXXXX'}
GET_APIS_NO_PARAMS = ["/v2/sample1", "/v2/sample2"]
MAIN_URL = 'http://localhost:8000'

# Reuse one session so connections and cookies are shared across requests
session = req.Session()
for api in GET_APIS_NO_PARAMS:
    resp = session.get(MAIN_URL + api, headers=HEADERS)
    print(resp.text, resp.status_code)
    time.sleep(2)  # pause between requests
```

Answer:

Try using uncurl to parse the curl command and then Requests to fetch the resource:

```python
import uncurl
import requests

# Parse the curl command into method, URL, headers, cookies, body, etc.
ctx = uncurl.parse_context("curl 'https://pypi.python.org/pypi/uncurl' -H 'Accept-Encoding: gzip,deflate,sdch'")

# Replay the parsed request with Requests
r = requests.request(ctx.method.upper(), ctx.url, data=ctx.data, cookies=ctx.cookies,
                     headers=ctx.headers, auth=ctx.auth, verify=(not ctx.insecure))

print(r.status_code)
```

See https://docs.python-requests.org/en/latest/user/advanced/#custom-verbs and https://github.com/spulec/uncurl/blob/master/uncurl/api.py#L107 for an idea of how the code works.
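To give an idea of what a parser like uncurl does internally, here is a minimal standard-library sketch that handles only a few common flags (`-X`, `-H`, `-d`/`--data-raw`/`--data-binary`, `--compressed`, `-k`). `parse_curl` and `CurlRequest` are hypothetical names, not uncurl's API, and any other flag makes `argparse` exit with an error.

```python
import argparse
import shlex
from typing import Dict, NamedTuple, Optional


class CurlRequest(NamedTuple):
    method: str
    url: str
    headers: Dict[str, str]
    data: Optional[str]


def _build_parser() -> argparse.ArgumentParser:
    parser = argparse.ArgumentParser(prog="curl", add_help=False)
    parser.add_argument("url")
    parser.add_argument("-X", "--request", dest="method")
    parser.add_argument("-H", "--header", action="append", default=[])
    # Only the last body flag is kept (real curl joins multiple -d values with '&')
    parser.add_argument("-d", "--data", "--data-raw", "--data-binary", dest="data")
    # Accepted but ignored in this sketch
    parser.add_argument("--compressed", action="store_true")
    parser.add_argument("-k", "--insecure", action="store_true")
    return parser


def parse_curl(command: str) -> CurlRequest:
    """Turn a simple curl command string into its request parts (hypothetical helper)."""
    tokens = shlex.split(command)
    if not tokens or tokens[0] != "curl":
        raise ValueError("not a curl command")
    args = _build_parser().parse_args(tokens[1:])
    headers: Dict[str, str] = {}
    for raw in args.header:
        name, _, value = raw.partition(":")
        headers[name.strip()] = value.strip()
    # curl switches to POST when a body is given without an explicit -X
    method = args.method or ("POST" if args.data is not None else "GET")
    return CurlRequest(method.upper(), args.url, headers, args.data)
```

The result maps directly onto `requests.request(req.method, req.url, headers=req.headers, data=req.data)`.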