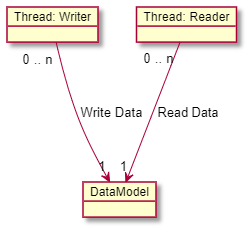

I have a scenario, where I have a shared data model between several threads. Some threads are going to write to that data model cyclically and other threads are reading from that data model cyclically. But it is guaranteed that writer threads are only writing and reader threads are only reading.

Now the scenario is, that reading data shall have higher priority than writing data due to real time constraints on the reader side. So it is not acceptable that e.g. a writer is locking the data for a too long time. But a lock with a guaranteed locking time would be acceptable (e.g. it would be acceptable for the reader to wait max 1 ms until the data is synchronized and available).

So I'm wondering how this is achievable, because the "traditional" locking mechanisms (e.g. std::lock) wouldn't give those real time guarantees.

CodePudding user response:

Normally in such a scenario you use a reader-writer-lock. This allows either a read by all readers in parallel or a write by a single writer.

But that does nothing to stop a writer from holding the lock for minutes if it so desires. Forcing the writer out of the lock is probably also not a good idea. The object is probably in some inconsistent state mid changed.

There is another synchronization method called read-copy-update that might help. This allows writers to modify element without being blocked by readers. The drawback is that you might get some readers still reading the old data and others reading the new data for some time.

It also might be problematic with multiple writers if they try to change the same member. The slower writer might have computed all the needed updates only to notice some other thread changes the object. It then has to start over wasting all the time it already spend.

Note: copying the element can be done in constant time, certainly under 1ms. So you can guarantee readers are never blocked for long. By releasing the write lock first you guarantee readers to read between any 2 writes, assuming the RW lock is designed with the same principle.

So I would suggest another solution I call write-intent-locking:

You start with a RW lock but add a lock to handle write-intent. Any writer can acquire the write-intent lock at any time, but only one of them, it's exclusive. Once a write holds the write-intent lock it copies the element and starts modifying the copy. It can take as long as it wants to do that as it's not blocking any readers. It does block other writers though.

When all the modifications are done the writer acquires the write lock and then quickly copies, moves or replaces the element with the prepared copy. It then releases the write and write-intent lock, unblocking both the readers and writers that want to access the same element.

CodePudding user response:

Well, you've got readers, and you've got writers, and you need a lock, so.... how about a readers/writer lock?

The reason I mention that up-front is because (a) you might not be aware of it, but more importantly (b) there's no standard RW lock in C (EDIT: my mistake, one was added in C 14), so your thinking about this is perhaps being done in the context of std::mutex. Once you've decided to go with a RW lock, you can benefit from other people's thinking about those locks.

In particular, there's a number of different options for prioritizing threads contending over RW locks. With one option, a thread acquiring a write lock waits until all current reader threads drop the lock, but readers who start waiting after the writer don't get the lock until the writer's done with it.

With that strategy, as long as the writer thread releases and reacquires the lock after each transaction, and as long as the writer completes each transaction within your 1 ms target, readers don't starve.

And if your writer can't promise that, then there is zero alternative but to redesign the writer: either doing more processing before acquiring the lock, or splitting a transaction into multiple pieces where it's safe to drop the lock between each.

If, on the other hand, your writer's transactions take much less than 1 ms, then you might consider skipping the release/reacquire between each one if less than 1 ms has elapsed (purely to reduce the processing overhead of doing so).... but I wouldn't advise it. Adding complexity and special cases and (shudder) wall clock time to your implementation is rarely the most practical way to maximize performance, and rapidly increases the risk of bugs. A simple multithreading system is a reliable multithreading system.

CodePudding user response:

The way I would approach this is to have two identical copies of the dataset; call them copy A and copy B.

Readers always read from copy B, being careful to lock a reader/writer lock in read-only mode before accessing it.

When a writer-thread wants to update the dataset, it locks copy A (using a regular mutex) and updates it. The writer-thread can take as long as it likes to do this, because no readers are using copy A.

When the writer-thread is done updating copy A, it locks the reader/writer lock (in exclusive/writer-lock mode) and swaps dataset A with dataset B. (This swap should be done by exchanging pointers, and is therefore O(1) fast).

The writer-thread then unlocks the reader/writer-lock (so that any waiting reader-threads can now access the updated data-set), and then updates the other data-set the same way it updated the first data-set. This can also take as long as the writer-thread likes, since no reader-threads are waiting on this dataset anymore.

Finally the writer-thread unlocks the regular mutex, and we're done.