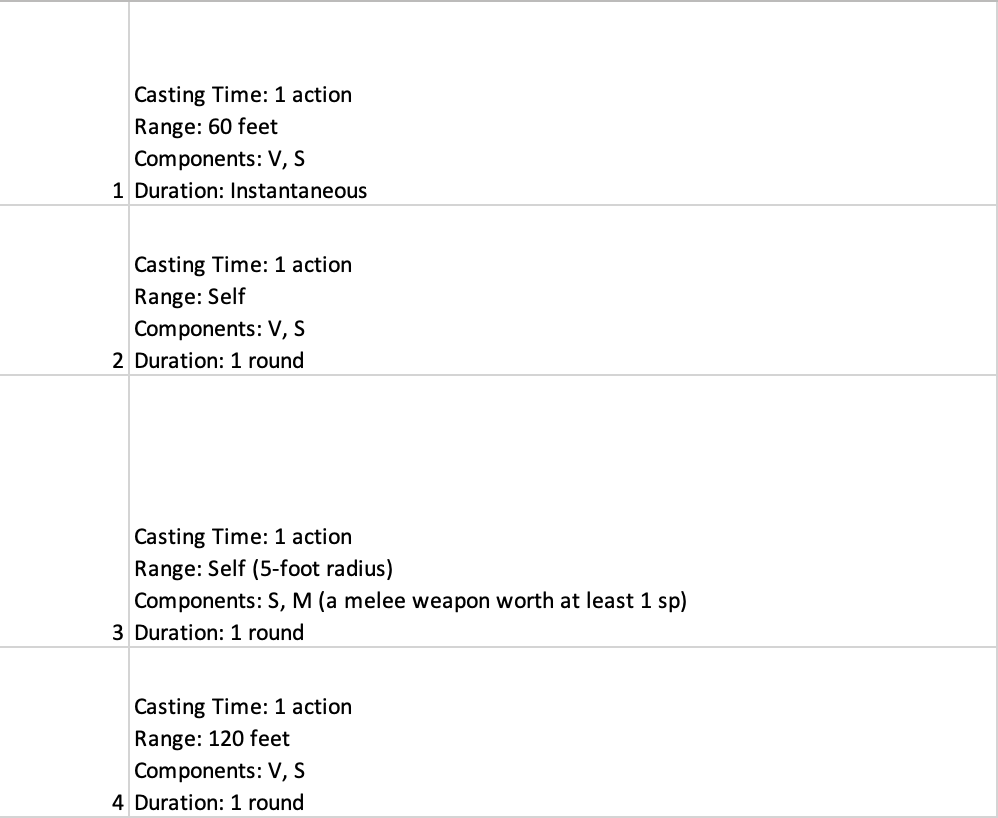

I'm trying to load a .csv file into my MySQL table, but one of the columns has data spanning multiple lines, and for some reason MySQL doesn't handle that. I've attached a picture of what my data looks like, and I'm using the following command to load the data:

```sql
load data local infile 'Users/.../info.csv'
into table spell_info fields terminated by ','
enclosed by ' ' lines terminated by '\n';
```

For some reason, it only loads the first row; it seems to take just the first item. The response it gives me is:

```
1 row(s) affected, 1 warning(s): 1265 Data truncated for column 'spellInfo' at row 1 Records: 1 Deleted: 0 Skipped: 0 Warnings: 1
```

Any help is appreciated. I'm not sure whether it's the way the information in the CSV file is laid out, my command, or some combination of the two.

Answer:

If the file to be imported uses more than one line per record, there are many ways to import the data correctly. I'll describe two of them.

Variant 1.

Load the file into a temporary table with the following structure:

```sql
CREATE TEMPORARY TABLE tmp (
    id BIGINT UNSIGNED AUTO_INCREMENT PRIMARY KEY,
    raw_data TEXT
);
```

The file is loaded into this table with each whole line imported into the single column, like this:

```sql
LOAD DATA INFILE 'filename.ext'
INTO TABLE tmp
FIELDS TERMINATED BY ''   -- or use a terminating character that never occurs in the file
LINES TERMINATED BY '\n'  -- use the file's actual line terminator (e.g. '\r\n')
(raw_data);
```
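Because the question loads a file from the client machine, the same statement can be issued with `LOCAL`. This is a sketch only: it assumes `local_infile` is enabled on the server and that the client allows it (for the `mysql` command-line client, `--local-infile=1`). The tab used as field terminator is an assumption, chosen only because it is hoped to be absent from the file; the path is the question's own elided placeholder.

```sql
-- Client-side variant of the load above (path left as elided in the question).
LOAD DATA LOCAL INFILE 'Users/.../info.csv'
INTO TABLE tmp
FIELDS TERMINATED BY '\t'  -- assumption: no tabs occur in the file
LINES TERMINATED BY '\n'   -- '\r\n' if the file has Windows line endings
(raw_data);
```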

The AUTO_INCREMENT column numbers the rows in loading order, i.e. by their position in the file.
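A quick way to inspect that numbering, assuming (hypothetically) that every record spans exactly 3 lines. For a freshly created temporary table filled by a single `LOAD DATA` statement the ids should start at 1 and be consecutive, which is what this arithmetic relies on:

```sql
SET @rows_per_record = 3;  -- hypothetical: every record spans exactly 3 lines

SELECT id,
       (id - 1) DIV @rows_per_record AS record_no,  -- 0, 0, 0, 1, 1, 1, ...
       (id - 1) MOD @rows_per_record AS line_no,    -- 0, 1, 2, 0, 1, 2, ...
       raw_data
FROM tmp
ORDER BY id
LIMIT 20;
```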

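After the data is loaded, you can process it and save it into the working table. If the number of lines per record is fixed, group the rows of the temporary table by (id - 1) DIV @rows_per_record (one group per record) and take each column from the appropriate line of the group, identified by (id - 1) MOD @rows_per_record. Alternatively, join the table to itself once per line of a record, on t2.id = t1.id + 1, t3.id = t1.id + 2, and so on, with the condition tN.id MOD @rows_per_record = 0 so that t1 is always the first line of a record. Note that MySQL does not allow a TEMPORARY table to be referenced more than once in the same query, so for the self-join approach tmp has to be created as a regular table. If a record may span a variable number of lines, a recursive CTE or an iterative stored procedure may be the only option.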
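A minimal sketch of the grouping approach, assuming (hypothetically) 3 lines per record and a target table `working_table` whose columns `col_a`, `col_b`, `col_c` come from lines 1, 2 and 3; these names are illustrative, not the real `spell_info` schema. The second statement shows a different technique from the recursive CTE mentioned above, usable on MySQL 8.0+ when the first line of each record can be recognised by its content: a window-function running sum that increments the record number at each record start. The "starts with a digit" rule is purely a hypothetical placeholder.

```sql
-- Fixed number of lines per record (hypothetical: 3).
-- working_table and its columns are hypothetical; replace with the real columns.
CREATE TABLE working_table (
  record_no BIGINT UNSIGNED PRIMARY KEY,
  col_a     TEXT,  -- from line 1 of each record
  col_b     TEXT,  -- from line 2
  col_c     TEXT   -- from line 3
);

SET @rows_per_record = 3;

INSERT INTO working_table (record_no, col_a, col_b, col_c)
SELECT (id - 1) DIV @rows_per_record AS record_no,  -- one group per record
       MAX(CASE WHEN (id - 1) MOD @rows_per_record = 0 THEN raw_data END),
       MAX(CASE WHEN (id - 1) MOD @rows_per_record = 1 THEN raw_data END),
       MAX(CASE WHEN (id - 1) MOD @rows_per_record = 2 THEN raw_data END)
FROM tmp
GROUP BY record_no;

-- Variable number of lines per record (MySQL 8.0+ window functions).
SET SESSION group_concat_max_len = 1000000;  -- the 1024-byte default would truncate records

SELECT record_no,
       GROUP_CONCAT(raw_data ORDER BY id SEPARATOR '\n') AS record_text
FROM (
  SELECT id,
         raw_data,
         -- Hypothetical rule: a line starting with a digit begins a new record.
         SUM(raw_data REGEXP '^[0-9]') OVER (ORDER BY id) AS record_no
  FROM tmp
) AS numbered
GROUP BY record_no
ORDER BY record_no;
```

Each query references `tmp` only once, so both work with the TEMPORARY table as created above.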

Variant 2.

Use LOAD DATA with input preprocessing in the same way, but load each whole line into a user-defined variable. In the SET clause, concatenate the loaded value onto the text accumulated (in another variable) from the previous lines and check whether the record is complete. If it is, extract the values into the columns and clear the accumulating variable; if not, fill the columns with dummy but valid values. After the file is loaded, simply delete the rows that hold the dummy values.
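A rough sketch of Variant 2 under several hypothetical assumptions: a staging table `spell_stage`, records of the form `name,"text"` where only the quoted text may span lines (so a record is complete once the collected text ends with a double quote), and no tab characters in the file. It relies on the SET assignments being evaluated in the order written, which MySQL does not formally guarantee for user variables, and assigning user variables inside expressions is deprecated in MySQL 8.0, so treat it as fragile:

```sql
-- Hypothetical staging table; adjust to the real columns.
CREATE TABLE spell_stage (
  id          BIGINT UNSIGNED AUTO_INCREMENT PRIMARY KEY,
  raw_record  TEXT,     -- text collected so far (handy for debugging)
  is_complete TINYINT,  -- 1 when raw_record holds a whole record
  spell_name  TEXT,     -- NULL placeholder on incomplete rows
  spell_text  TEXT
);

SET @acc = '', @done = 1;  -- @done = 1 so the first line starts a new record

LOAD DATA INFILE 'filename.ext'
INTO TABLE spell_stage
FIELDS TERMINATED BY '\t'  -- hypothetical: a character that never occurs in the file
LINES TERMINATED BY '\n'   -- use '\r\n' for Windows line endings
(@line)
SET
  -- Start a new record if the previous one was finished, otherwise append this line.
  raw_record  = (@acc := IF(@done, @line, CONCAT(@acc, '\n', @line))),
  -- Hypothetical completeness test: the quoted last field has been closed.
  is_complete = (@done := (@acc LIKE '%"')),
  -- Split complete records into columns; incomplete rows get NULL placeholders.
  spell_name  = IF(@done, SUBSTRING_INDEX(@acc, ',', 1), NULL),
  spell_text  = IF(@done, TRIM(BOTH '"' FROM SUBSTRING(@acc, LOCATE(',', @acc) + 1)), NULL);

-- Rows that only hold partial records are no longer needed.
DELETE FROM spell_stage WHERE is_complete = 0;
```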

If the file is not huge, you may instead load the whole file into one user-defined variable with the LOAD_FILE() function and then parse it into separate records and columns.
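A sketch of the first parsing step, splitting the file into numbered lines, assuming MySQL 8.0+ (for the recursive CTE) and a hypothetical server-side path. `LOAD_FILE()` reads a file on the server host, not the client, and returns NULL unless the user has the FILE privilege, the file is readable, it lies inside the `secure_file_priv` directory if that is set, and it is smaller than `max_allowed_packet`:

```sql
-- Hypothetical server-side path; convert the binary result to text.
SET @doc = CONVERT(LOAD_FILE('/path/on/server/filename.ext') USING utf8mb4);
SET @line_count = CHAR_LENGTH(@doc) - CHAR_LENGTH(REPLACE(@doc, '\n', '')) + 1;

-- The default limit of 1000 recursion levels would stop at about 1000 lines.
SET SESSION cte_max_recursion_depth = 1000000;

WITH RECURSIVE nums (n) AS (
  SELECT 1
  UNION ALL
  SELECT n + 1 FROM nums WHERE n < @line_count
)
SELECT n AS line_no,
       -- The n-th line: text after the (n-1)-th and before the n-th newline.
       -- Lines keep a trailing '\r' if the file has Windows line endings.
       SUBSTRING_INDEX(SUBSTRING_INDEX(@doc, '\n', n), '\n', -1) AS line
FROM nums;
```

The resulting `(line_no, line)` rows play the same role as `tmp` in Variant 1, so the same grouping can then be applied; a newline at the very end of the file produces one extra, empty last line.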