Have a simple program as shown below

import pyspark

builder = (

pyspark.sql.SparkSession.builder.appName("MyApp")

.config("spark.sql.extensions", "io.delta.sql.DeltaSparkSessionExtension")

.config(

"spark.sql.catalog.spark_catalog",

"org.apache.spark.sql.delta.catalog.DeltaCatalog",

)

)

spark = builder.getOrCreate()

spark._jsc.hadoopConfiguration().set(

"fs.gs.impl", "com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem"

)

spark._jsc.hadoopConfiguration().set(

"fs.AbstractFileSystem.gs.impl", "com.google.cloud.hadoop.fs.gcs.GoogleHadoopFS"

)

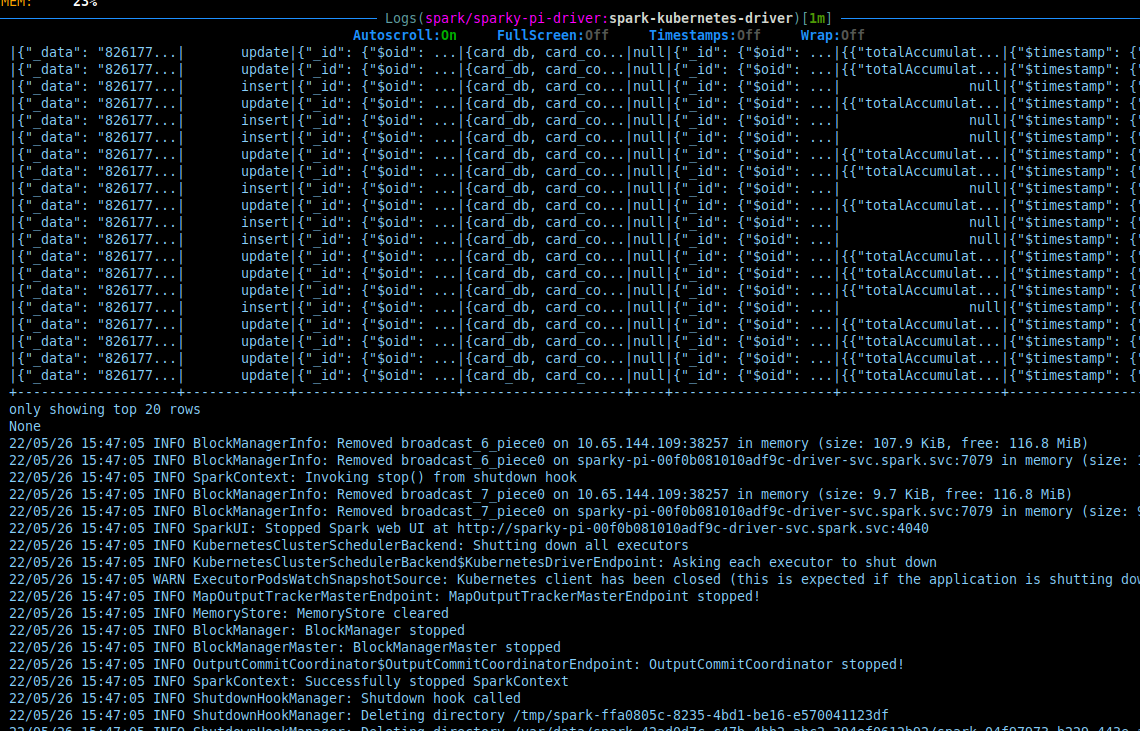

df = spark.read.format("delta").load(

"gs://org/delta/bronze/mongodb/registration/audits"

)

print(df.show())
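As an alternative to reaching into the private `spark._jsc` handle after the session already exists, the same Hadoop settings can be passed through the builder: Spark copies every `spark.hadoop.*` property into the Hadoop configuration. The sketch below assumes the Delta and GCS connector jars are already on the classpath (for example in `$SPARK_HOME/jars`); the helper names `delta_gcs_conf` and `build_session` are hypothetical.

```python
# Sketch: collect the Delta + GCS settings in one place and apply them
# through SparkSession.builder. Helper names are hypothetical.

GCS_FS = "com.google.cloud.hadoop.fs.gcs.GoogleHadoopFileSystem"
GCS_ABSTRACT_FS = "com.google.cloud.hadoop.fs.gcs.GoogleHadoopFS"


def delta_gcs_conf():
    """Return the Spark properties used for Delta tables on gs:// paths."""
    return {
        "spark.sql.extensions": "io.delta.sql.DeltaSparkSessionExtension",
        "spark.sql.catalog.spark_catalog": "org.apache.spark.sql.delta.catalog.DeltaCatalog",
        # spark.hadoop.* keys are forwarded to the Hadoop Configuration
        "spark.hadoop.fs.gs.impl": GCS_FS,
        "spark.hadoop.fs.AbstractFileSystem.gs.impl": GCS_ABSTRACT_FS,
    }


def build_session(app_name="MyApp"):
    # Imported lazily so delta_gcs_conf() can be used without pyspark installed.
    from pyspark.sql import SparkSession

    builder = SparkSession.builder.appName(app_name)
    for key, value in delta_gcs_conf().items():
        builder = builder.config(key, value)
    return builder.getOrCreate()
```

With this, `spark = build_session()` replaces both the builder block and the two `hadoopConfiguration().set(...)` calls; the `spark.read.format("delta")` line stays the same.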

This is packaged into a container image using the Dockerfile below:

```dockerfile
FROM varunmallya/spark-pi:3.2.1

USER root

# Put the GCS connector on Spark's classpath
COPY gcs-connector-hadoop2-latest.jar $SPARK_HOME/jars/

WORKDIR /app

COPY main.py .
```
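Since the setup relies on jars landing in `$SPARK_HOME/jars`, a quick check run inside the built image can confirm which ones are actually present. This is a sketch; the helper names and the glob patterns (including `delta-core_*.jar`, which this Dockerfile does not add) are assumptions to adjust to the exact jar names you ship.

```python
import glob
import os


def find_jars(pattern, spark_home=None):
    """List jar file names under <spark_home>/jars matching a glob pattern."""
    spark_home = spark_home or os.environ.get("SPARK_HOME", "")
    return sorted(
        os.path.basename(path)
        for path in glob.glob(os.path.join(spark_home, "jars", pattern))
    )


def missing_jars(patterns, spark_home=None):
    """Return the patterns that match no jar at all."""
    return [p for p in patterns if not find_jars(p, spark_home)]


if __name__ == "__main__":
    # Hypothetical patterns: the connector from the Dockerfile, plus Delta,
    # whose extension class must also be on the classpath.
    wanted = ["gcs-connector-*.jar", "delta-core_*.jar"]
    print("missing:", missing_jars(wanted) or "none")
```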

This app is then deployed as a SparkApplication on k8s using the
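For reference, a SparkApplication for an image like this could be described as follows. This sketch assumes the spark-on-k8s-operator `sparkoperator.k8s.io/v1beta2` CRD; the image name, namespace, service account and resource sizes are hypothetical placeholders. `main.py` is addressed with the `local://` scheme because the Dockerfile copies it to `/app` inside the image.

```python
import json


def spark_application(name, image, main_file="local:///app/main.py",
                      spark_version="3.2.1", namespace="default",
                      service_account="spark", executors=1):
    """Build a SparkApplication object (assumed v1beta2 CRD) as a plain dict.

    namespace, service_account and the resource sizes are hypothetical defaults.
    """
    return {
        "apiVersion": "sparkoperator.k8s.io/v1beta2",
        "kind": "SparkApplication",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "type": "Python",
            "pythonVersion": "3",
            "mode": "cluster",
            "image": image,
            "imagePullPolicy": "IfNotPresent",
            # local:// means the file is already inside the container image
            "mainApplicationFile": main_file,
            "sparkVersion": spark_version,
            "restartPolicy": {"type": "Never"},
            "driver": {"cores": 1, "memory": "1g", "serviceAccount": service_account},
            "executor": {"cores": 1, "instances": executors, "memory": "1g"},
        },
    }


if __name__ == "__main__":
    # Hypothetical image reference
    app = spark_application("my-app", "registry.example.com/my-delta-app:latest")
    print(json.dumps(app, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, which accepts JSON as well as YAML.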

I think the key here is the base image `datamechanics/spark:3.1.1-hadoop-3.2.0-java-8-scala-2.12-python-3.8-dm17`. Props to the folks who put it together!