I have a dataframe which contains life logging data gathered over several years from 44 unique individuals.

Int64Index: 77171 entries, 0 to 4279

Data columns (total 4 columns):

# Column Non-Null Count Dtype

--- ------ -------------- -----

0 start 77171 non-null datetime64[ns]

1 end 77171 non-null datetime64[ns]

2 labelName 77171 non-null category

3 id 77171 non-null int64

The start column contains granular datetimes of the format 2020-11-01 11:00:00, in intervals of 30 minutes. The labelName column has 14 different categories.

Categories (14, object): ['COOK', 'EAT', 'GO WALK', 'GO TO BATHROOM', ..., 'DRINK', 'WAKE UP', 'SLEEP', 'WATCH TV']

Here's a sample user's head, which is [2588 rows x 4 columns] and spans from 2020 to 2021. There are also gaps in the data, occasionally.

start end labelName id

0 2020-08-05 00:00:00 2020-08-05 00:30:00 GO TO BATHROOM 486

1 2020-08-05 06:00:00 2020-08-05 06:30:00 WAKE UP 486

2 2020-08-05 09:00:00 2020-08-05 09:30:00 COOK 486

3 2020-08-05 11:00:00 2020-08-05 11:30:00 EAT 486

4 2020-08-05 12:00:00 2020-08-05 12:30:00 EAT 486

.. ... ... ... ...

859 2021-03-10 12:30:00 2021-03-10 13:00:00 GO TO BATHROOM 486

861 2021-03-10 13:30:00 2021-03-10 14:00:00 GO TO BATHROOM 486

862 2021-03-10 18:30:00 2021-03-10 19:00:00 COOK 486

864 2021-03-11 08:00:00 2021-03-11 08:30:00 EAT 486

865 2021-03-11 12:30:00 2021-03-11 13:00:00 COOK 486

I want a sum of each unique labelNames per user per month, but I'm not sure how to do this.

I would first split the data frame by id, which is easy. But how do you split these start datetimes when it records every 30 minutes over several years of data— and then create 14 new columns which record the sums?

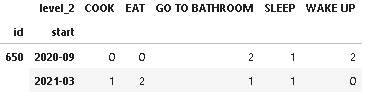

The final data frame might look something like this (with fake values):

| user | month | SLEEP | ... | WATCH TV |

|---|---|---|---|---|

| 486 | jun20 | 324 | ... | 23 |

| 486 | jul20 | 234 | ... | 12 |

The use-case for this data frame is training a few statistical and machine-learning models.

How do I achieve something like this?

CodePudding user response:

Because there are 30 minutes data you can count them by

Demonstration:

#Preparing Data

string = """start end labelName id

2020-09-21 14:30:00 2020-09-21 15:00:00 WAKE UP 650

2020-09-21 15:00:00 2020-09-21 15:30:00 GO TO BATHROOM 650

2020-09-21 15:30:00 2020-09-21 16:00:00 SLEEP 650

2020-09-29 17:00:00 2020-09-29 17:30:00 WAKE UP 650

2020-09-29 17:30:00 2020-09-29 18:00:00 GO TO BATHROOM 650

2021-03-11 13:00:00 2021-03-11 13:30:00 EAT 650

2021-03-11 14:30:00 2021-03-11 15:00:00 GO TO BATHROOM 650

2021-03-11 15:00:00 2021-03-11 15:30:00 COOK 650

2021-03-11 15:30:00 2021-03-11 16:00:00 EAT 650

2021-03-11 16:00:00 2021-03-11 16:30:00 SLEEP 650"""

data = [x.split(' ') for x in string.split('\n')]

df = pd.DataFrame(data[1:], columns = data[0])

df['start'] = pd.to_datetime(df['start'])

#Solution

from collections import Counter

df.groupby([df['start'].dt.to_period('M'), 'id'])['labelName'].apply(lambda x: Counter(x)).reset_index().pivot_table('labelName', ['id', 'start'], 'level_2', fill_value=0)