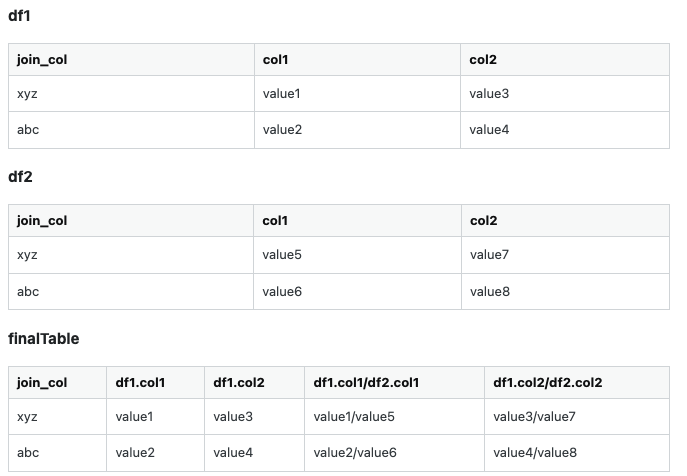

And most importantly number of col1, col2....are not known, there can be hundreds of columns. So we will have to do the join and division dynamically

How to this is spark scala?

CodePudding user response:

Dataset objects have the columns property that gives you an array of column names. It is easy to filter the columns of df1 and leave those that are present in df2, then use map to derive the needed columns:

val df1 = Seq(("xyz", 10.0, 12.0),

("abc", 42.0, 7.0)).toDF("join_col", "col1", "col2")

val df2 = Seq(("xyz", 7.0, 22.0, 11.0),

("abc", 11.0, 9.0, 42.0)).toDF("join_col", "col1", "col2", "col3")

// Common columns in both datasets

val cols = df1.columns.filter(df2.columns.toSet)

val join_col = cols(0)

val joined = df1.join(df2, df1(join_col) === df2(join_col))

// Columns from df1

val df1Cols = cols.map(df1(_))

// Division columns renamed to div_whatever

val divCols = cols.drop(1).map((name) => df1(name) / df2(name) as s"div_${name}")

val finalTable = joined.select((df1Cols divCols) :_*)

finalTable.show(false)

// -------- ---- ---- ------------------ ------------------

// |join_col|col1|col2|div_col1 |div_col2 |

// -------- ---- ---- ------------------ ------------------

// |xyz |10.0|12.0|1.4285714285714286|0.5454545454545454|

// |abc |42.0|7.0 |3.8181818181818183|0.7777777777777778|

// -------- ---- ---- ------------------ ------------------

This one assumes that the join column is the first column in df1.