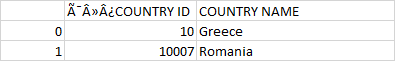

I am trying to remove a column and special characters from the dataframe shown below.

The dataframe is created with the following code:

dt = pd.read_csv(StringIO(response.text), delimiter="|", encoding='utf-8-sig')

The above produces the following output:

I need help with regex to remove the characters  and delete the first column.

For the regex, I have tried the following:

dt.withColumn('COUNTRY ID', regexp_replace('COUNTRY ID', @"[^0-9a-zA-Z_] "_ ""))

However, I'm getting a syntax error.

Any help much appreciated.

CodePudding user response:

If the position of the incoming column is fixed, you can use a regex to remove the extra characters from the column name, as shown below:

import re

colname = pdf.columns[0]

colt = re.sub(r"[^0-9a-zA-Z_\s]+", "", colname)  # strip anything that is not a letter, digit, underscore or whitespace

print(colname,colt)

pdf.rename(columns={colname:colt}, inplace = True)
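If more than one header might carry stray characters, the same idea can be applied to every column name at once. The following is only a minimal sketch: the sample payload, the garbled prefix on the first header, and the helper name `clean_name` are assumptions made up for illustration, not the asker's real data.

```python
import re
from io import StringIO

import pandas as pd

# Hypothetical sample: pipe-delimited text whose first header starts with
# stray characters (an assumption standing in for the real response).
raw = "ï»¿COUNTRY ID|COUNTRY NAME\n1|France\n2|Japan\n"
pdf = pd.read_csv(StringIO(raw), delimiter="|")


def clean_name(name):
    # Keep letters, digits, underscores and whitespace; drop everything else,
    # then trim any leading or trailing spaces.
    return re.sub(r"[^0-9a-zA-Z_\s]+", "", name).strip()


# rename() accepts a function and applies it to each column label.
pdf = pdf.rename(columns=clean_name)
print(list(pdf.columns))
```

Passing a function to `rename` avoids hard-coding the position of the affected column, which may help if the position is not actually fixed.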

To drop the index column, you can refer to this Stack Overflow answer.
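As a rough sketch of what dropping the first column can look like in pandas, there are two cases worth separating: the unwanted column is real data, or it is only the row index that pandas displays by default. The sample data and the names `trimmed` and `csv_text` below are hypothetical and used only for illustration.

```python
from io import StringIO

import pandas as pd

# Hypothetical sample with an unwanted leading data column named "ID".
raw = "ID|COUNTRY ID|COUNTRY NAME\n0|1|France\n1|2|Japan\n"
pdf = pd.read_csv(StringIO(raw), delimiter="|")

# Case 1: the first column is real data, so drop it by position.
trimmed = pdf.drop(columns=pdf.columns[0])

# Case 2: the "first column" is only the pandas row index, which is not
# part of the data. Hide it when printing or exporting instead.
print(trimmed.to_string(index=False))
csv_text = trimmed.to_csv(index=False, sep="|")
```

Checking `pdf.columns` is a quick way to tell the two cases apart: the row index never appears in that list.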

CodePudding user response:

You have read the data in as a pandas dataframe. From what I can see, you want a Spark dataframe. Convert from pandas to Spark: that will drop the pandas default index column, which is what you refer to as the first column. You can then rename the columns. Code below:

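df = spark.createDataFrame(dt).toDF('COUNTRY', 'COUNTRY NAME')  # dt is the pandas dataframe from the question

df.show()  # show() returns None, so call it separately instead of assigning its result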