I am trying to scrape data from

My attempt:

First, I tried to extract the blog links, but the result also included some unwanted links:

    import csv

    import httplib2
    from bs4 import BeautifulSoup, SoupStrainer

    http = httplib2.Http()
    links = []

    # http.request returns a (response headers, body) tuple
    status, response = http.request('https://www.forexcrunch.com/category/forex-weekly-outlook/gbp-usd-outlook/page/24/')

    # Parse only the <a> tags and collect unique hrefs that mention "gbp"
    soup = BeautifulSoup(response, 'html.parser', parse_only=SoupStrainer('a'))
    for link in soup.find_all('a', href=True):
        href = link['href']
        if 'gbp' in href and href not in links:
            print(href)
            links.append(href)

From these links, the blog articles containing the weekly forecast need to be filtered. Then, for each article link, the date and title should be retrieved and stored:

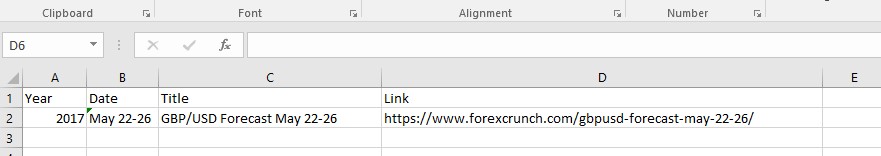

    header = ['Year', 'Date', 'Title', 'Link']

    # newline='' stops the csv module from writing blank rows on Windows
    with open('summary.csv', 'w', newline='') as f:
        writer = csv.writer(f)
        writer.writerow(header)
        for link in links:
            # Placeholder: Year, Date and Title still have to be extracted for each link
            data = ['', '', '', link]
            writer.writerow(data)

CodePudding user response:

You need to select the parent element of each article and then iterate over those elements.

The page has two types of articles: one big featured article and 10 smaller ones.

Here is some example code:

    from bs4 import BeautifulSoup
    import requests

    page = requests.get("https://www.forexcrunch.com/category/forex-weekly-outlook/gbp-usd-outlook/page/24/")
    soup = BeautifulSoup(page.content, 'html.parser')

    # The big featured article
    big_article = soup.find("div", class_='col-sm-12 col-md-6')
    title = big_article.find("div", class_="post-detail").find("h5")
    print("Title: " + title.text)
    print("Link: " + title.find("a")["href"])
    author_year = big_article.find("div", class_="post-author")
    print("Author: " + author_year.find("a").text)
    print("Date: " + author_year.find_all("li")[-1].text)
    print("---------------------")

    # The smaller articles
    all_articles = soup.find_all("div", class_='col-sm-12 col-md-3')
    for article in all_articles:
        # In each small article the first link is the title and the second is the author
        title_author_link = article.find("div", class_="post-detail").find_all("a")
        print("Title: " + title_author_link[0].text)
        print("Link: " + title_author_link[0]["href"])
        print("Author: " + title_author_link[1].text)
        print("Date: " + article.find_all("li")[-1].text)
        print("---------------------")

OUTPUT:

Title: GBP/USD Forecast May 22-26

Link: https://www.forexcrunch.com/gbpusd-forecast-may-22-26/

Author: Kenny Fisher

Date: 5 years

---------------------

Title: GBP/USD Forecast May 15-19

Link: https://www.forexcrunch.com/gbpusd-forecast-may-15-19/

Author: Kenny Fisher

Date: 5 years

---------------------
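
To get from this output to the CSV you asked about, one possible approach is to keep only the links whose slug looks like a weekly forecast and to read the date range from the slug itself. The following is only a rough sketch under some assumptions: the slug pattern `gbpusd-forecast-<month>-<day>-<day>` is inferred from the two links in the output above, so weeks that span two months or use a different slug will not match. The `articles` list, the "Unrelated post" entry and the helper names `forecast_rows` and `write_summary` are hypothetical. There is no Year column because the page only shows a relative date ("5 years").

```python
import csv
import io
import re

# Assumption: weekly forecast posts use slugs like "gbpusd-forecast-may-22-26",
# as in the sample output; other slug shapes are skipped.
FORECAST_SLUG = re.compile(r"/gbpusd-forecast-([a-z]+)-(\d{1,2})-(\d{1,2})/?$")


def forecast_rows(articles):
    """Keep only forecast articles and return rows as [Date, Title, Link]."""
    rows = []
    seen = set()
    for article in articles:
        link = article["link"]
        match = FORECAST_SLUG.search(link)
        if match is None or link in seen:
            continue  # skip unrelated or duplicate links
        seen.add(link)
        month, first_day, last_day = match.groups()
        date = f"{month.capitalize()} {first_day}-{last_day}"
        rows.append([date, article["title"], link])
    return rows


def write_summary(rows, out):
    """Write the header and the rows to any text file-like object."""
    writer = csv.writer(out)
    writer.writerow(["Date", "Title", "Link"])
    writer.writerows(rows)


# Hypothetical input: dicts collected inside the scraping loop
# instead of being printed.
articles = [
    {"title": "GBP/USD Forecast May 22-26",
     "link": "https://www.forexcrunch.com/gbpusd-forecast-may-22-26/"},
    {"title": "GBP/USD Forecast May 15-19",
     "link": "https://www.forexcrunch.com/gbpusd-forecast-may-15-19/"},
    {"title": "Unrelated post",
     "link": "https://www.forexcrunch.com/category/gbp-usd-outlook/"},
]

buffer = io.StringIO()
write_summary(forecast_rows(articles), buffer)
print(buffer.getvalue())
```

To write to disk instead of an in-memory buffer, pass `open('summary.csv', 'w', newline='')` as `out`.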

I hope I have been able to help you.