I am trying to create an ADF pipeline that does the following:

Takes in a CSV with 2 columns, `Source` and `Destination`, where each row looks like `test_container/test.txt, test_container/test_subfolder/test.txt`.

Essentially, I want to copy (or move) each file from its Source path to its Destination path. Both locations are in Azure Blob Storage.

I think there is a way to do this using a Lookup activity, but Lookup is limited to 5,000 rows and my CSV will be larger than that. Any suggestions on how this can be accomplished?

Thanks in advance,


This is a complex scenario for Azure Data Factory. As you mentioned, your CSV has more than 5,000 rows, which means just as many Source and Destination paths to process. If you build this in ADF, the pipeline would look like this:

You will use the

Lookup activityto read the Source and Destination paths. In that also you can't read all the paths due to Lookup activity limitation.Later you will iterate over the records using

ForEach activity.Now you also need to split the path so that you will get container, directory and file names separately to pass the details to

Datasetscreated for Source and Destination location. Once you split the paths, you need to use theSet variableactivity to store the Source and Destination container, directory and file names. These variables will be then passed toDatasetsdynamically. This is a tricky part as even if a single record is unable to split properly then your pipeline would fail.If above step completed successfully, then you not need to worry about

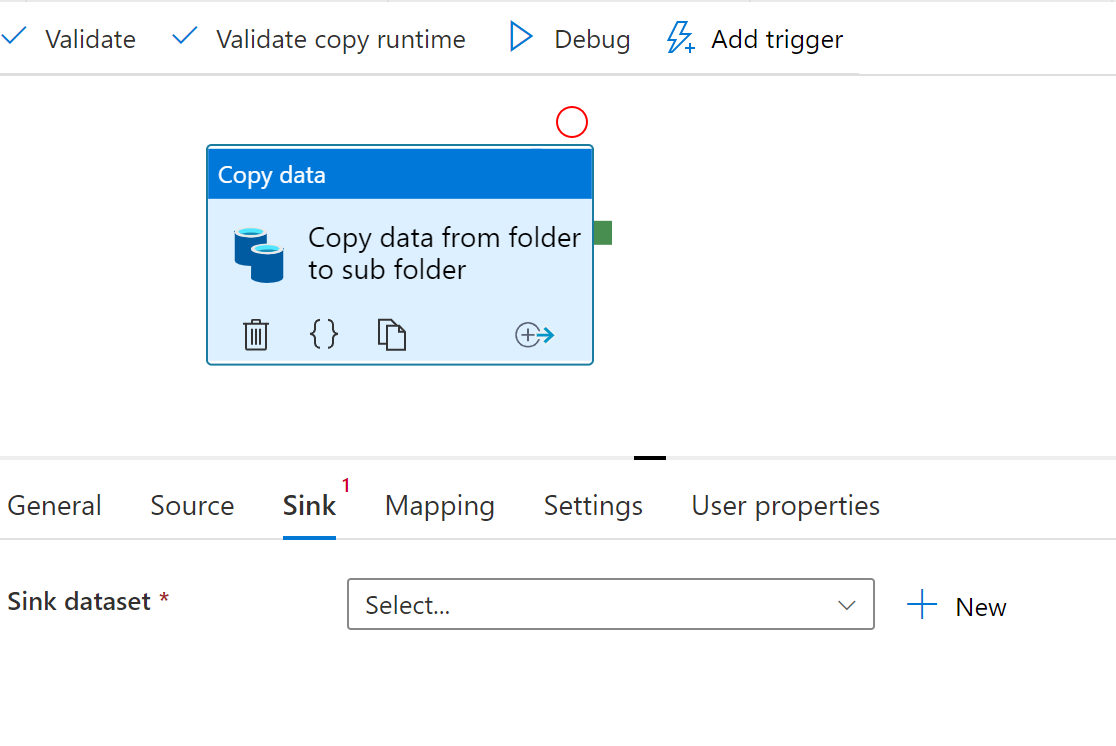

copy activity. If all the parameters got the expected values under Source and Destination tabs in copy activity it will work properly.
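Because a single badly formed row is enough to fail the pipeline, it can help to check the CSV before running it. Below is a minimal sketch, in Python, of the container/directory/file split described in step 3. The names `split_blob_path`, `find_bad_paths` and `BlobLocation` are hypothetical, and the rule it applies (first segment is the container, last segment is the file, everything in between is the directory) is an assumption about how your paths are laid out.

```python
from typing import Iterable, List, NamedTuple, Tuple


class BlobLocation(NamedTuple):
    """Hypothetical container/directory/file triple, i.e. the three values
    the parameterized Source and Destination datasets would receive."""
    container: str
    directory: str
    file_name: str


def split_blob_path(path: str) -> BlobLocation:
    """Split 'container/dir/sub/file.txt' into its three parts.

    The directory may be empty (file at the container root). Raises
    ValueError for rows that cannot be split, i.e. the rows that would
    make the pipeline fail.
    """
    cleaned = path.strip()  # the sample CSV has a space after the comma
    container, sep, rest = cleaned.partition("/")
    if not sep or not container or not rest:
        raise ValueError(f"cannot split blob path: {path!r}")
    # Everything before the last '/' is the directory, the rest is the file.
    directory, _, file_name = rest.rpartition("/")
    if not file_name:
        raise ValueError(f"path has no file name: {path!r}")
    return BlobLocation(container, directory, file_name)


def find_bad_paths(rows: Iterable[Tuple[str, str]]) -> List[Tuple[int, str]]:
    """Return (row_number, path) for every Source/Destination value that
    fails to split, so the CSV can be fixed before the pipeline runs."""
    bad = []
    for row_number, (source, destination) in enumerate(rows, start=1):
        for path in (source, destination):
            try:
                split_blob_path(path)
            except ValueError:
                bad.append((row_number, path))
    return bad
```

For example, `split_blob_path("test_container/test_subfolder/test.txt")` gives `("test_container", "test_subfolder", "test.txt")`, and a path at the container root such as `test_container/test.txt` gets an empty directory. Inside the pipeline the same split would still have to be expressed with ADF dynamic content; this sketch only mirrors the rule so bad rows can be found up front.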

My suggestion is to take a programmatic approach instead. For example, use Python to read the CSV file with the pandas module, iterate over each row, and copy the files. This works fine even with more than 5,000 records, because there is no Lookup row limit to work around.
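A minimal sketch of that programmatic approach follows. It uses the standard-library `csv` module instead of pandas to keep dependencies down (`pandas.read_csv` would work just as well), and the `azure-storage-blob` package for a server-side copy via `BlobClient.start_copy_from_url`. All function names are hypothetical, and it assumes both containers are in the same storage account as the connection string; a copy from another account would need a SAS token on the source URL.

```python
import csv
from typing import Callable, Iterable, List, Tuple

# (source_container, source_blob, dest_container, dest_blob)
CopyJob = Tuple[str, str, str, str]
CopyFn = Callable[[str, str, str, str], None]


def to_container_and_blob(path: str) -> Tuple[str, str]:
    """'container/dir/file.txt' -> ('container', 'dir/file.txt')."""
    container, sep, blob = path.strip().partition("/")
    if not sep or not container or not blob:
        raise ValueError(f"not a container/blob path: {path!r}")
    return container, blob


def read_copy_jobs(lines: Iterable[str]) -> List[CopyJob]:
    """Parse CSV text whose header row has 'Source' and 'Destination'.

    Accepts an open file or any iterable of lines; skipinitialspace
    handles the space after the comma in rows like 'a/b.txt, c/d.txt'.
    """
    reader = csv.DictReader(lines, skipinitialspace=True)
    jobs = []
    for row in reader:
        src = to_container_and_blob(row["Source"])
        dst = to_container_and_blob(row["Destination"])
        jobs.append(src + dst)
    return jobs


def copy_all(jobs: Iterable[CopyJob], copy_blob: CopyFn) -> int:
    """Run copy_blob for every job; returns how many copies were started.
    There is no row limit here, unlike the ADF Lookup activity."""
    count = 0
    for job in jobs:
        copy_blob(*job)
        count += 1
    return count


def make_azure_copier(connection_string: str) -> CopyFn:
    """Build a copy function backed by azure-storage-blob.

    Assumes source and destination are in the account named in the
    connection string (pip install azure-storage-blob).
    """
    # Imported lazily so the parsing code above works without the SDK.
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient.from_connection_string(connection_string)

    def copy_blob(src_container: str, src_blob: str,
                  dst_container: str, dst_blob: str) -> None:
        source = service.get_blob_client(container=src_container, blob=src_blob)
        target = service.get_blob_client(container=dst_container, blob=dst_blob)
        # Server-side copy: the data does not flow through this machine.
        # The copy can complete asynchronously, so for a "move" check
        # target.get_blob_properties().copy.status before deleting the source.
        target.start_copy_from_url(source.url)

    return copy_blob
```

To run it, open the CSV with `open(..., newline="")`, pass the file object to `read_copy_jobs`, and hand the result to `copy_all` together with `make_azure_copier(conn_str)`, where `conn_str` is your storage account connection string (for instance read from an environment variable of your choosing). Keeping the copy step behind a plain function makes it easy to dry-run the whole CSV with a function that only prints each job before touching any blobs.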