I am working on a project where I need to cut the background out of an image and then smooth the borders of the cut-out.

What I have as input:

Input:

What I want to do:

Cut background:

Smooth edges:

The idea is that after the background is cut, the edges are not perfect, and the result would look better if those edges were smoothed.

What I tried: I saved the mask edges:

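import cv2

import numpy as np

img_path = 'able.png'

img_data = cv2.imread(img_path)

# binarize, then keep a single channel as floating point

img_data = img_data > 128

img_data = np.asarray(img_data[:, :, 0], dtype=np.double)

# np.gradient returns the derivative along rows first, then along columns

gy, gx = np.gradient(img_data)

# squared gradient magnitude: non-zero only where the mask changes

temp_edge = gy * gy + gx * gx

temp_edge[temp_edge != 0.0] = 255.0

temp_edge = np.asarray(temp_edge, dtype=np.uint8)

cv2.imwrite('mask_edge.png', temp_edge)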

After that, I got stuck.

CodePudding user response:

You need to blur the alpha channel and then compose against a white background.

Your target picture shows no blur in the color information, only in transparency. Blurring the color data will not replicate this faithfully.

Your segmented image is 4-channel. It contains the transparency mask.

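import cv2 as cv

import numpy as np

im = cv.imread("image.png", cv.IMREAD_UNCHANGED) # keeps the alpha channel

mask = im[:,:,3]

blurred = cv.GaussianBlur(mask, ksize=(0, 0), sigmaX=7) # kernel size is derived from sigmaX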

Then compose:

color = np.float32(1/255) * im[:,:,0:3]

alpha = np.float32(1/255) * blurred

alpha = alpha[..., None] # add a dimension for broadcasting

composite = color * alpha + 1.0 * (1-alpha) # blend over white (1.0)

# composite is float in [0, 1], suitable for imshow
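The composite above is a float image in the range 0 to 1. If you want to save it with cv.imwrite instead of only displaying it, you would typically convert it back to 8-bit first. A minimal sketch, where to_uint8 and the tiny demo array are names made up for illustration:

```python
import numpy as np

def to_uint8(composite):
    """Scale a float image in [0, 1] to 8-bit values in [0, 255]."""
    scaled = np.clip(composite, 0.0, 1.0) * 255.0
    return np.round(scaled).astype(np.uint8)

# Hypothetical stand-in for the composite computed above (one pixel, three channels).
demo = np.array([[[0.0, 0.25, 1.0]]], dtype=np.float32)
demo_u8 = to_uint8(demo)
```

The result can then be passed to cv.imwrite like any other 8-bit image.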

That looks wrong because the color information in the transparent pixels can't be relied upon. It is invalid, black even, so you don't see any actual background shining through:

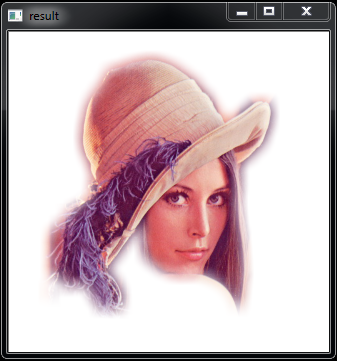

To fix that, I took the color channels from a complete (non-masked) Lena and downsampled it to fit:

You can adjust how close the blur comes to the edges of the mask by dilating or eroding the mask first, and only then blurring it.

CodePudding user response:

Approach:

Continuing from where you have stopped, load the cut background image.

- Blur the image and store in a different variable

- Convert to grayscale and find contour surrounding the region of interest

- Create a mask and draw the contour with certain thickness

- In a new variable keep the blurred pixel values in the contour while leaving the rest from the cut background image, with the help of the where() function from NumPy

Code:

import cv2

import numpy as np

img = cv2.imread('cut_background.jpg', 1)

img_blur = cv2.GaussianBlur(img, (7, 7), 0)

gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)

# everything that is not (almost) pure white counts as foreground

th = cv2.threshold(gray, 254, 255, cv2.THRESH_BINARY_INV)[1]

contours, hierarchy = cv2.findContours(th, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_NONE)

# draw the outline as a 7-pixel-wide band

mask = np.zeros(img.shape, np.uint8)

mask = cv2.drawContours(mask, contours, -1, (255, 255, 255), 7)

# take blurred pixels inside the band, original pixels elsewhere

result = np.where(mask == (255, 255, 255), img_blur, img)

You can try varying the kernel size used by the Gaussian filter and the thickness of the contour being drawn.

Hope you get the idea.