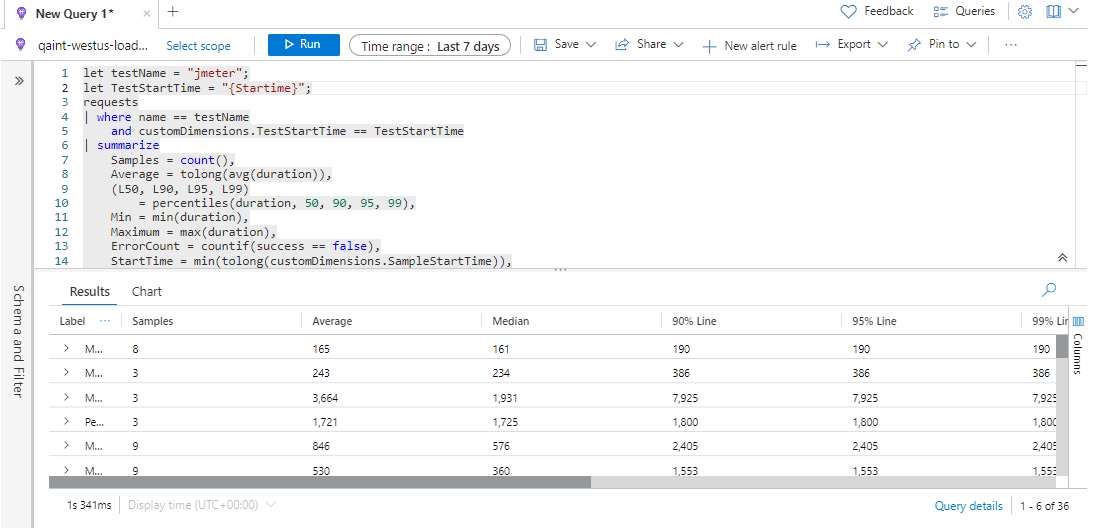

I am trying to write a Kusto query to compare two JMeter results. I already have this query, which shows the results for one test, but I don't know how to add a second test and merge the results.

The query and an image are attached for reference.

Can someone help me complete the query?

let testName = "jmeter";

let TestStartTime = "{Startime}";

requests

| where name == testName

and customDimensions.TestStartTime == TestStartTime

| summarize

Samples = count(),

Average = tolong(avg(duration)),

(L50, L90, L95, L99)

= percentiles(duration, 50, 90, 95, 99),

Min = min(duration),

Maximum = max(duration),

ErrorCount = countif(success == false),

StartTime = min(tolong(customDimensions.SampleStartTime)),

EndTime = max(tolong(customDimensions.SampleEndTime))

by Label = tostring(customDimensions.SampleLabel)

| extend s = 0

| union (

requests

| where name == testName

and customDimensions.TestStartTime == TestStartTime

| summarize

Samples = count(),

Average = tolong(avg(duration)),

(L50, L90, L95, L99)

= percentiles(duration, 50, 90, 95, 99),

Min = min(duration),

Maximum = max(duration),

ErrorCount = countif(success == false),

StartTime = min(tolong(customDimensions.SampleStartTime)),

EndTime = max(tolong(customDimensions.SampleEndTime))

| extend Label = 'TOTAL', s = 9

)

| extend

tp = Samples / ((EndTime - StartTime) / 1000.0),

KBPeriod = (EndTime - StartTime) * 1024 / 1000.0

| sort by s asc

| project

Label, Samples, Average,

Median = round(L50),

['90% Line'] = round(L90),

['95% Line'] = round(L95),

['99% Line'] = round(L99),

Min, Maximum,

['Error %'] = strcat(round(ErrorCount * 100.0 / Samples, 2), '%'),

['Throughput'] = iif(tp < 1.0,

strcat(round(tp * 60, 1), '/min'),

strcat(round(tp, 1), '/sec')

)

| project-reorder

Label, Samples, Average,

['Median'], ['90% Line'], ['95% Line'], ['99% Line'],

Min, Maximum, ['Error %'], ['Throughput']

CodePudding user response:

To "compare" two runs you need some way to tell them apart, and I fail to see any metric you can use for that.

So the easiest option would be to first run something like the Merge Results Tool, which creates an aggregate .jtl results file, and then parse that file using KQL.

The Merge Results Tool can be installed using the JMeter Plugins Manager.
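
If both runs are already in the requests table, the customDimensions.TestStartTime value that the question's query filters on may be enough to tell them apart. A minimal sketch, assuming each run writes its own distinct TestStartTime and that the request name is "jmeter" as in the question:

```kusto
// List the runs recorded under one test name, newest first.
// Assumes every JMeter run stamps its samples with a distinct TestStartTime.
requests
| where name == "jmeter"
| summarize Samples = count()
    by TestStartTime = tostring(customDimensions.TestStartTime)
| order by TestStartTime desc
```

Each row is one run. Two of the returned TestStartTime values can then be used as the filters for the two sides of a comparison.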

CodePudding user response:

I solved the problem. Here is the script for comparing the results.

Hope this helps.

requests

| where name == "{TestNameA}"

and customDimensions.TestStartTime == "{TestStartTimeA}"

| summarize

Samples_A = count(),

Average_A = tolong(avg(duration)),

(L95_A)

= percentiles(duration, 95),

Min_A = min(duration),

Maximum_A = max(duration),

ErrorCount_A = countif(success == false),

StartTime = min(tolong(customDimensions.SampleStartTime)),

EndTime = max(tolong(customDimensions.SampleEndTime))

by Label = tostring(customDimensions.SampleLabel)

| extend s = 0

| join (

requests

| where name == "{TestNameB}"

and customDimensions.TestStartTime == "{TestStartTimeB}"

| summarize

Samples_B = count(),

Average_B = tolong(avg(duration)),

(L95_B)

= percentiles(duration, 95),

Min_B = min(duration),

Maximum_B = max(duration),

ErrorCount_B = countif(success == false),

StartTime = min(tolong(customDimensions.SampleStartTime)),

EndTime = max(tolong(customDimensions.SampleEndTime))

by Label = tostring(customDimensions.SampleLabel)

| extend s = 1

) on Label

| sort by s asc

| project

Label, Samples_A, Samples_B, Average_A, Average_B,

['95% Line_A'] = round(L95_A),

['95% Line_B'] = round(L95_B),

Min_A, Min_B, Maximum_A, Maximum_B,

['Error_A %'] = strcat(round(ErrorCount_A * 100.0 / Samples_A, 2), '%'),

['Error_B %'] = strcat(round(ErrorCount_B * 100.0 / Samples_B, 2), '%')

| project-reorder

Label, Samples_A, Samples_B, Average_A, Average_B,

['95% Line_A'], ['95% Line_B'], Min_A, Min_B, Maximum_A, Maximum_B, ['Error_A %'], ['Error_B %']
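
One thing to watch with the script above: join defaults to kind=innerunique, so a label that appears in only one of the two runs is dropped from the output. The following is a shortened sketch of the same comparison, not a replacement for the script. It uses a full outer join to keep such labels and adds a percentage difference column. The RunA and RunB names and the 'Average Diff %' column are illustrative, not part of the original script.

```kusto
// Summarize each run separately, then join on the sample label.
let RunA = requests
    | where name == "{TestNameA}"
        and tostring(customDimensions.TestStartTime) == "{TestStartTimeA}"
    | summarize
        Samples_A = count(),
        Average_A = tolong(avg(duration)),
        L95_A = percentile(duration, 95)
        by Label = tostring(customDimensions.SampleLabel);
let RunB = requests
    | where name == "{TestNameB}"
        and tostring(customDimensions.TestStartTime) == "{TestStartTimeB}"
    | summarize
        Samples_B = count(),
        Average_B = tolong(avg(duration)),
        L95_B = percentile(duration, 95)
        by Label = tostring(customDimensions.SampleLabel);
RunA
// fullouter keeps labels that exist in only one run
| join kind=fullouter RunB on Label
// the right-hand key arrives as Label1; take whichever side is non-empty
| extend Label = coalesce(Label, Label1)
| project
    Label, Samples_A, Samples_B, Average_A, Average_B,
    ['Average Diff %'] = round((Average_B - Average_A) * 100.0 / Average_A, 2),
    ['95% Line_A'] = round(L95_A),
    ['95% Line_B'] = round(L95_B)
| order by Label asc
```

For a label that is missing from one run, the columns for that run come back empty, which makes samples that were added or removed between runs easy to spot.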